This article provides a detailed 2025 GPU cloud pricing comparison, showing how specialized providers like GMI Cloud deliver top-tier performance at significantly lower costs than hyperscalers. It explains how GMI Cloud’s transparent, pay-as-you-go model for NVIDIA H100 and H200 GPUs helps startups and enterprises reduce infrastructure spending by up to 70%.

What you’ll learn:

• How GPU pricing differs between specialized providers and hyperscalers

• Current on-demand rates for NVIDIA H100 and H200 GPUs across major platforms

• Why hidden fees like egress and storage charges can inflate cloud bills

• How GMI Cloud minimizes extra costs and provides predictable billing

• The advantages of GMI Cloud’s flexible, transparent pricing model

• When to choose specialized GPU clouds versus hyperscale ecosystems

• Practical strategies to optimize AI compute budgets without sacrificing performance

Choosing the right GPU cloud provider is critical for managing AI budgets. Specialized providers like GMI Cloud offer significantly lower rates, with NVIDIA H100 GPUs starting at $2.10 per GPU-hour and H200 GPUs at $2.50 per GPU-hour, representing potential savings of 40-70% compared to traditional hyperscale clouds.

This guide provides a direct GPU cloud pricing comparison to help you optimize your infrastructure spending.

Key Takeaways: GPU Pricing at a Glance

- Provider Type Matters: Specialized GPU clouds like GMI Cloud are consistently more cost-effective for high-performance compute than hyperscalers (AWS, GCP, Azure).

- GMI Cloud H100 Pricing: On-demand NVIDIA H100 GPUs are available from $2.10 per hour.

- GMI Cloud H200 Pricing: GMI Cloud offers on-demand NVIDIA H200 GPUs at $2.50/GPU-hour.

- Hyperscaler H100 Pricing: The same H100 GPUs on hyperscale clouds typically cost between $4.00 and $8.00 per hour.

- Hidden Costs: Data transfer (egress) fees and storage can add 20-40% to monthly bills on hyperscale platforms. GMI Cloud aims to keep these extras low and may negotiate or waive ingress fees.

- Proven Savings: Companies switching to GMI Cloud have seen significant cost reductions, with LegalSign.ai finding it 50% more cost-effective and Higgsfield lowering compute costs by 45%.
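As a rough check on the headline savings figure, the hourly rates quoted above can be compared directly. The sketch below is illustrative only: the function name `savings_percent` is made up for this example, and it compares list on-demand rates without accounting for egress, storage, or negotiated discounts.

```python
def savings_percent(specialized_rate: float, hyperscaler_rate: float) -> float:
    """Percentage saved by paying specialized_rate instead of hyperscaler_rate."""
    return (hyperscaler_rate - specialized_rate) / hyperscaler_rate * 100


# Rates in dollars per GPU-hour, taken from the takeaways above.
GMI_H100 = 2.10
HYPERSCALER_LOW = 4.00
HYPERSCALER_HIGH = 8.00

low = savings_percent(GMI_H100, HYPERSCALER_LOW)
high = savings_percent(GMI_H100, HYPERSCALER_HIGH)
print(f"Estimated H100 savings: {low:.2f}% to {high:.2f}%")
```

On these inputs the range works out to roughly 47% to 74%, which is in line with the 40-70% figure quoted in this guide.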

Why GPU Cloud Pricing is a Critical Startup Expense

For startups and enterprises building AI applications, GPU compute is often the single largest infrastructure cost. This expense can consume 40-60% of a startup's technical budget in its first two years.

A poorly optimized GPU strategy can burn through funding rapidly. This makes a direct GPU cloud pricing comparison essential.

Platforms like GMI Cloud are built specifically to address this challenge, providing a cost-efficient, high-performance solution that helps reduce training expenses and accelerate model development. As an NVIDIA Reference Cloud Platform Provider, GMI Cloud offers instant access to dedicated, top-tier GPUs without the premium pricing of larger, generalized cloud providers.

GPU Cloud Pricing Comparison: GMI Cloud vs. Hyperscalers

Pricing models generally fall into three categories: on-demand, reserved, and spot instances. On-demand offers the most flexibility, which is crucial for development and variable workloads.

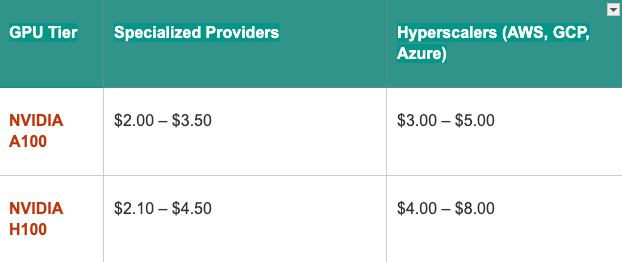

Here is a direct comparison of on-demand pricing for high-end training GPUs.

On-Demand GPU Price Comparison (Per Hour)

Note: GMI Cloud does not currently offer the A100; the A100 row reflects industry reference pricing and does not represent GMI Cloud's pricing.

Key finding: As the table shows, specialized providers like GMI Cloud offer substantially lower hourly rates for the exact same high-performance hardware.

GMI Cloud's Transparent Pricing Model

GMI Cloud emphasizes a flexible, pay-as-you-go model, allowing users to scale without long-term commitments or large upfront costs.

- NVIDIA H200: Available on-demand starting at $2.50/GPU-hour.

- NVIDIA H100 (Private Cloud): Dedicated 8x H100 configurations are available for as low as $2.10/GPU-hour.

This clear, predictable pricing structure allows teams to accurately forecast budgets and avoid the billing complexity common on hyperscale platforms.
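To illustrate how a flat per-GPU-hour rate translates into a monthly forecast, here is a minimal sketch. It assumes 730 hours in an average month, and `billed_fraction` is a hypothetical parameter for the share of the month the GPUs are actually running. It is not an official GMI Cloud calculator.

```python
HOURS_PER_MONTH = 730  # assumption: 8,760 hours per year / 12 months


def monthly_gpu_cost(rate_per_gpu_hour: float, gpus: int,
                     billed_fraction: float = 1.0) -> float:
    """Estimate monthly spend for `gpus` GPUs at a flat hourly rate.

    billed_fraction is the share of the month the GPUs are running
    (1.0 = around the clock); it is an illustrative parameter.
    """
    if not 0.0 <= billed_fraction <= 1.0:
        raise ValueError("billed_fraction must be between 0 and 1")
    return rate_per_gpu_hour * gpus * HOURS_PER_MONTH * billed_fraction


# Rates from the list above, in dollars per GPU-hour.
h100_cluster = monthly_gpu_cost(2.10, gpus=8)                          # 8x H100, always on
h200_single = monthly_gpu_cost(2.50, gpus=1)                           # 1x H200, always on
h200_part_time = monthly_gpu_cost(2.50, gpus=1, billed_fraction=0.25)  # 1x H200, 25% of the month

print(f"8x H100, full month: ${h100_cluster:,.2f}")
print(f"1x H200, full month: ${h200_single:,.2f}")
print(f"1x H200, 25% of month: ${h200_part_time:,.2f}")
```

Under these assumptions an always-on 8x H100 configuration comes to about $12,264 per month. With pay-as-you-go billing, the estimate scales down in proportion to the hours actually used.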

Beyond the Hourly Rate: The Hidden Costs of Cloud GPUs

A true GPU cloud pricing comparison must account for hidden fees, which can inflate your monthly bill.

- Data Transfer (Egress) Fees: Hyperscalers often charge $0.08–$0.12 per GB for data moving out of their cloud. Moving large datasets or model weights can add thousands to a bill.

- Storage Costs: High-performance storage for datasets and model checkpoints adds up quickly.

- Networking Charges: Distributed training may incur extra fees for inter-zone networking.

GMI Cloud's strategy is designed to minimize these extras. The provider is willing to negotiate or even waive ingress fees, a significant advantage for teams handling large-scale data.
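The egress figures above are easy to turn into a back-of-the-envelope estimate. The sketch below assumes a flat per-GB rate across the whole transfer. Real hyperscaler pricing is usually tiered and region-dependent, so treat the result as an order-of-magnitude guide. The function name and the 50 TB example volume are illustrative.

```python
def egress_cost_range(gigabytes: float,
                      low_rate: float = 0.08,
                      high_rate: float = 0.12) -> tuple[float, float]:
    """Return (low, high) egress cost in dollars for a given data volume.

    Default rates are the $0.08-$0.12 per GB range quoted above,
    applied as a flat rate (an assumption; actual pricing is tiered).
    """
    if gigabytes < 0:
        raise ValueError("gigabytes must be non-negative")
    return gigabytes * low_rate, gigabytes * high_rate


# Example: moving 50 TB (50,000 GB) of datasets and checkpoints out of a cloud.
low_cost, high_cost = egress_cost_range(50_000)
print(f"Estimated egress cost: ${low_cost:,.0f} to ${high_cost:,.0f}")
```

At these rates, 50 TB of outbound transfer lands in the range of about $4,000 to $6,000.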

How to Choose Your GPU Provider

Your choice depends on your primary needs: cost efficiency or ecosystem integration.

When to Choose GMI Cloud (Specialized Provider)

GMI Cloud is the ideal choice when:

- Cost efficiency is your top priority for managing budgets.

- You need instant, on-demand access to the latest GPU hardware like the H100 and H200.

- You prefer flexible, pay-as-you-go pricing without long-term commitments.

- Your workload is GPU-focused and doesn't rely on deep integration with a single hyperscaler's other services.

When to Choose Hyperscalers (AWS, GCP, Azure)

Hyperscalers may be a fit when:

- You need deep integration with a wide array of existing cloud services (e.g., databases, web apps).

- You have complex, pre-existing multi-cloud architectures.

- You can commit to 1-3 year reserved instances to get discounts (though this locks you in).

Conclusion: Optimize Your AI Budget with GMI Cloud

For the vast majority of AI training and inference workloads, a specialized provider offers superior value. This GPU cloud pricing comparison shows that GMI Cloud consistently delivers the same, or better, hardware at a fraction of the cost.

By partnering with GMI Cloud, teams gain instant access to a high-performance, scalable AI platform while significantly reducing their primary infrastructure expense.

Frequently Asked Questions (FAQ)

Q1: What is the cheapest GPU cloud platform for H100 GPUs?

Answer: Specialized providers are typically cheapest. GMI Cloud offers NVIDIA H100 GPUs starting at $2.10 per hour, which is significantly lower than typical hyperscaler rates of $4.00-$8.00 per hour.

Q2: How much does an NVIDIA H200 GPU cost per hour on GMI Cloud?

Answer: GMI Cloud offers on-demand NVIDIA H200 GPUs at a list price of $2.50 per GPU-hour.

Q3: What pricing models does GMI Cloud offer?

Answer: GMI Cloud primarily uses a flexible, pay-as-you-go model that gives you on-demand compute without long-term contracts. It also offers private cloud options with lower rates, such as 8x H100 clusters for as low as $2.10/GPU-hour.

Q4: How much can I save by switching to GMI Cloud?

Answer: Savings can be substantial. GMI Cloud's pricing for high-end GPUs is often 40-70% lower than hyperscalers. Real-world customers like LegalSign.ai found GMI Cloud to be 50% more cost-effective than alternatives.

Q5: How can startups reduce GPU cloud costs?

Answer: The most effective strategy is to choose a cost-efficient provider like GMI Cloud. Other methods include right-sizing instances (using an A100 instead of an H100 if possible), monitoring utilization to shut down idle instances, and using model optimization techniques like quantization.
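To see why monitoring utilization matters, it can help to express idle time as a cost. The sketch below is a simplified model in which `utilization` is the fraction of billed hours spent doing useful work. The function names and the 60% example figure are illustrative assumptions, not measured data.

```python
def effective_rate(list_rate: float, utilization: float) -> float:
    """Cost per hour of useful work when only part of the billed time is used."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return list_rate / utilization


def idle_spend(list_rate: float, billed_hours: float, utilization: float) -> float:
    """Dollars spent on billed hours during which the GPU sat idle."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be in [0, 1]")
    return list_rate * billed_hours * (1.0 - utilization)


# Example: one H100 at $2.10/GPU-hour, billed for 730 hours, 60% utilized.
rate = effective_rate(2.10, 0.60)
wasted = idle_spend(2.10, 730, 0.60)
print(f"Effective rate: ${rate:.2f} per useful GPU-hour")
print(f"Idle spend: ${wasted:,.2f} per month")
```

In this example a $2.10 list rate behaves like about $3.50 per useful GPU-hour, and shutting down idle instances would recover roughly $613 per GPU per month.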
