For today’s enterprises, artificial intelligence is no longer a backroom experiment. It is a front-line capability driving customer experiences, operational efficiency, and new business models. To unlock AI’s potential, organizations need GPU-powered cloud infrastructures that can handle the unique demands of training and inference.

For CTOs, the real priority is accessing the right compute – delivered through GPUs – that meets enterprise requirements for performance, security, scalability and cost efficiency. With platforms like GMI Cloud, these capabilities are available on demand, enabling teams to focus on innovation rather than infrastructure challenges.

Supporting the full AI lifecycle

The first priority for CTOs is to ensure that the GPU cloud infrastructure supports the entire AI lifecycle. Modern organizations do not just train once and deploy forever. Models need to be retrained with fresh data, validated under new conditions, and deployed repeatedly in production. This requires seamless movement between environments for training, fine-tuning and inference.

An effective architecture should therefore make it easy to provision GPU resources dynamically, allocate them to different teams, and move workloads from research to production with minimal friction. This avoids bottlenecks and accelerates the path from experimentation to business impact.

Choosing the right GPUs for the job

Not all GPUs are equal, and CTOs must carefully evaluate their hardware strategy. High-memory GPUs are indispensable for serving large-scale language and vision models, while more specialized GPUs deliver better performance-per-watt for inference workloads. Features such as multi-instance GPU (MIG) partitioning can allow multiple tasks to run in isolation on the same card, improving utilization and lowering costs.

Equally important is future-proofing. AI models are growing in size and complexity, so enterprises that only provision for today’s needs risk being outpaced tomorrow. Investing in GPUs with scalability and flexibility in mind ensures the infrastructure can evolve alongside the workloads it supports.

Scaling with elasticity and reliability

AI demand is rarely static. Peaks occur during product launches, seasonal traffic surges, or unexpected market events. A strong enterprise GPU cloud architecture must therefore provide elasticity. Auto-scaling clusters and intelligent provisioning ensure that applications maintain performance during surges, while releasing resources when demand subsides.

Reliability is equally important. Business-critical AI applications cannot afford downtime. This makes fault tolerance, load balancing, and automated failover essential features. By embedding resilience into the GPU cloud layer, CTOs can ensure consistent service levels even when individual nodes fail.

Data, storage and networking considerations

Too often, enterprises focus narrowly on compute power while overlooking the supporting ecosystem. GPUs are only as effective as the data pipelines feeding them. High-throughput, low-latency storage is essential for rapid model loading and real-time inference. Without this, even the fastest GPU can sit idle waiting for input.

Networking also plays a critical role. Low-latency interconnects are vital for multi-GPU training, while geographically distributed inference workloads benefit from data locality – placing GPU clusters close to where data is generated or consumed. For global organizations, a well-designed architecture minimizes cross-region latency and bandwidth costs while maintaining compliance with local data regulations.

Security, compliance and governance

As AI moves into production, the security of GPU cloud environments is nonnegotiable. Enterprises must enforce role-based access controls, encrypted communications, and strict audit trails. GPU-powered clusters should be deployed in secure containerized environments to ensure workloads remain isolated.

Compliance is another priority. From GDPR in Europe to HIPAA in healthcare, industries impose strict requirements on how data is stored and processed. CTOs must confirm that their GPU cloud providers and internal deployments meet these obligations without compromising on performance. Building compliance into the architecture from the start avoids costly retrofits later.

Cost efficiency and utilization

GPUs are powerful, but they are also expensive. For CTOs, cost optimization is as important as raw performance. The key is maximizing utilization. Techniques such as model quantization, pruning, and batching help reduce the computational footprint of inference workloads, allowing more tasks to be processed per GPU.

Monitoring tools that provide real-time visibility into GPU usage can highlight inefficiencies – such as idle GPUs or underutilized clusters – that drain budgets. Scheduling systems that prioritize workloads and allocate resources intelligently can further lower cost-per-inference, ensuring that GPU investments generate maximum business value.

Orchestration, observability and developer experience

Enterprises succeed with GPU cloud architectures not only because of hardware, but because of the tools and workflows layered on top. Developer productivity depends on streamlined orchestration pipelines, standardized APIs, and self-service portals for requesting resources.

Equally important is observability. CTOs must provide engineering teams with dashboards that track GPU utilization, inference latency, throughput and error rates. This visibility enables faster debugging and performance tuning, shortening development cycles and reducing time-to-market for AI applications.

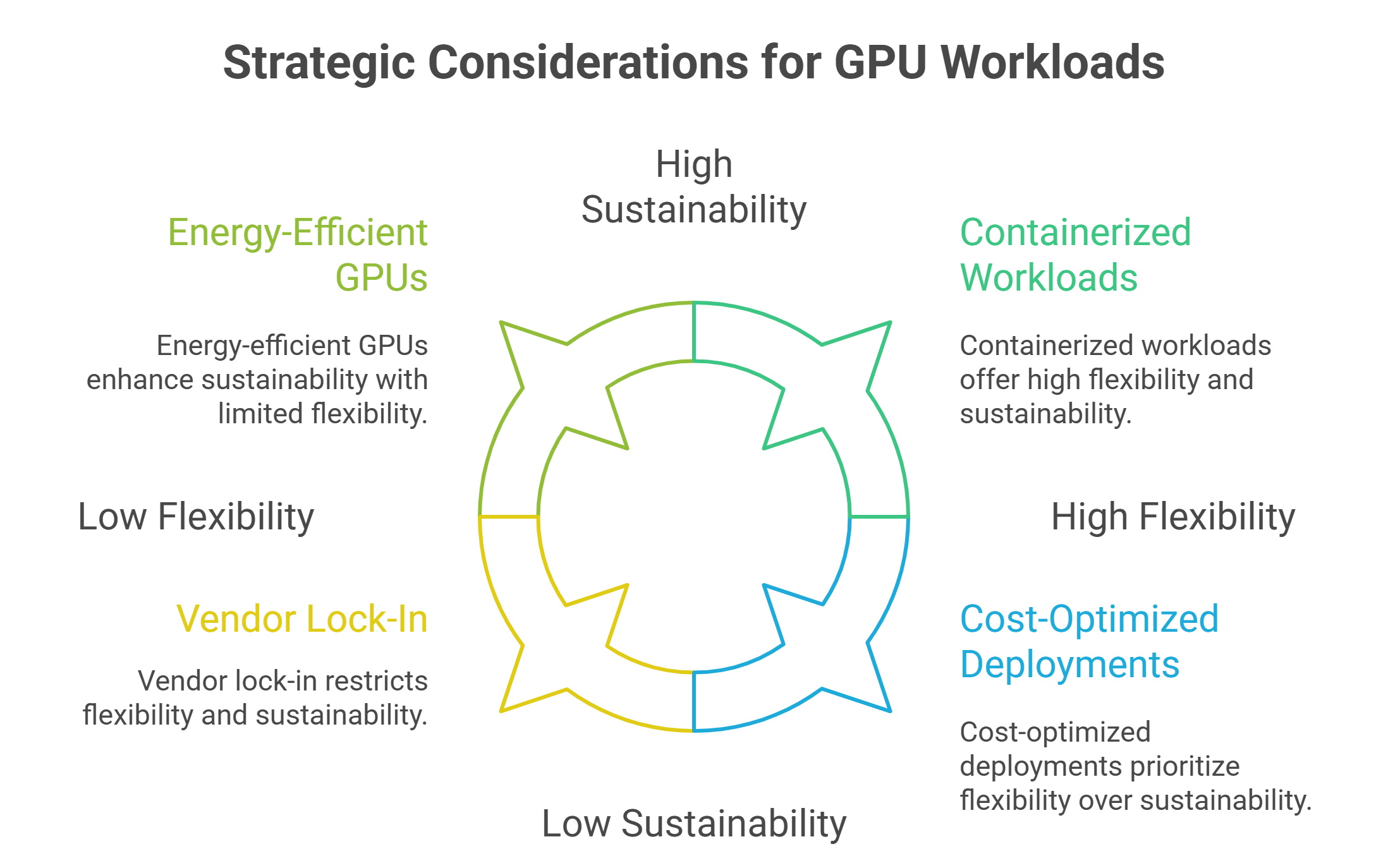

Multi-cloud and hybrid flexibility

Few enterprises today are locked into a single cloud provider. GPU workloads are increasingly deployed across multi-cloud and hybrid environments to balance cost, compliance and resilience. CTOs should prioritize architectures that make workloads portable across providers without extensive reconfiguration.

Containerization and Kubernetes-based orchestration help achieve this flexibility, enabling workloads to shift dynamically between on-premises GPU clusters and cloud environments. This not only reduces vendor lock-in but also opens opportunities to optimize costs by matching workloads to the most efficient GPU instances available.

Building for sustainability

As enterprises scale GPU usage, energy efficiency becomes a strategic concern. Large GPU clusters consume significant power, and sustainability is now a board-level priority. CTOs should consider energy-efficient GPUs, workload consolidation strategies, and data center partners committed to renewable energy.

Monitoring carbon impact alongside performance and cost is becoming a best practice. A GPU cloud architecture that balances sustainability with business needs not only lowers operating expenses but also aligns with corporate environmental, social, and governance (ESG) goals.

What CTOs should prioritize

Designing an enterprise GPU cloud architecture requires balancing many factors at once. CTOs should prioritize support for the full AI lifecycle, choosing GPUs that align with both current and future workloads. Elasticity and reliability ensure that performance remains stable under fluctuating demand, while robust data pipelines, storage, and networking prevent bottlenecks.

Security and compliance must be built into every layer, safeguarding sensitive data and meeting regulatory requirements. Cost efficiency comes from maximizing GPU utilization, while developer productivity relies on strong orchestration, APIs, and observability. Multi-cloud flexibility reduces vendor dependence, and sustainability ensures long-term viability.

By addressing these priorities, CTOs can design GPU cloud infrastructures that not only power today’s AI workloads but also adapt to the innovations of tomorrow.

Frequently Asked Questions About Enterprise GPU Cloud Architecture for CTOs

What should CTOs prioritize first in an enterprise GPU cloud architecture?

Ensure the platform supports the full AI lifecycle—training, fine-tuning, validation, and production inference—with dynamic GPU provisioning, smooth hand-offs between research and production, and minimal friction for team allocation. This is how you turn experiments into business impact quickly.

How do I choose the “right” GPUs for enterprise workloads?

Match hardware to workload: high-memory GPUs for large language and vision models, and more specialized GPUs for efficient inference. Features like multi-instance GPU (MIG) help isolate multiple tasks on one card to boost utilization. Plan for growth—future-proofing matters as models get larger.

How do we scale with elasticity while staying reliable?

Use auto-scaling clusters and intelligent provisioning for traffic peaks, then release capacity as demand drops. Build reliability in with fault tolerance, load balancing, and automated failover so business-critical AI stays available even when individual nodes fail.

Which data, storage, and networking choices prevent GPU bottlenecks?

Provide high-throughput, low-latency storage for fast model loads and real-time inference. Use low-latency interconnects for multi-GPU training, and place GPU clusters close to data sources (data locality) to cut cross-region latency and bandwidth costs while meeting local compliance rules.

How can we improve cost efficiency without sacrificing performance?

Maximize GPU utilization: apply model quantization, pruning, and batching to reduce compute per request; monitor real-time GPU usage to find idle or underused capacity; and schedule workloads intelligently to lower cost-per-inference. These levers turn raw GPU power into ROI.