Artificial intelligence models do not exist in isolation. They pass through a lifecycle that transforms raw data and algorithms into production-ready systems capable of generating real-world value. Understanding this lifecycle is critical for CTOs, data scientists, and engineering teams building enterprise AI strategies. Each stage – training, validation, deployment and inference – comes with unique technical challenges and infrastructure demands.

Cloud-based GPUs play a central role in enabling this journey. Their parallel processing capabilities accelerate compute-intensive workloads, while cloud platforms add flexibility ,scalability, and cost efficiency. For organizations navigating the complexities of AI, GPU cloud infrastructure offers the backbone that allows models to evolve from prototypes to production systems.

Data preparation and preprocessing

The lifecycle begins with data. Collecting, cleaning and labeling data is often the most time-consuming part of any AI project. High-quality datasets determine how effectively a model will learn. This stage includes removing inconsistencies, normalizing inputs, and sometimes augmenting the dataset to cover edge cases.

While preprocessing does not always require GPU acceleration, cloud GPUs can be leveraged for large-scale operations such as feature extraction or transforming massive image and video datasets. The ability to spin up GPU resources on demand allows teams to accelerate what might otherwise be a bottleneck.

Training AI models with GPUs

Training is where models learn patterns by processing enormous volumes of data through complex neural network architectures. This step is computationally intensive, involving billions – or even trillions – of matrix operations. CPUs can handle training, but at a fraction of the speed. GPUs, by contrast, are optimized for parallelism, enabling much faster convergence of deep learning models.

Training on GPUs in the cloud offers two significant advantages. First, teams gain access to cutting-edge hardware without capital investment in on-premises infrastructure. Second, cloud elasticity allows for scaling up GPU clusters to handle large models and datasets, then scaling down once training is complete. This flexibility prevents resources from sitting idle and optimizes costs.

Techniques such as distributed training, mixed-precision computation, and gradient checkpointing are often used in tandem with GPUs to further reduce training time. For organizations developing large language models, vision systems, or recommendation engines, these efficiencies can shorten time-to-market dramatically.

Validation and evaluation

Once a model is trained, it must be validated against unseen data to measure accuracy, generalization and robustness. Validation requires running inference repeatedly across large test datasets, which can be accelerated by GPUs.

At this stage, metrics such as precision, recall and F1 score are analyzed to ensure the model is not overfitting. Cloud GPUs make it possible to evaluate models quickly at scale, allowing teams to iterate and fine-tune hyperparameters without lengthy delays. Fast validation cycles improve productivity and help ensure models perform reliably before deployment.

Deployment into production environments

After validation, models move into deployment, where they are integrated into real-world applications. Here, infrastructure decisions play a defining role. Deployments can range from batch inference jobs that process large volumes of data periodically to real-time systems that deliver predictions in milliseconds.

Cloud GPUs shine in both contexts. For real-time applications – such as fraud detection, recommendation systems, or autonomous navigation – GPU acceleration ensures predictions are delivered with low latency. For batch workloads, GPUs allow high throughput, reducing the time required to process massive datasets.

Containerization and orchestration frameworks like Kubernetes are often used to manage deployments. These tools make it easier to allocate GPU resources dynamically, maintain version control, and roll out updates with minimal downtime.

Inference at scale

Inference marks the stage where the model generates value from live data. While training is about building intelligence, inference is about applying it. The demands here vary depending on the application. A chatbot needs millisecond response times, while a predictive maintenance system may tolerate slight delays but require high throughput for sensor data streams.

GPUs are particularly effective for inference tasks that involve large models or high request volumes. Features like batching, multi-instance GPU partitioning, and model quantization can optimize inference performance and lower costs. Cloud platforms add scalability, enabling automatic adjustments to GPU resources based on traffic spikes. This ensures consistent performance even when demand fluctuates.

Monitoring and optimization

The lifecycle does not end once a model is deployed. Continuous monitoring is essential to detect model drift, performance degradation, or anomalies in production data. If a model begins producing less accurate predictions due to changes in input data distributions, retraining may be necessary.

Cloud platforms equipped with observability tools allow teams to track inference latency, throughput, and error rates in real time. GPUs play a role here too, since monitoring systems themselves often rely on AI-driven analytics that benefit from accelerated processing. This closed feedback loop enables proactive optimization and maintains business value over time.

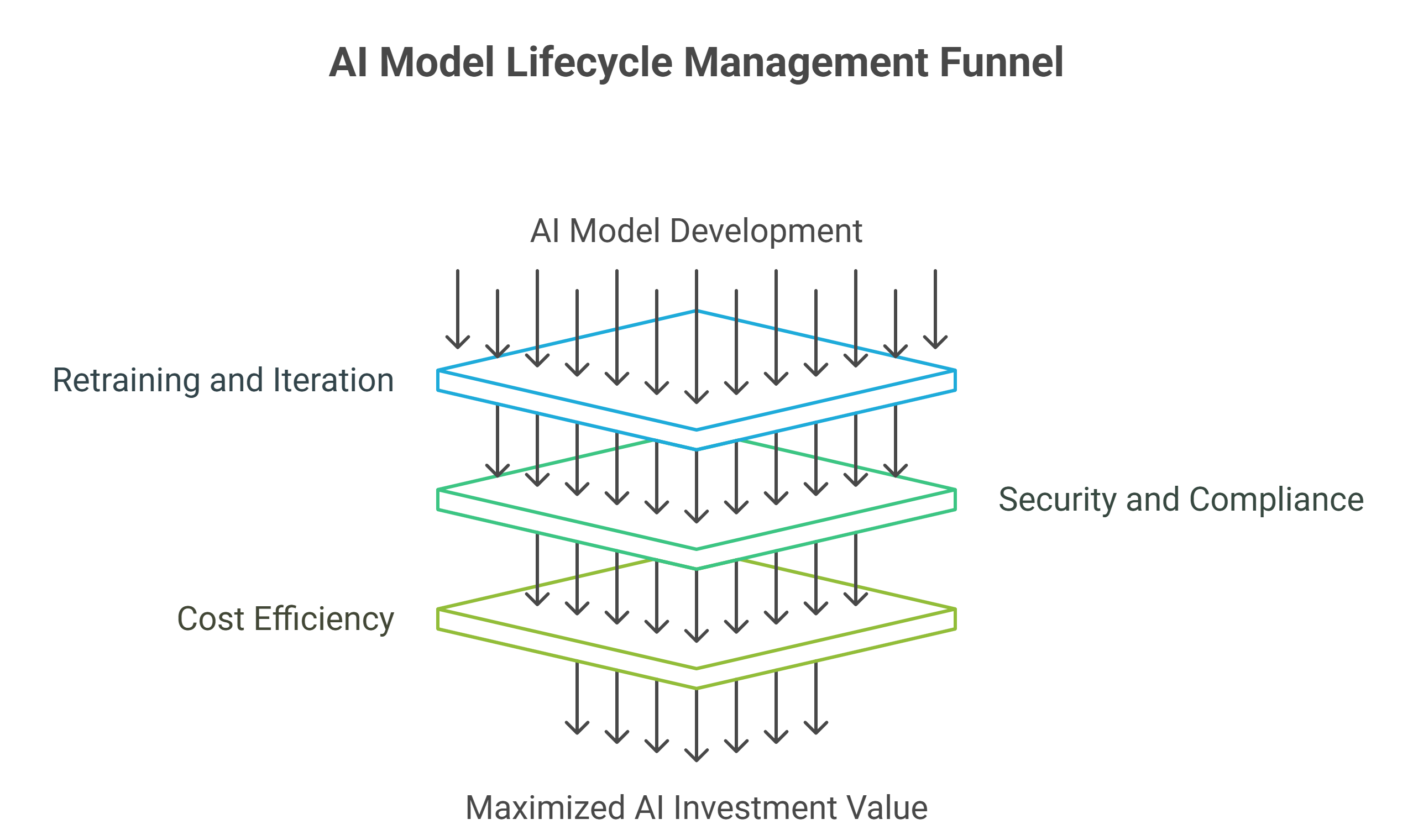

Retraining and iteration

AI models are never static. As new data becomes available, retraining ensures that models remain relevant and accurate. Cloud GPUs accelerate this process by reducing training cycles, allowing enterprises to update models more frequently. This is particularly important in dynamic domains like finance, e-commerce, and cybersecurity, where data patterns shift rapidly.

Enterprises often adopt a continuous learning pipeline, where models are retrained on a rolling basis and redeployed into production automatically. Cloud GPU elasticity supports this iterative approach without requiring permanent overprovisioning of hardware.

Security and compliance throughout the lifecycle

Across every stage – training, validation, deployment and inference – security and compliance are paramount. Enterprises must safeguard sensitive data, enforce role-based access controls, and ensure encryption in transit and at rest. Regulatory frameworks such as GDPR, HIPAA, and PCI DSS impose strict requirements on how AI workloads are handled.

Cloud providers offering GPU infrastructure often provide built-in compliance certifications, but CTOs must ensure governance policies are enforced consistently. This alignment between technical performance and regulatory responsibility is what allows AI systems to scale safely.

Cost efficiency in lifecycle management

While GPU acceleration is indispensable for modern AI, it comes at a cost. Cloud platforms mitigate this through flexible pricing models, pay-as-you-go options, and reserved capacity discounts. To maximize ROI, enterprises must monitor GPU utilization, avoid idle clusters, and use techniques like pruning and quantization to lower computational demand.

A well-designed lifecycle strategy aligns costs with business outcomes, ensuring GPUs are used where they add measurable value – whether that is faster training, lower-latency inference, or higher developer productivity.

Final thoughts

The lifecycle of an AI model is a continuous journey from data collection to real-world deployment and beyond. Each stage requires different capabilities, and cloud GPUs provide the acceleration, flexibility, and scalability needed to meet these demands.

For CTOs and technical leaders, the priority is to design workflows that integrate GPU acceleration seamlessly across training, validation, deployment, and inference. By doing so, enterprises not only shorten development cycles but also unlock the full value of AI in production.

GMI Cloud’s GPU-optimized platform supports this lifecycle end to end, enabling organizations to train, deploy and scale AI models efficiently. By combining advanced hardware with elastic cloud infrastructure, enterprises can ensure that their AI investments deliver both immediate impact and long-term adaptability.

Frequently Asked Questions about the AI Model Lifecycle with Cloud GPUs

What are the stages of an AI model lifecycle, and where do cloud GPUs help most?

The lifecycle spans data preparation, training, validation, deployment, and inference. Cloud GPUs accelerate compute-heavy parts—speeding training, fast validation at scale, and low-latency or high-throughput inference—while the cloud adds flexibility and cost control.

Do I need cloud GPUs for data preparation and preprocessing?

Not always. But for large-scale feature extraction or transforming massive image/video datasets, on-demand cloud GPUs can remove bottlenecks and keep pipelines moving.

Why is training on cloud GPUs more efficient than on CPUs?

Deep learning training involves huge parallel tensor ops. Cloud GPUs deliver faster convergence and elastic scaling (spin clusters up for big runs, down afterward). Techniques like distributed training, mixed precision, and gradient checkpointing further cut training time.

How should I deploy for real-time vs. batch inference on cloud GPUs?

Use GPUs for low-latency, real-time predictions and for high-throughput batch jobs. Containerization and orchestration (e.g., Kubernetes) help allocate GPU resources dynamically, manage versions, and roll out updates with minimal downtime.

How do I monitor, retrain, and optimize models in production?

Continuously track inference latency, throughput, and error rates to catch model drift or degradation. When data shifts, retrain and redeploy—cloud GPU elasticity supports frequent iterations. Features like batching, multi-instance GPU partitioning, and model quantization help optimize performance and cost.