TL;DR: For AI startups in 2025, a GPU cloud cost comparison shows that specialized providers offer significant savings over hyperscalers. GMI Cloud is one of the most competitive high-performance options, with NVIDIA H100 GPUs starting at $2.10/hour and next-generation NVIDIA H200 GPUs at $3.35/hour (container pricing). Hyperscalers like AWS and Azure charge a premium for their ecosystem, and their on-demand H100 rates can be 2-3x higher, which makes specialized platforms like GMI Cloud a more sustainable way to extend your startup's runway.

Key Takeaways:

- Prioritize Specialized Clouds: Specialized providers like GMI Cloud typically offer lower per-hour GPU costs than hyperscalers.

- GMI Cloud Price Advantage: GMI Cloud offers transparent, pay-as-you-go pricing for top-tier hardware.

- Hyperscaler Costs: Major clouds (AWS, GCP, Azure) integrate many services, but you pay a premium for this convenience.

- Beware Hidden Fees: The hourly rate isn't the total cost. Data egress, storage, and networking fees can add 20-40% to your monthly bill.

- Instant Access is Key: Avoid providers with long lead times. GMI Cloud provides instant access to dedicated GPUs, accelerating your time-to-market.

Why Your GPU Cloud Choice is Critical for Startup Survival

For AI startups, GPU compute is the single largest infrastructure expense, often consuming 40-60% of the technical budget in the first two years. A poorly optimized GPU strategy can burn through seed funding months ahead of schedule.
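
The runway math behind this is simple division: months of runway equal cash on hand divided by monthly burn. The sketch below uses hypothetical figures (a $2M seed round, $100k total monthly burn with GPU compute at 50% of it, inside the 40-60% range above, and GPU spend running 40% over plan) to show how a GPU overrun shortens runway.

```python
def runway_months(cash_usd: float, monthly_burn_usd: float) -> float:
    """Months until cash runs out at a constant monthly burn."""
    if monthly_burn_usd <= 0:
        raise ValueError("monthly_burn_usd must be positive")
    return cash_usd / monthly_burn_usd


cash = 2_000_000        # hypothetical seed round
planned_burn = 100_000  # hypothetical total monthly burn
gpu_share = 0.50        # hypothetical: GPU compute as a share of total burn
gpu_overrun = 0.40      # hypothetical: GPU spend 40% over plan (idle or oversized instances)

# Only the GPU portion of the burn grows; everything else stays on plan.
actual_burn = planned_burn + planned_burn * gpu_share * gpu_overrun
print(f"planned runway: {runway_months(cash, planned_burn):.1f} months")
print(f"actual runway:  {runway_months(cash, actual_burn):.1f} months")
```

Under these assumptions, the GPU overrun alone costs a little over three months of runway (20.0 months planned versus about 16.7 actual).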

This is where a GPU cloud cost comparison becomes your most critical strategic tool. The market is split into two main categories:

- Hyperscale Clouds (AWS, GCP, Azure): These giants offer a vast ecosystem of integrated services. However, their GPU compute is often priced at a premium, and accessing the latest hardware can involve waitlists.

- Specialized Clouds (GMI Cloud): These providers focus specifically on high-performance GPU compute. They typically offer better pricing, faster access to the latest hardware, and more transparent billing.

For startups, a provider like GMI Cloud is often the superior choice. As an NVIDIA Reference Cloud Platform Provider, GMI Cloud delivers a cost-efficient, high-performance solution specifically designed to reduce training expenses and speed up model development.

2025 GPU Cloud Cost Comparison: GMI Cloud vs. The Market

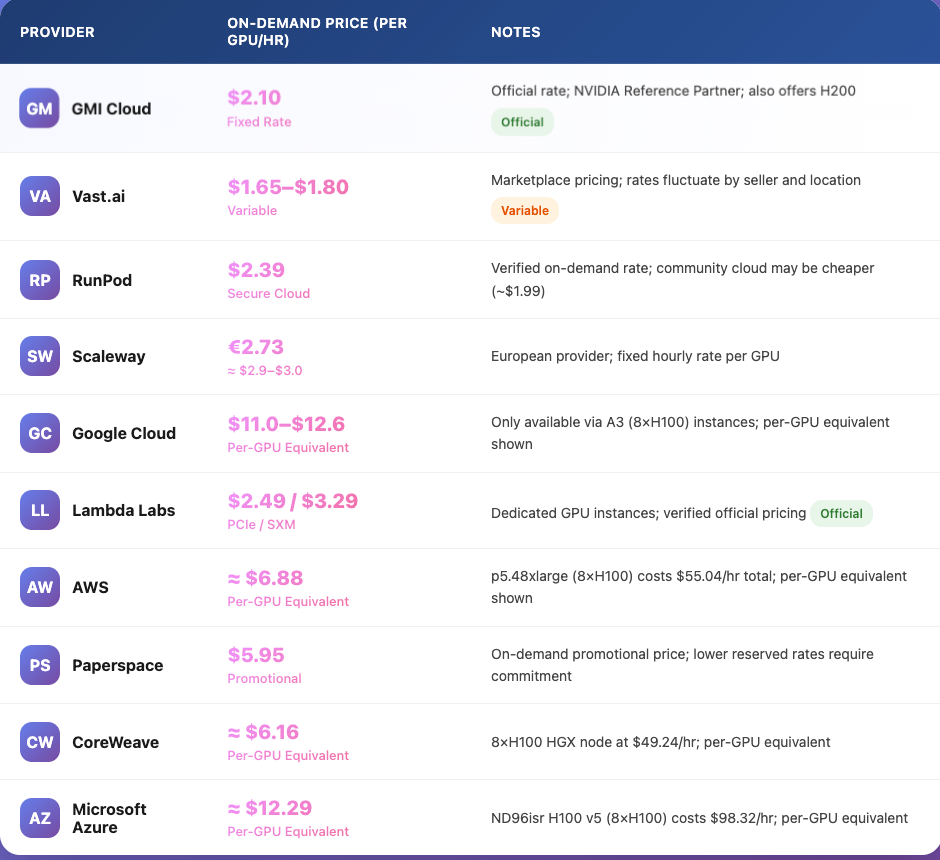

A direct GPU cloud cost comparison shows a clear price divide. Specialized providers, especially GMI Cloud, consistently lead on cost-efficiency for high-end GPUs.

Note: Prices below for providers other than GMI Cloud are based on publicly available data as of late 2025 and are marked as such. They are intended for comparison only and are subject to change; always check each provider's official pricing page.

On-Demand NVIDIA H100 Pricing (Per GPU/Hour): 10 Providers

Table Conclusion: The data is clear. For a startup's core AI workloads, choosing a specialized provider like GMI Cloud over a hyperscaler can cut your compute bill by 50% or more.
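
To make the percentage concrete, the sketch below compares a month of on-demand compute for a hypothetical 8-GPU H100 node. The $2.10/hour rate is GMI Cloud's quoted starting price; the hyperscaler rate is a hypothetical 2.5x multiple (the midpoint of the "2-3x" range above), not any provider's published price, and 730 hours is simply the average length of a month.

```python
HOURS_PER_MONTH = 730  # average hours per month (8,760 hours / 12)


def monthly_gpu_cost(rate_per_gpu_hour: float, num_gpus: int,
                     hours: float = HOURS_PER_MONTH) -> float:
    """On-demand compute cost for GPUs kept running for `hours`."""
    return rate_per_gpu_hour * num_gpus * hours


def savings_pct(cheaper: float, pricier: float) -> float:
    """Percentage saved by choosing the cheaper option."""
    return (1 - cheaper / pricier) * 100


specialized_rate = 2.10                    # GMI Cloud H100 starting rate quoted above
hyperscaler_rate = specialized_rate * 2.5  # hypothetical: midpoint of the "2-3x" range

spec_cost = monthly_gpu_cost(specialized_rate, num_gpus=8)
hyper_cost = monthly_gpu_cost(hyperscaler_rate, num_gpus=8)
print(f"specialized: ${spec_cost:,.0f}/month")
print(f"hyperscaler: ${hyper_cost:,.0f}/month")
print(f"savings: {savings_pct(spec_cost, hyper_cost):.0f}%")
```

A 2x price gap works out to 50% savings and a 3x gap to about 67%, which is consistent with the "50% or more" figure.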

A Deeper Look: Hyperscalers vs. Specialized Providers

The Hyperscale Providers (AWS, GCP, Azure)

Hyperscalers are a good choice if your startup is already deeply embedded in their ecosystem (e.g., using their proprietary databases, storage, and APIs) and you can commit to long-term reserved instances.

- Pros: Deep integration with a wide array of services.

- Cons: Significantly higher on-demand costs, complex billing, and "hidden fees" for data transfer and storage that can inflate your bill.

The Specialized Providers (GMI Cloud, Lambda, etc.)

Specialized providers are built for one purpose: to deliver the best GPU performance for the lowest possible cost.

- Pros: Far more cost-efficient, transparent pay-as-you-go pricing, and near-instant access to the latest GPUs like the H100 and H200.

- Cons: Less integration with non-AI cloud services (though this is often irrelevant for a dedicated training or inference workload).

Spotlight on GMI Cloud:

GMI Cloud exemplifies the specialized provider advantage. It's not just about low cost; it's about providing a platform optimized for AI. GMI Cloud offers:

- Top-Tier Hardware: Instant access to dedicated NVIDIA H100 and H200 GPUs.

- Optimized Services: A high-performance Inference Engine with fully automatic scaling and a Cluster Engine for managing complex GPU workloads.

- Superior Networking: Non-blocking InfiniBand networking ensures ultra-low latency, which is critical for distributed training.

- Flexible Model: A true pay-as-you-go model that lets startups scale without long-term commitments or large upfront costs.

Beyond the Hourly Rate: Hidden Costs in Your GPU Cloud Bill

An accurate GPU cloud cost comparison must look beyond the sticker price. Be sure to budget for:

- Data Transfer Fees: Hyperscalers are notorious for high "egress" fees, charging you to move your own data and model weights out of their cloud.

- Storage Costs: Storing massive datasets and model checkpoints costs money, often $0.10-$0.30 per GB monthly.

- Idle Time Waste: Many teams waste 30-50% of their budget on GPUs that are provisioned but not actively training.
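
A rough way to see how these items stack up is to estimate the full monthly bill rather than the compute line alone. In the sketch below, the storage rate falls inside the $0.10-$0.30 range above; the egress rate ($0.09/GB), data volumes, and 60% utilization are hypothetical placeholders, not any provider's published pricing.

```python
from dataclasses import dataclass


@dataclass
class MonthlyUsage:
    gpu_rate: float      # $ per GPU-hour
    gpu_hours: float     # GPU-hours billed (provisioned, busy or idle)
    utilization: float   # fraction of billed hours doing useful work (0-1)
    storage_gb: float    # average GB stored over the month
    storage_rate: float  # $ per GB-month
    egress_gb: float     # GB transferred out of the cloud
    egress_rate: float   # $ per GB (hypothetical; varies by provider and tier)


def cost_breakdown(u: MonthlyUsage) -> dict[str, float]:
    compute = u.gpu_rate * u.gpu_hours
    storage = u.storage_gb * u.storage_rate
    egress = u.egress_gb * u.egress_rate
    return {
        "compute": compute,
        # Idle waste is already part of compute; broken out for visibility.
        "idle_waste": compute * (1 - u.utilization),
        "storage": storage,
        "egress": egress,
        "total": compute + storage + egress,
        # Storage + egress as a share of the headline compute bill.
        "overhead_pct": (storage + egress) / compute * 100,
    }


usage = MonthlyUsage(
    gpu_rate=2.10, gpu_hours=8 * 730, utilization=0.6,
    storage_gb=15_000, storage_rate=0.20,
    egress_gb=5_000, egress_rate=0.09,
)
for item, value in cost_breakdown(usage).items():
    print(f"{item:>12}: {value:,.2f}")
```

With these placeholder inputs, storage and egress add roughly 28% on top of compute, and idle time accounts for 40% of the compute spend itself.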

Conclusion: How to Optimize Your GPU Spend

Recommendation: For AI startups in 2025, the most effective strategy is to use a specialized provider like GMI Cloud for your core AI workloads.

You can reduce costs by 40-70% by following these steps:

- Choose GMI Cloud: Start with the most cost-effective platform. GMI Cloud's leading rates for H100 and H200 GPUs provide an immediate financial advantage.

- Right-Size Your Instance: Don't use an H100 for a task a smaller GPU can handle. GMI Cloud offers a range of options to match your workload.

- Monitor Utilization: Shut down idle instances. GMI Cloud's flexible platform makes this easy.

- Leverage Auto-Scaling: Use services like the GMI Cloud Inference Engine, which supports fully automatic scaling to match workload demands, ensuring you only pay for what you use.
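
For the utilization step, one generic option on any NVIDIA node is to poll `nvidia-smi` in its CSV query mode and flag GPUs that sit near zero. This is a minimal sketch, not a GMI Cloud API; the 5% threshold and the function names are hypothetical. A single reading is only a snapshot, so sample repeatedly before deciding to shut anything down.

```python
import subprocess

IDLE_THRESHOLD_PCT = 5  # hypothetical cutoff; tune for your workload


def query_nvidia_smi() -> str:
    """Return 'index, utilization' CSV lines for every GPU on this machine."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,utilization.gpu",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def parse_utilization(csv_text: str) -> dict[int, int]:
    """Map GPU index -> utilization percent, skipping unreadable values."""
    usage: dict[int, int] = {}
    for line in csv_text.strip().splitlines():
        fields = [field.strip() for field in line.split(",")]
        if len(fields) != 2 or not fields[1].isdigit():
            continue  # e.g. blank lines or "[N/A]"
        usage[int(fields[0])] = int(fields[1])
    return usage


def idle_gpus(usage: dict[int, int], threshold: int = IDLE_THRESHOLD_PCT) -> list[int]:
    """GPU indices whose utilization is below the threshold."""
    return sorted(idx for idx, util in usage.items() if util < threshold)


# On a GPU node: idle_gpus(parse_utilization(query_nvidia_smi()))
sample = "0, 97\n1, 0\n2, 3\n3, 88\n"
print(idle_gpus(parse_utilization(sample)))  # [1, 2]
```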

By pairing the right strategies with the right platform, you can extend your startup's runway and focus on innovation, not infrastructure costs.

Frequently Asked Questions (FAQ)

What is the cheapest GPU cloud provider for AI startups?

"Cheapest" depends on the hardware. For high-end NVIDIA H100 GPUs, specialized providers like GMI Cloud offer some of the lowest on-demand rates, starting at $2.10/hour. This is significantly more cost-effective than hyperscalers like AWS or Azure.

Why are specialized GPU clouds like GMI Cloud cheaper than AWS?

Specialized providers focus on optimizing one thing: GPU compute. They avoid the massive overhead and integrated service "tax" that hyperscalers pass on to customers. GMI Cloud's efficient operations and direct partnerships allow it to offer top-tier hardware at a lower price.

What GPUs does GMI Cloud offer?

GMI Cloud provides instant access to dedicated NVIDIA H100 and H200 GPUs. GMI Cloud also plans to add support for the next-generation NVIDIA Blackwell series soon.

What is the difference between GMI Cloud's Inference Engine and Cluster Engine?

The Inference Engine (IE) is for deploying models and supports fully automatic scaling to handle fluctuating traffic with ultra-low latency. The Cluster Engine (CE) is for managing scalable GPU workloads (like training) and requires customers to adjust compute power manually via console or API.
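
For intuition about the difference between automatic scaling and manual adjustment, here is a generic target-tracking rule, similar in spirit to the Kubernetes Horizontal Pod Autoscaler. It is not the Inference Engine's actual algorithm; the requests-per-second metric, the per-replica target, and the replica bounds are all hypothetical.

```python
import math


def desired_replicas(current_rps: float, target_rps_per_replica: float,
                     min_replicas: int = 1, max_replicas: int = 16) -> int:
    """Target tracking: enough replicas to keep each near its target load."""
    if target_rps_per_replica <= 0:
        raise ValueError("target_rps_per_replica must be positive")
    needed = math.ceil(current_rps / target_rps_per_replica)
    # Clamp so traffic bursts can't run away and quiet periods keep a floor.
    return max(min_replicas, min(max_replicas, needed))


for rps in (0, 450, 5_000):
    print(rps, "req/s ->", desired_replicas(rps, target_rps_per_replica=100))
```

An autoscaler reruns a rule like this continuously as traffic changes; with manual scaling, you make the equivalent decision yourself and apply it through the console or API.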

Are reserved instances worth it for a startup?

Reserved instances (RIs) offer discounts for 1-3 year commitments, which is risky for startups with uncertain growth. A better strategy is to use a flexible, pay-as-you-go provider like GMI Cloud. This avoids long-term lock-in while still providing low, predictable costs.
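
The risk can be quantified with a simple break-even check. A reserved commitment bills the discounted rate for every hour of the term whether or not the GPU is busy, while on-demand bills only the hours you use. The commitment therefore wins only if average utilization over the whole term exceeds 1 minus the discount. The 40% discount and utilization levels below are hypothetical, and the model ignores upfront-payment options and price changes during the term.

```python
def break_even_utilization(discount: float) -> float:
    """Average utilization above which a reserved commitment beats on-demand."""
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    # Reserved:  rate * (1 - discount) * term_hours   (billed for every hour)
    # On-demand: rate * utilization * term_hours      (billed only when used)
    return 1 - discount


def cheaper_option(rate: float, discount: float, utilization: float,
                   term_hours: float) -> tuple[str, float]:
    """Return the cheaper pricing model and its cost over the term."""
    on_demand = rate * utilization * term_hours
    reserved = rate * (1 - discount) * term_hours
    return ("reserved", reserved) if reserved < on_demand else ("on-demand", on_demand)


HOURS_PER_YEAR = 8_760
print(f"break-even utilization: {break_even_utilization(0.40):.0%}")
for util in (0.5, 0.8):
    choice, cost = cheaper_option(2.10, 0.40, util, HOURS_PER_YEAR)
    print(f"utilization {util:.0%}: {choice} (${cost:,.0f} per GPU-year)")
```

Under these assumptions, a startup that cannot count on keeping its GPUs more than 60% busy for the full one-to-three-year term is better off paying as it goes.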