In 2025, AI video generation tools from platforms like Runway, Pika, and HeyGen are moving from experiments to enterprise tools. While these "open beta" platforms offer rapid content creation, businesses face significant bottlenecks in performance, cost, and scalability. The solution lies in the underlying infrastructure. For companies needing to deploy scalable AI video workloads, a high-performance GPU cloud provider like GMI Cloud is essential for reducing latency and controlling costs.

- Market Status: Most AI video tools are in "open beta," meaning features are evolving, but performance and security can vary.

- Business Need: Companies use AI video to cut production costs and accelerate marketing, but face latency and scaling challenges.

- The Bottleneck: Real-time video generation is one of the most demanding AI workloads, requiring massive GPU power.

- Infrastructure Solution: Platforms like GMI Cloud provide specialized AI infrastructure, including H100/H200 GPUs and low-latency inference engines, which are critical for production-grade AI video.

- Proven Results: The generative video company Higgsfield reduced its compute costs by 45% and cut inference latency by 65% by using GMI Cloud.

Introduction — The Rise of AI Video Generation

The field of AI video generation for business is exploding in 2025. Tools that generate video from text prompts (text-to-video), create realistic AI avatars, or animate existing images are no longer just novelties. Leading platforms like Runway, Pika Labs, Synthesia, HeyGen, and Recraft are enabling businesses to create marketing, training, and e-commerce content at unprecedented speed.

However, many of these powerful tools remain in an "open beta" phase. This signifies a period of rapid evolution, but it also means businesses must navigate inconsistent performance, shifting pricing, and potential limitations in deployment. As companies move from testing to full-scale adoption, the underlying infrastructure becomes the most critical factor for success.

Why Businesses Are Adopting AI Video Tools

The adoption of enterprise AI video tools is driven by clear business value.

- Cost Savings: AI video dramatically reduces the need for expensive film shoots, actors, and lengthy post-production cycles.

- Speed & Acceleration: Marketing and training teams can generate localized or personalized video content in minutes, not weeks.

- Brand Consistency: AI avatars and style-consistent models ensure that all corporate communications maintain a uniform look and feel.

Despite these advantages, enterprises quickly encounter significant pain points:

- High Latency: Slow generation speeds for high-definition video hinder real-time applications.

- Scalability Issues: Public SaaS platforms often struggle to handle high-volume batch processing or sudden spikes in demand.

- Data Security: Uploading sensitive corporate data to third-party beta platforms raises significant compliance and security concerns.

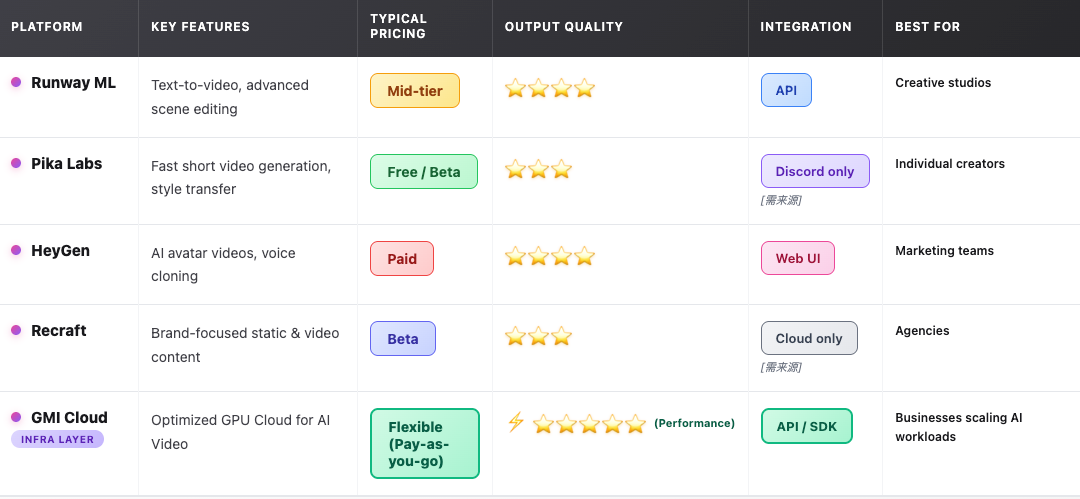

Comparison of Leading Open Beta AI Video Platforms

The current market offers a range of tools, each suited for different tasks. However, for businesses planning to scale, the most important component is the infrastructure layer that powers these tools.

Comparison: AI Video Platforms vs. Infrastructure

💡 Note: GMI Cloud is not a content generation tool. It is the high-performance infrastructure backbone that businesses use to deploy their own AI video tools, run open-source models, or build custom solutions with maximum performance and efficiency.

Infrastructure Matters — The Hidden Bottleneck in AI Video

AI video generation is one of the most computationally intensive tasks in modern AI. It combines the challenges of large language models (understanding prompts) with the demands of high-resolution image rendering, all sequenced over time.

This process requires immense GPU acceleration for both training the models and, more importantly, for inference (the act of generating the video). Most SaaS platforms run on generalized cloud environments, which are not optimized for these specific, ultra-low-latency workloads.

This is precisely why businesses building or scaling enterprise AI video solutions turn to specialized infrastructure. Platforms built on GMI Cloud AI infrastructure, for example, are designed to handle these demanding tasks, achieving significantly lower latency and higher throughput for scalable AI video workloads.

How GMI Cloud Supports AI Video Generation at Scale

GMI Cloud provides the production-ready foundation for businesses that find off-the-shelf tools too slow, too expensive, or not secure enough. It bridges the gap between beta-level performance and enterprise-grade reliability.

Key Features for AI Video Workloads:

- High-Performance GPU Access: GMI Cloud offers instant, on-demand access to top-tier NVIDIA GPUs, including the H100 and H200, which are essential for complex video rendering.

- Optimized Inference Engine: The GMI Cloud Inference Engine is purpose-built for real-time AI, providing ultra-low latency and automatic scaling. This is ideal for running generative video models efficiently.

- Scalable Cluster Engine: For businesses training their own custom video models, the GMI Cloud Cluster Engine provides a robust environment for managing and orchestrating large-scale GPU workloads.

- Cost-Efficient Pricing: With a flexible, pay-as-you-go model and proven cost savings of up to 50% compared to other providers, GMI Cloud makes scaling AI video economically viable.

- High-Speed Networking: GMI Cloud uses InfiniBand networking to eliminate data bottlenecks, ensuring high-throughput connectivity for distributed workloads.

For teams looking to optimize latency or deploy custom models, GMI Cloud provides the high-performance foundation needed for production success.

Real-World Success: Higgsfield Scales Generative Video with GMI Cloud

A real-world case study, rather than a hypothetical scenario, demonstrates the impact of specialized infrastructure. Higgsfield, a company redefining generative video, faced significant infrastructure challenges.

The Challenge: Higgsfield needed to power high-throughput inference for real-time video generation. Their CEO, Alex Mashrabov, noted, "Generative video is one of the most demanding AI workloads. It requires real-time inference, top-tier performance, and the ability to scale without tradeoffs."

The Solution: Higgsfield partnered with GMI Cloud to build a customized infrastructure stack. GMI Cloud provided access to the newest NVIDIA GPUs and its optimized Cluster and Inference Engines, tailored for Higgsfield's unique workload.

The Results:

- 65% Reduction in Inference Latency: Video generation became faster and smoother for users.

- 45% Lower Compute Costs: GMI Cloud's efficiency and pricing significantly reduced operational expenses.

- 200% Increase in Throughput: Higgsfield could serve more users simultaneously without performance degradation.

This case study shows a clear pipeline for success: test models on open beta platforms, then move to GMI Cloud for scalable, cost-effective, and high-performance production.
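To make the reported percentages concrete, the following is a minimal sketch that applies them to a set of baseline figures. Only the three percentages (65%, 45%, 200%) come from the case study; the function name and the baseline numbers in the usage example are hypothetical and chosen purely for illustration.

```python
# Percentages reported in the Higgsfield case study.
LATENCY_REDUCTION_PCT = 65    # inference latency reduced by 65%
COST_REDUCTION_PCT = 45       # compute costs lowered by 45%
THROUGHPUT_INCREASE_PCT = 200 # throughput increased by 200%


def apply_reported_changes(latency_s, monthly_cost_usd, videos_per_hour):
    """Apply the reported percentage changes to hypothetical baseline values.

    Returns a tuple (new_latency_s, new_monthly_cost_usd, new_videos_per_hour).
    """
    # A 65% reduction leaves 35% of the original latency.
    new_latency = latency_s * (100 - LATENCY_REDUCTION_PCT) / 100
    # A 45% reduction leaves 55% of the original cost.
    new_cost = monthly_cost_usd * (100 - COST_REDUCTION_PCT) / 100
    # A 200% increase means the original plus twice the original, i.e. 3x.
    new_throughput = videos_per_hour * (100 + THROUGHPUT_INCREASE_PCT) / 100
    return new_latency, new_cost, new_throughput


# Hypothetical baseline: 10 s per clip, $1,000 per month, 100 clips per hour.
latency, cost, throughput = apply_reported_changes(10.0, 1000.0, 100.0)
print(f"latency: {latency} s, cost: ${cost}, throughput: {throughput} clips/hour")
```

Note that a 200% increase corresponds to three times the original throughput, not twice, which is easy to misread.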

Conclusion — What Businesses Should Do Next

The 2025 "open beta" landscape for AI video generation tools offers powerful capabilities for businesses. However, relying on the default infrastructure of these SaaS platforms creates a ceiling on performance, cost, and scalability.

The key to unlocking AI video generation for business lies in optimizing the underlying compute layer. As generative video moves from experiment to a core business function, the performance of your GPU cloud for video AI becomes your primary competitive advantage.

If your business is exploring AI video generation, building your own models, or hitting performance walls with current tools, explore how GMI Cloud's GPU infrastructure can give you the speed, flexibility, and reliability you need to scale without limits.

Frequently Asked Questions (FAQ)

What is AI video generation for business?

It is the use of artificial intelligence to create or modify video content for commercial purposes, such as marketing campaigns, corporate training, product visualizations, and personalized customer engagement.

Why is specialized GPU infrastructure so important for AI video tools?

AI video generation is extremely computationally expensive. It requires high-performance GPUs to process complex models and render high-resolution frames quickly. Standard cloud infrastructure often leads to slow generation times (high latency) and high costs.

How does GMI Cloud help businesses using AI video?

GMI Cloud provides specialized AI infrastructure optimized for demanding AI workloads. This includes instant access to top-tier NVIDIA GPUs (like the H100/H200), an Inference Engine for ultra-low latency, and a Cluster Engine for managing scalable workloads. This results in faster, more reliable, and more cost-effective AI video generation, as seen with clients like Higgsfield.

Can I run my own custom or open-source AI video models on GMI Cloud?

Yes. GMI Cloud is ideal for this. You can use the Cluster Engine to manage containerized workloads or the Inference Engine to serve open-source or custom models on dedicated endpoints with high performance and automatic scaling.

What is the difference between GMI Cloud's Inference Engine and Cluster Engine for video?

- Inference Engine (IE): Best for running (serving) pre-trained models. It is optimized for real-time, low-latency video generation and scales automatically with user demand.

- Cluster Engine (CE): Best for managing and training models. It gives you control over a cluster of GPUs to orchestrate complex, large-scale training jobs or manage custom containerized environments.