As artificial intelligence continues to move from research labs into production environments, the focus has shifted from building large models to deploying them efficiently at scale. Inference – the stage of the AI lifecycle where trained models process new data – is where organizations actually deliver value to users. Whether it is a voice assistant generating responses in real time, a fraud detection system scanning thousands of transactions per second, or a recommendation engine adapting instantly to user behavior, inference engines determine how quickly and reliably these models can operate.

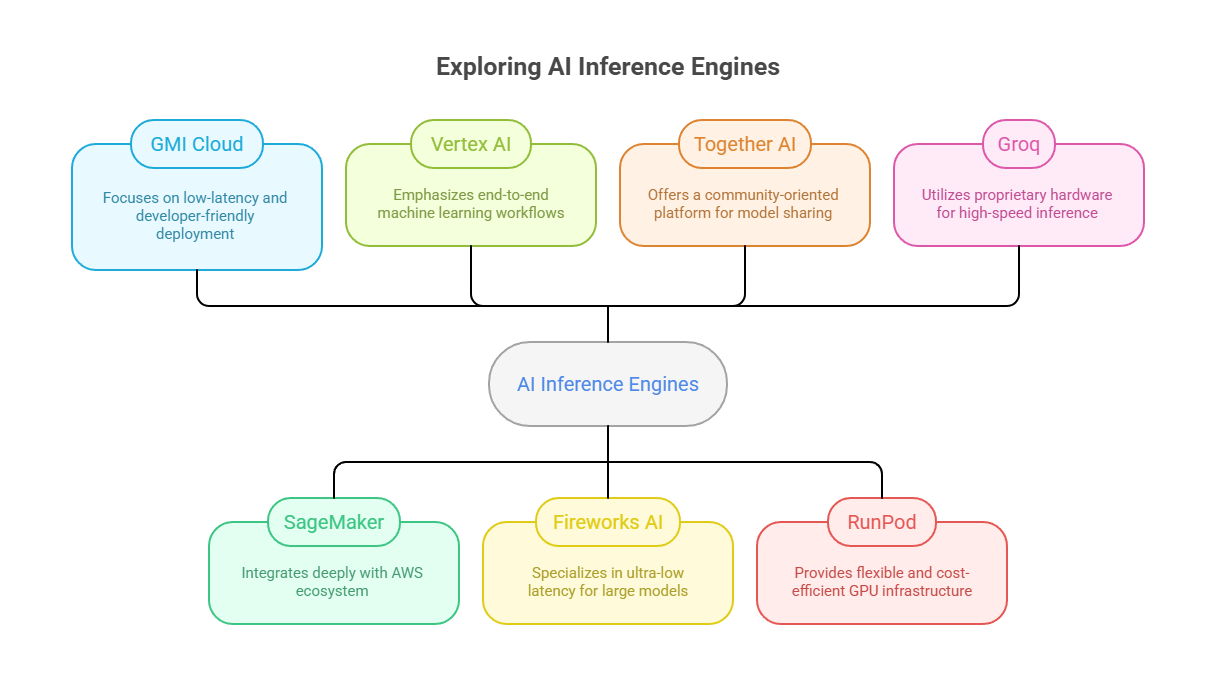

Cloud providers and specialized platforms have responded by offering inference-optimized environments that remove much of the complexity of deployment. But the market is now crowded, and each option comes with its own performance profile, pricing model, and level of control. GMI Cloud’s Inference Engine is one such platform, but decision-makers often compare it against larger ecosystems like Amazon SageMaker and Google Vertex AI, as well as newer entrants such as Fireworks AI, Together AI, RunPod and Groq. Understanding how these solutions differ is critical for teams choosing the right partner for their workloads.

The role of inference engines in modern AI

An inference engine is responsible for managing how models are deployed, scaled, and optimized in production. It abstracts away infrastructure complexity, so developers can focus on building applications rather than provisioning servers or tuning hardware manually. Key considerations include latency, throughput, scalability, framework compatibility, and cost efficiency.

For real-time applications – like chatbots, image recognition systems, or financial transaction monitoring – inference must happen within milliseconds. Batch inference, by contrast, can tolerate more latency but demands cost-effective throughput at scale. Most cloud inference platforms attempt to address both needs, but the degree of optimization varies significantly between providers.
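A toy cost model makes the real-time versus batch trade-off concrete. This is a minimal sketch. It assumes each forward pass costs a fixed overhead plus a per-request cost. The millisecond figures are hypothetical, not measurements of any platform. Larger batches amortize the overhead and raise throughput. However, every request in a batch waits for the whole pass, so per-request latency rises too.

```python
from dataclasses import dataclass


@dataclass
class BatchCostModel:
    """Toy latency model: one forward pass = fixed overhead + per-item cost (hypothetical numbers)."""
    overhead_ms: float = 20.0   # hypothetical fixed cost per pass (scheduling, kernel launches)
    per_item_ms: float = 2.0    # hypothetical incremental cost per request in the batch

    def latency_ms(self, batch_size: int) -> float:
        # Every request in the batch waits for the full pass to finish.
        return self.overhead_ms + self.per_item_ms * batch_size

    def throughput_rps(self, batch_size: int) -> float:
        # Requests completed per second when passes run back to back.
        return batch_size / (self.latency_ms(batch_size) / 1000.0)


def largest_batch_within_slo(model: BatchCostModel, slo_ms: float, max_batch: int = 256) -> int:
    """Largest batch size whose latency stays within the latency budget (0 if none fits)."""
    best = 0
    for b in range(1, max_batch + 1):
        if model.latency_ms(b) <= slo_ms:
            best = b
    return best


if __name__ == "__main__":
    m = BatchCostModel()
    for b in (1, 8, 64):
        print(f"batch={b:3d} latency={m.latency_ms(b):6.1f} ms throughput={m.throughput_rps(b):7.1f} req/s")
    print("largest batch for a 50 ms budget:", largest_batch_within_slo(m, 50.0))
```

With these assumed numbers, a 50 ms latency budget caps batches at 15 requests. A batch job with no latency budget would instead push the batch size as high as memory allows, to maximize throughput.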

GMI Cloud: Inference built for speed and control

GMI Cloud positions its Inference Engine as a purpose-built platform for low-latency AI workloads. Unlike hyperscale providers that integrate inference into sprawling ecosystems, GMI emphasizes performance, predictability, and developer-friendly deployment.

One of its strengths is ultra-low latency scheduling. Requests are routed to GPUs through optimized load-balancing mechanisms, keeping the time between input and output in the millisecond range. Automatic scaling expands and contracts GPU resources with traffic, maintaining consistent performance even during demand spikes. GMI also supports a broad range of AI frameworks, so teams can bring existing models without extensive re-engineering.
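GMI does not describe its scheduler in detail here. The following is therefore only a generic illustration of one common load-balancing strategy, least-outstanding-requests routing. Under this strategy, each new request goes to the GPU worker with the fewest requests in flight. The class and worker names are hypothetical.

```python
from typing import Dict, Iterable


class LeastLoadedRouter:
    """Send each request to the worker with the fewest in-flight requests.

    A generic sketch of one load-balancing strategy, not any provider's actual scheduler.
    """

    def __init__(self, workers: Iterable[str]) -> None:
        self.in_flight: Dict[str, int] = {w: 0 for w in workers}
        if not self.in_flight:
            raise ValueError("need at least one worker")

    def acquire(self) -> str:
        # Pick the least-loaded worker; ties break on name so routing is deterministic.
        worker = min(self.in_flight, key=lambda w: (self.in_flight[w], w))
        self.in_flight[worker] += 1
        return worker

    def release(self, worker: str) -> None:
        # Call when a request on `worker` completes.
        if self.in_flight[worker] <= 0:
            raise ValueError(f"{worker} has no in-flight requests")
        self.in_flight[worker] -= 1


if __name__ == "__main__":
    router = LeastLoadedRouter(["gpu-0", "gpu-1", "gpu-2"])  # hypothetical worker names
    first = [router.acquire() for _ in range(3)]  # spreads across all three GPUs
    router.release("gpu-1")                       # gpu-1 finishes its request early
    print(first, router.acquire())                # the next request goes back to gpu-1
```

A production scheduler would also account for model placement, GPU memory, and health checks. This sketch only shows why routing on live load, rather than round-robin, avoids piling new work onto a GPU that is still busy with long-running requests.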

Because GMI Cloud operates out of Tier-4 data centers with inference-optimized GPUs, customers benefit from both reliability and raw speed. Cost efficiency is another differentiator, with a usage-based model designed to maximize performance per dollar. For organizations that need control without navigating the overhead of a hyperscale platform, GMI offers a streamlined and transparent approach.
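"Performance per dollar" is easiest to compare as cost per unit of work, such as dollars per million generated tokens. The sketch below assumes a GPU billed by the hour that sustains a steady token throughput at full utilization. Both the prices and the throughputs are hypothetical placeholders, not quotes from GMI or any other provider.

```python
def cost_per_million_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """USD per 1M generated tokens for hourly-billed hardware at a sustained throughput."""
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Hypothetical options: a cheaper, slower GPU vs a pricier, faster one.
    options = {"gpu_a": (2.00, 1000.0), "gpu_b": (4.00, 2500.0)}
    for name, (price, tps) in options.items():
        print(f"{name}: ${cost_per_million_tokens(price, tps):.3f} per 1M tokens")
```

Here the option with twice the hourly price is cheaper per token because it sustains 2.5 times the throughput. For that reason, per-dollar comparisons should use measured throughput at realistic utilization, not hourly list prices alone.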

SageMaker: Deep integration with AWS

Amazon SageMaker is one of the most widely adopted platforms for deploying and managing machine learning models. Its strength lies in integration with the broader AWS ecosystem. Teams that already run workloads in AWS can spin up inference endpoints that connect seamlessly with data pipelines, monitoring tools, and security frameworks.

SageMaker supports both real-time and batch inference, along with features like multi-model endpoints, automatic scaling, and built-in model monitoring. However, this breadth comes with complexity. The platform assumes solid AWS expertise, and costs can escalate quickly when multiple services are combined. Latency is competitive, but because inference is one of many features rather than a single focus, it may not always match the performance tuning of specialized providers.
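Automatic scaling on platforms like SageMaker is commonly driven by a target-tracking policy. Under such a policy, you choose a target load per instance (for example, invocations per instance), and instances are added or removed to stay near it. The sketch below is a simplified version of that rule with hypothetical thresholds. It is not AWS's exact algorithm.

```python
import math


def desired_instances(invocations_per_min: float, target_per_instance: float,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Instances needed to keep per-instance load at or below the target, within bounds.

    A simplified target-tracking rule; real autoscalers also apply cooldowns,
    evaluation periods, and smoothing before changing capacity.
    """
    if target_per_instance <= 0:
        raise ValueError("target_per_instance must be positive")
    needed = math.ceil(invocations_per_min / target_per_instance)
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    for load in (0, 1_200, 10_000):  # hypothetical invocations per minute
        print(load, "->", desired_instances(load, target_per_instance=500))
```

The minimum and maximum bounds are what keep costs predictable. The floor keeps a warm instance available for latency-sensitive traffic, and the ceiling caps spend during a traffic spike.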

For organizations deeply embedded in AWS infrastructure, SageMaker reduces friction. But for teams seeking simplicity, or those primarily concerned with millisecond-level responsiveness, the trade-offs in cost and complexity need to be considered carefully.

Vertex AI: Streamlined machine learning on Google Cloud

Google’s Vertex AI takes a slightly different approach, emphasizing end-to-end machine learning workflows within the Google Cloud ecosystem. Its inference services integrate tightly with Cloud Storage, BigQuery, and TensorFlow, making it a natural fit for teams already using Google’s stack.

Vertex AI supports custom and pre-trained models, with options for online prediction and batch inference. One of its advantages is access to Google’s global infrastructure, which can reduce latency for geographically distributed applications. Like SageMaker, however, inference is just one component of a much larger ecosystem. The platform is designed to guide users from experimentation to deployment, which is powerful but can feel heavyweight for teams that only need inference at scale.
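The latency benefit of serving from multiple regions can be estimated from round-trip times (RTTs). This sketch assumes each client can be routed to whichever region answers fastest. The RTT figures are invented for illustration. The region identifiers are real Google Cloud region names used only as labels, not a statement about where Vertex AI endpoints should be deployed.

```python
from statistics import mean
from typing import Dict

# Hypothetical round-trip times (ms) from client locations to serving regions.
# Region IDs are real Google Cloud region names, used here only as labels.
RTT_MS: Dict[str, Dict[str, float]] = {
    "new_york":  {"us-central1": 30.0, "europe-west4": 85.0, "asia-northeast1": 160.0},
    "frankfurt": {"us-central1": 105.0, "europe-west4": 10.0, "asia-northeast1": 230.0},
    "tokyo":     {"us-central1": 140.0, "europe-west4": 225.0, "asia-northeast1": 5.0},
}


def nearest_region(client: str) -> str:
    """Region with the lowest measured RTT for this client."""
    rtts = RTT_MS[client]
    return min(rtts, key=rtts.get)


def mean_rtt_single_region(region: str) -> float:
    """Average RTT if every client is served from one region."""
    return mean(RTT_MS[c][region] for c in RTT_MS)


def mean_rtt_nearest() -> float:
    """Average RTT if each client is served from its nearest region."""
    return mean(RTT_MS[c][nearest_region(c)] for c in RTT_MS)


if __name__ == "__main__":
    print("single region:", round(mean_rtt_single_region("us-central1"), 1), "ms")
    print("nearest region:", round(mean_rtt_nearest(), 1), "ms")
```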

For developers working with TensorFlow or leveraging Google’s data analytics tools, Vertex AI is compelling. But the learning curve, ecosystem lock-in, and pricing complexity may pose challenges for smaller teams or those seeking maximum agility.

Fireworks AI: A newcomer focused on inference speed

Fireworks AI is one of several newer players focusing narrowly on inference optimization. Its value proposition centers on enabling developers to deploy models quickly with ultra-low latency, particularly for large language models. Unlike hyperscale platforms, Fireworks AI positions itself as lean and performance-driven, with APIs that simplify deployment and scaling.
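Latency claims for LLM serving are easiest to check by timing a streamed response yourself. This is a minimal sketch. It assumes you have recorded when the request was sent and when each token arrived, for example with `time.perf_counter()` as streamed chunks come in. The timestamps in the example are made up. Time to first token (TTFT) captures perceived responsiveness, and tokens per second after the first token capture decode speed.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class StreamMetrics:
    ttft_s: float      # time to first token: request sent -> first token received
    decode_tps: float  # tokens per second after the first token arrives
    total_s: float     # request sent -> last token received


def stream_metrics(sent_at: float, token_times: Sequence[float]) -> StreamMetrics:
    """Derive latency metrics from a send timestamp and per-token arrival timestamps (seconds)."""
    if not token_times:
        raise ValueError("no tokens received")
    ttft = token_times[0] - sent_at
    total = token_times[-1] - sent_at
    decode_window = token_times[-1] - token_times[0]
    # With only one token there is no decode window, so report 0 tokens/s.
    decode_tps = (len(token_times) - 1) / decode_window if decode_window > 0 else 0.0
    return StreamMetrics(ttft_s=ttft, decode_tps=decode_tps, total_s=total)


if __name__ == "__main__":
    # Made-up timings: first token after 200 ms, then 100 more tokens 10 ms apart.
    times = [0.2 + 0.01 * i for i in range(101)]
    m = stream_metrics(sent_at=0.0, token_times=times)
    print(f"TTFT={m.ttft_s * 1000:.0f} ms, decode={m.decode_tps:.0f} tok/s, total={m.total_s:.2f} s")
```

Reporting TTFT and decode throughput separately matters because a provider can excel at one and not the other. A chatbot feels fast mainly through TTFT, while long generations are dominated by decode speed.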

This makes it attractive to startups and research groups experimenting with cutting-edge models. However, as a younger company, Fireworks AI may not yet offer the same depth of ecosystem integrations, compliance certifications, or global data center footprint as larger providers. For early adopters who prioritize speed above all else, it is worth evaluating, but questions about long-term stability and support remain open.

Together AI: Collaboration-driven model hosting

Together AI takes a community-oriented approach, offering a platform where developers can run, share, and collaborate on AI models. Its inference services are built around accessibility and transparency, with an emphasis on open-source frameworks and democratizing access to large models.

Performance is competitive for standard inference workloads, and Together AI’s API-first design makes integration straightforward. It differentiates itself less on raw throughput and more on providing shared infrastructure for the AI community. For organizations that value openness and interoperability, Together AI is a natural philosophical fit. For enterprises with strict latency, compliance, or SLA requirements, it may not yet provide the guarantees of more established inference engines.
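Together AI, like several other API-first providers in this comparison, documents an OpenAI-compatible chat completions API, so one request shape often works across providers. The sketch below builds, but does not send, such a request using only the Python standard library. The base URL, API key, and model name are placeholders. Replace them with values from the provider's own documentation, and note that parameter support varies by provider.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a POST to an OpenAI-style /chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(
        base_url="https://api.example.com/v1",  # placeholder: use the provider's documented base URL
        api_key="YOUR_API_KEY",                  # placeholder credential
        model="example-org/example-model",       # hypothetical model identifier
        prompt="Summarize this transaction log.",
    )
    print(req.get_method(), req.full_url)
    # Sending it would be urllib.request.urlopen(req), then json.load() on the response.
```

Keeping the request shape provider-neutral makes it cheap to benchmark the same prompt against several endpoints before committing to one.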

RunPod: Flexibility and cost efficiency

RunPod is another emerging provider that focuses on accessible GPU infrastructure for AI workloads. Its inference offering emphasizes flexibility, with options for serverless deployments and dedicated GPU pods. The platform is designed to be cost-efficient, giving developers control over instance types and pricing tiers.

For small to medium teams, RunPod provides an attractive balance of affordability and GPU access. It appeals to developers who want more control than fully managed services allow, but without investing in on-premises infrastructure. Like other new entrants, its global footprint and enterprise integrations may not yet match the hyperscalers, but its niche is clear: delivering scalable GPU inference at lower cost.
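The choice between serverless and dedicated pods mostly comes down to utilization. This minimal sketch assumes serverless is billed per second of active compute, while a dedicated pod is billed per hour regardless of load. The rates are hypothetical, not RunPod's actual pricing.

```python
SECONDS_PER_HOUR = 3600
HOURS_PER_DAY = 24


def monthly_cost_serverless(price_per_second: float, busy_hours_per_day: float, days: int = 30) -> float:
    """Serverless: pay only for seconds of active compute."""
    return price_per_second * busy_hours_per_day * SECONDS_PER_HOUR * days


def monthly_cost_dedicated(price_per_hour: float, days: int = 30) -> float:
    """Dedicated pod: pay for every hour, busy or idle."""
    return price_per_hour * HOURS_PER_DAY * days


def break_even_busy_hours(price_per_second: float, price_per_hour: float) -> float:
    """Busy hours per day above which the dedicated pod becomes the cheaper option."""
    return price_per_hour * HOURS_PER_DAY / (price_per_second * SECONDS_PER_HOUR)


if __name__ == "__main__":
    serverless_rate = 0.0005  # hypothetical $/s of active GPU time ($1.80 per busy hour)
    dedicated_rate = 1.20     # hypothetical $/h for an always-on pod
    print(f"break-even: {break_even_busy_hours(serverless_rate, dedicated_rate):.1f} busy hours/day")
    for busy in (4, 16, 20):
        print(busy, round(monthly_cost_serverless(serverless_rate, busy), 2),
              round(monthly_cost_dedicated(dedicated_rate), 2))
```

Under these assumed rates, a workload busy fewer than about 16 hours a day is cheaper on serverless, and past that the dedicated pod wins. Cold-start delays, which this sketch ignores, can also push latency-sensitive services toward dedicated capacity.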

Groq: A hardware-first approach to inference

Groq has built inference infrastructure using its proprietary Language Processing Unit (LPU), a purpose-built ASIC designed specifically for ultra-low-latency, high-throughput inference workloads. Unlike GPUs, the LPU co-locates compute and memory, eliminates traditional caches, and uses a kernel-less compiler – delivering deterministic model execution and streamlined scaling.

Groq offers two inference deployment paths: GroqCloud™, a public and private cloud platform for serverless LPU-powered inference, and GroqRack™, an on-premises rack-scale solution for data center deployments. Groq reports industry-leading speed and cost efficiency for these systems: higher token throughput and very low latency compared with conventional cloud GPU services, along with notably better energy efficiency.
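Throughput and energy-efficiency claims are easiest to compare when reduced to two numbers from the same benchmark run: tokens per second and joules per token. This sketch uses hypothetical measurements, not Groq or GPU figures. It shows why both numbers matter, since the faster run here is not the more energy-efficient one.

```python
from dataclasses import dataclass


@dataclass
class RunMeasurement:
    tokens_generated: int
    elapsed_s: float
    avg_power_w: float  # average power draw of the serving hardware during the run

    def tokens_per_second(self) -> float:
        return self.tokens_generated / self.elapsed_s

    def joules_per_token(self) -> float:
        # Energy (J) = power (W) x time (s), spread over the tokens produced.
        return self.avg_power_w * self.elapsed_s / self.tokens_generated


if __name__ == "__main__":
    # Hypothetical runs: one faster but power-hungry, one slower but frugal.
    fast = RunMeasurement(tokens_generated=12_000, elapsed_s=10.0, avg_power_w=600.0)
    frugal = RunMeasurement(tokens_generated=9_000, elapsed_s=10.0, avg_power_w=300.0)
    for name, run in (("fast", fast), ("frugal", frugal)):
        print(f"{name}: {run.tokens_per_second():.0f} tok/s, {run.joules_per_token():.3f} J/token")
```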

Final thoughts: choosing the right inference engine

The right inference platform depends on an organization’s priorities. Hyperscale providers like SageMaker and Vertex AI offer deep ecosystem integration but often introduce added complexity and potential lock-in. Emerging players like Fireworks AI, Together AI or RunPod emphasize agility, cost efficiency or community-driven innovation, while Groq introduces a hardware-first approach that achieves impressive performance with unique trade-offs.

GMI Cloud takes a different path by focusing specifically on inference rather than embedding it into a broad ecosystem. Its inference-optimized infrastructure provides low latency, scalability and developer usability, helping teams deploy quickly and scale intelligently without unnecessary overhead. For enterprises that value speed, control and a streamlined path to production, GMI Cloud’s Inference Engine offers a compelling balance against both hyperscale platforms and newer entrants – reflecting the broader evolution of the inference ecosystem as AI adoption accelerates.

Frequently Asked Questions About AI Inference Engines and Platforms

1. What is an inference engine and why is it important?

An inference engine manages how trained AI models are deployed, scaled, and optimized in production. It abstracts away infrastructure complexity, enabling developers to focus on applications rather than hardware management. For real-time tasks like fraud detection or chatbots, inference engines ensure results are delivered in milliseconds, while batch inference emphasizes throughput and cost efficiency.

2. How does GMI Cloud’s Inference Engine differ from hyperscale providers like SageMaker or Vertex AI?

GMI Cloud focuses specifically on inference, offering ultra-low latency scheduling, predictable performance, and developer-friendly deployment without the overhead of managing an entire cloud ecosystem. By contrast, SageMaker and Vertex AI integrate inference into broader cloud stacks (AWS and Google Cloud), which provide ecosystem benefits but can introduce added cost, complexity, and potential vendor lock-in.

3. What are the strengths of newer inference-focused platforms such as Fireworks AI, Together AI, and RunPod?

- Fireworks AI emphasizes ultra-low latency for large language models, making it attractive to early adopters and research teams.

- Together AI focuses on openness and collaboration, enabling developers to share and run models within a community-driven environment.

- RunPod prioritizes flexibility and cost efficiency, offering serverless and dedicated GPU pods for teams that want scalable infrastructure at lower cost.

4. How does Groq’s approach to inference differ from GPU-based platforms?

Groq uses its own Language Processing Unit (LPU), a specialized chip designed for ultra-low-latency and high-throughput inference. Unlike GPUs, it places compute and memory close together, reducing memory-access bottlenecks. Groq says its solutions (GroqCloud™ and GroqRack™) deliver deterministic execution, higher token throughput, and notable energy efficiency, offering a hardware-first alternative to traditional GPU inference.

5. How should organizations choose the right inference platform?

The choice depends on business priorities:

- Ecosystem integration → Amazon SageMaker, Google Vertex AI.

- Low latency and performance focus → GMI Cloud, Groq.

- Agility and cost efficiency → Fireworks AI, RunPod.

- Openness and collaboration → Together AI.

Enterprises should weigh latency needs, cost models, compliance requirements, and developer usability to select the best fit. GMI Cloud’s focus on inference-only infrastructure offers a streamlined alternative for organizations seeking speed and control without unnecessary complexity.
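A simple weighted scoring matrix is one way to make that weighing explicit. The criteria below come from the paragraph above. The weights, the 1-5 scores, and the platform names are hypothetical placeholders that a team would fill in from its own benchmarks and requirements.

```python
from typing import Dict, List

# Hypothetical weights reflecting one team's priorities; they sum to 1.0.
WEIGHTS: Dict[str, float] = {"latency": 0.4, "cost": 0.3, "compliance": 0.2, "usability": 0.1}

# Hypothetical 1-5 scores from a team's own evaluation; not vendor data.
SCORES: Dict[str, Dict[str, int]] = {
    "platform_a": {"latency": 5, "cost": 3, "compliance": 3, "usability": 4},
    "platform_b": {"latency": 3, "cost": 4, "compliance": 5, "usability": 3},
}


def weighted_score(scores: Dict[str, int], weights: Dict[str, float]) -> float:
    """Sum of weight x score over every weighted criterion."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


def rank(all_scores: Dict[str, Dict[str, int]], weights: Dict[str, float]) -> List[str]:
    """Platforms ordered from best to worst weighted score."""
    return sorted(all_scores, key=lambda p: weighted_score(all_scores[p], weights), reverse=True)


if __name__ == "__main__":
    for platform in rank(SCORES, WEIGHTS):
        print(platform, round(weighted_score(SCORES[platform], WEIGHTS), 2))
```

The ranking is only as good as the weights. In this example, shifting weight toward compliance (0.2 latency, 0.2 cost, 0.5 compliance, 0.1 usability) flips the result to platform_b.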