Conclusion (TL;DR): For most enterprises in 2025, accessing NVIDIA H100 GPUs through a specialized cloud provider like GMI Cloud is significantly more cost-effective and flexible than on-premise deployment. Cloud solutions eliminate massive upfront CapEx, cut the wait for GPU capacity from months to minutes, and offer competitive hourly rates. On-premise makes sense only for highly specific, long-term workloads with predictable demand and strict data sovereignty needs.

Key Takeaways: The H100 "Build vs. Buy" Decision

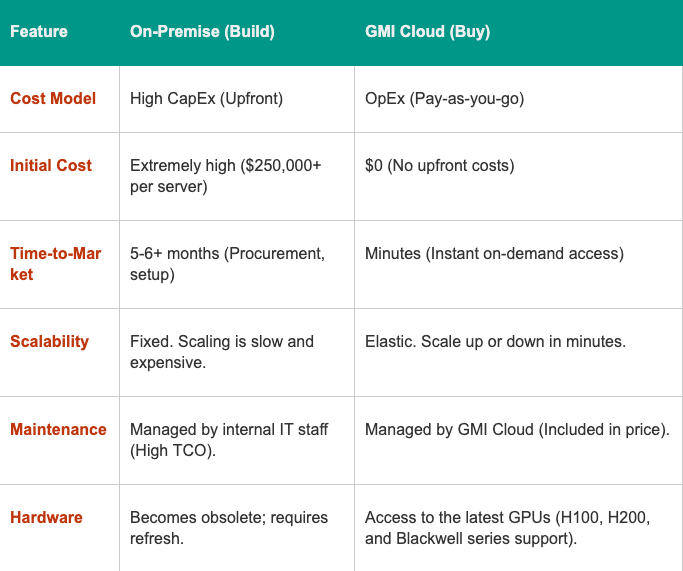

Enterprises face a critical choice for powering AI workloads: building an on-premise H100 cluster (CapEx) or using a cloud service (OpEx).

- Cloud (OpEx): Offers instant access and scalability with zero upfront cost. Specialized providers like GMI Cloud offer H100s at highly competitive rates, starting as low as $2.10/hour.

- On-Premise (CapEx): Requires massive initial investment (often $250,000+ for an 8x H100 server) and long lead times (5-6 months industry average).

- Hidden Costs: On-premise Total Cost of Ownership (TCO) is high, including power, cooling, networking, and IT staff. Cloud models bundle these costs.

- The GMI Solution: GMI Cloud provides enterprise-grade performance, including bare-metal H100 access and InfiniBand networking, with flexible, pay-as-you-go pricing.

Understanding the Core Pricing Models

The primary difference in NVIDIA H100 GPU pricing lies in capital expenditure (CapEx) versus operational expenditure (OpEx).

On-Premise: The CapEx Model

This is the traditional "build" approach. Enterprises purchase and own the hardware.

- Initial Cost: A single server equipped with 8x NVIDIA H100 GPUs can cost over $250,000.

- Lead Times: Hardware procurement is a major bottleneck. The industry average lead time for GPU clusters is 5-6 months.

- Hidden Costs (TCO): The sticker price is just the beginning. Enterprises must also pay for:

  - Data center space, power, and industrial-grade cooling.

  - High-speed networking (like InfiniBand).

  - Dedicated IT staff for setup, maintenance, and security.

  - Hardware refresh cycles every 3-5 years.

Cloud: The OpEx Model

This is the modern "buy" approach. Enterprises pay for access to H100 GPUs on an hourly or reserved basis.

- No Upfront Costs: This model eliminates CapEx, freeing up capital.

- Pay-As-You-Go: You only pay for the resources you use, allowing for precise budget control.

- Instant Access: Resources are available in minutes, not months.
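A rough break-even sketch helps put the two models side by side. The Python snippet below uses only figures quoted in this article ($250,000 for an 8x H100 server and $2.10 per GPU-hour on-demand) and deliberately ignores power, cooling, networking, and staff, so it understates the real on-premise cost. The function and variable names are illustrative and not part of any provider's tooling.

```python
# Figures quoted in this article; everything else is illustrative.
SERVER_CAPEX_USD = 250_000           # 8x H100 server, hardware only
GPUS_PER_SERVER = 8
CLOUD_RATE_USD_PER_GPU_HOUR = 2.10   # on-demand starting rate


def break_even_hours(capex_usd: float, gpus: int, rate_per_gpu_hour: float) -> float:
    """Hours of renting `gpus` GPUs whose total cost equals the purchase price."""
    return capex_usd / (gpus * rate_per_gpu_hour)


hours = break_even_hours(SERVER_CAPEX_USD, GPUS_PER_SERVER, CLOUD_RATE_USD_PER_GPU_HOUR)
months_at_full_load = hours / (24 * 30)  # approximating a month as 30 days

print(f"{hours:,.0f} hours (~{months_at_full_load:.1f} months at 24/7 use)")
# prints "14,881 hours (~20.7 months at 24/7 use)"
```

Under these assumptions, the purchase price alone equals more than 20 months of renting all eight GPUs around the clock, before any operating costs are counted.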

Cloud H100 Pricing: Hyperscalers vs. Specialized Providers

Not all cloud providers are priced equally. For high-end H100 GPUs, specialized providers often deliver significant advantages.

Hyperscalers (AWS, GCP, Azure)

Hyperscalers integrate H100s into their vast ecosystem. However, this often comes at a premium.

- Cost: On-demand H100 pricing can range from $4.00 to $8.00 per hour.

- Availability: Due to high demand, access to H100 instances can be limited, with waitlists common.

Specialized Providers: The GMI Cloud Advantage

Specialized, AI-focused providers like GMI Cloud are built specifically for high-performance GPU workloads. As an NVIDIA Reference Cloud Platform Provider, GMI Cloud offers a more direct and cost-effective solution.

- Aggressive Pricing: GMI Cloud offers NVIDIA H100 GPUs starting at just $2.10 per hour on-demand.

- Cost-Effective Private Cloud: For steady workloads, enterprises can get H100 private cloud access for as low as $2.50 per GPU-hour.

- Proven Savings: Case studies show that clients who switched to GMI Cloud found it 50% more cost-effective than alternative cloud providers.

- Better Availability: GMI Cloud focuses on efficient supply chains, delivering bare-metal infrastructure with a lead time of about 2.5 months versus the 5-6 month industry average, and providing instant access for on-demand services.
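The gap between these rates can be checked with simple arithmetic. The sketch below compares the $2.10 on-demand rate against the $4.00 to $8.00 hyperscaler range quoted above; the helper name `percent_cheaper` is hypothetical and the rates are list prices from this article, not live quotes.

```python
def percent_cheaper(reference_rate: float, rate: float) -> float:
    """How much cheaper `rate` is than `reference_rate`, as a percentage."""
    return (reference_rate - rate) / reference_rate * 100


SPECIALIZED_RATE = 2.10                             # USD per GPU-hour, on-demand
HYPERSCALER_LOW, HYPERSCALER_HIGH = 4.00, 8.00      # USD per GPU-hour, on-demand

savings_vs_low = percent_cheaper(HYPERSCALER_LOW, SPECIALIZED_RATE)
savings_vs_high = percent_cheaper(HYPERSCALER_HIGH, SPECIALIZED_RATE)

print(f"vs ${HYPERSCALER_LOW:.2f}/hr: {savings_vs_low:.2f}% cheaper")
print(f"vs ${HYPERSCALER_HIGH:.2f}/hr: {savings_vs_high:.2f}% cheaper")
```

Against the two ends of that range, the difference works out to 47.5% and 73.75% respectively, assuming comparable instance configurations.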

Comparison: Cloud vs. On-Premise for H100 Workloads

Recommendation: When to Choose GMI Cloud

Choose GMI Cloud if your enterprise needs:

- Cost Efficiency: You want to reduce AI training expenses and avoid high hyperscaler costs.

- Speed and Agility: You need to get projects to market faster and cannot wait 5-6 months for on-prem hardware.

- Scalability: Your workload is variable. GMI's Inference Engine supports fully automatic scaling.

- Enterprise-Grade Security: GMI provides SOC 2 certified infrastructure with options for dedicated private clouds and secure networking.

Common Questions: NVIDIA H100 GPU Pricing (FAQ)

Question: What is the cheapest way to access NVIDIA H100 GPUs in 2025?

Answer: The most cost-effective method for most workloads is using a specialized GPU cloud provider. Platforms like GMI Cloud offer on-demand H100 access starting as low as $2.10 per GPU-hour, which is roughly 50-70% cheaper than typical hyperscaler on-demand rates of $4.00 to $8.00 per hour.

Question: Is on-premise H100 deployment cheaper in the long run?

Answer: Not necessarily. The hardware itself carries no further hourly charge once it is paid off, but the Total Cost of Ownership (TCO) remains high. It includes substantial ongoing costs for power, cooling, maintenance, and IT staff, which are often underestimated. Fixed capacity also means you keep paying for hardware while it sits idle.
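As a rough illustration of this answer, the sketch below estimates an effective on-premise cost per used GPU-hour. Only the $250,000 server price and the 3-5 year refresh window come from this article. The 4-year amortization period and the `ASSUMED_ANNUAL_OPEX_USD` figure are hypothetical placeholders, so substitute your own numbers before drawing conclusions.

```python
HOURS_PER_YEAR = 24 * 365

SERVER_CAPEX_USD = 250_000        # from this article: 8x H100 server
GPUS_PER_SERVER = 8
AMORTIZATION_YEARS = 4            # ASSUMPTION: within the 3-5 year refresh cycle
ASSUMED_ANNUAL_OPEX_USD = 60_000  # ASSUMPTION: hypothetical power, cooling, staff


def on_prem_cost_per_gpu_hour(capex_usd: float, annual_opex_usd: float,
                              years: float, gpus: int, utilization: float) -> float:
    """Total cost of ownership divided by the GPU-hours actually used."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    total_cost = capex_usd + annual_opex_usd * years
    used_gpu_hours = gpus * HOURS_PER_YEAR * years * utilization
    return total_cost / used_gpu_hours


for utilization in (1.0, 0.5, 0.25):
    cost = on_prem_cost_per_gpu_hour(SERVER_CAPEX_USD, ASSUMED_ANNUAL_OPEX_USD,
                                     AMORTIZATION_YEARS, GPUS_PER_SERVER, utilization)
    print(f"utilization {utilization:.0%}: ${cost:.2f} per used GPU-hour")
```

The point of the model is the shape of the curve rather than the exact figures: halving utilization doubles the effective cost per used GPU-hour, which is why idle on-premise hardware erodes the apparent long-run advantage.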

Question: How quickly can my team get access to H100 GPUs?

Answer: With an on-premise strategy, expect to wait 5-6 months for hardware delivery and setup. With a specialized provider like GMI Cloud, you can get instant, on-demand access to H100 compute within minutes of signing up.

Question: What is the pricing for an NVIDIA H200 GPU?

Answer: GMI Cloud offers on-demand NVIDIA H200 GPUs at a list price of $3.50 per GPU-hour for bare-metal and $3.35 per GPU-hour for containers.