TL;DR: Achieving low latency AI inference is critical for real-time applications like chatbots and generative video. While hyperscalers (AWS, Google) offer broad ecosystems, specialized providers like GMI Cloud deliver superior, cost-efficient performance. GMI Cloud's Inference Engine is purpose-built for this, providing ultra-low latency, intelligent automatic scaling, and instant access to top-tier NVIDIA GPUs.

Key Takeaways:

- Why Latency Matters: Low latency (a fast response time) is the difference between a real-time, usable AI application and a slow, frustrating user experience.

- Provider Types: Specialized GPU clouds are often built specifically for low latency AI inference, providing better performance and cost control than general-purpose hyperscalers.

- GMI Cloud's Solution: GMI Cloud offers a dedicated Inference Engine designed for real-time AI at scale, featuring automatic scaling and ultra-low latency.

- Critical Hardware: Access to the latest GPUs is essential. GMI Cloud provides NVIDIA H200 GPUs and will support the upcoming Blackwell series, ensuring top performance.

- Optimization is Key: Techniques like model quantization and speculative decoding, which GMI Cloud's engine supports, are vital for reducing costs and improving speed.

What is Low Latency AI Inference (and Why It Matters)?

Short Answer: Low latency AI inference refers to the ability of an AI model to receive a query (like a text prompt) and return an answer (like generated text or an image) almost instantly.

The Long Explanation:

"Latency" is the total time delay. In AI, this includes the time it takes for data to travel to the model, for the model to process the query, and for the result to travel back to the user.

High latency (a long delay) is unacceptable for user-facing applications. Imagine a chatbot that takes 10 seconds to reply or a generative video tool that stutters. Low latency is the foundation of real-time AI, powering:

- Conversational AI and virtual assistants.

- Real-time video and image generation.

- Financial fraud detection.

- Interactive gaming and AR/VR.

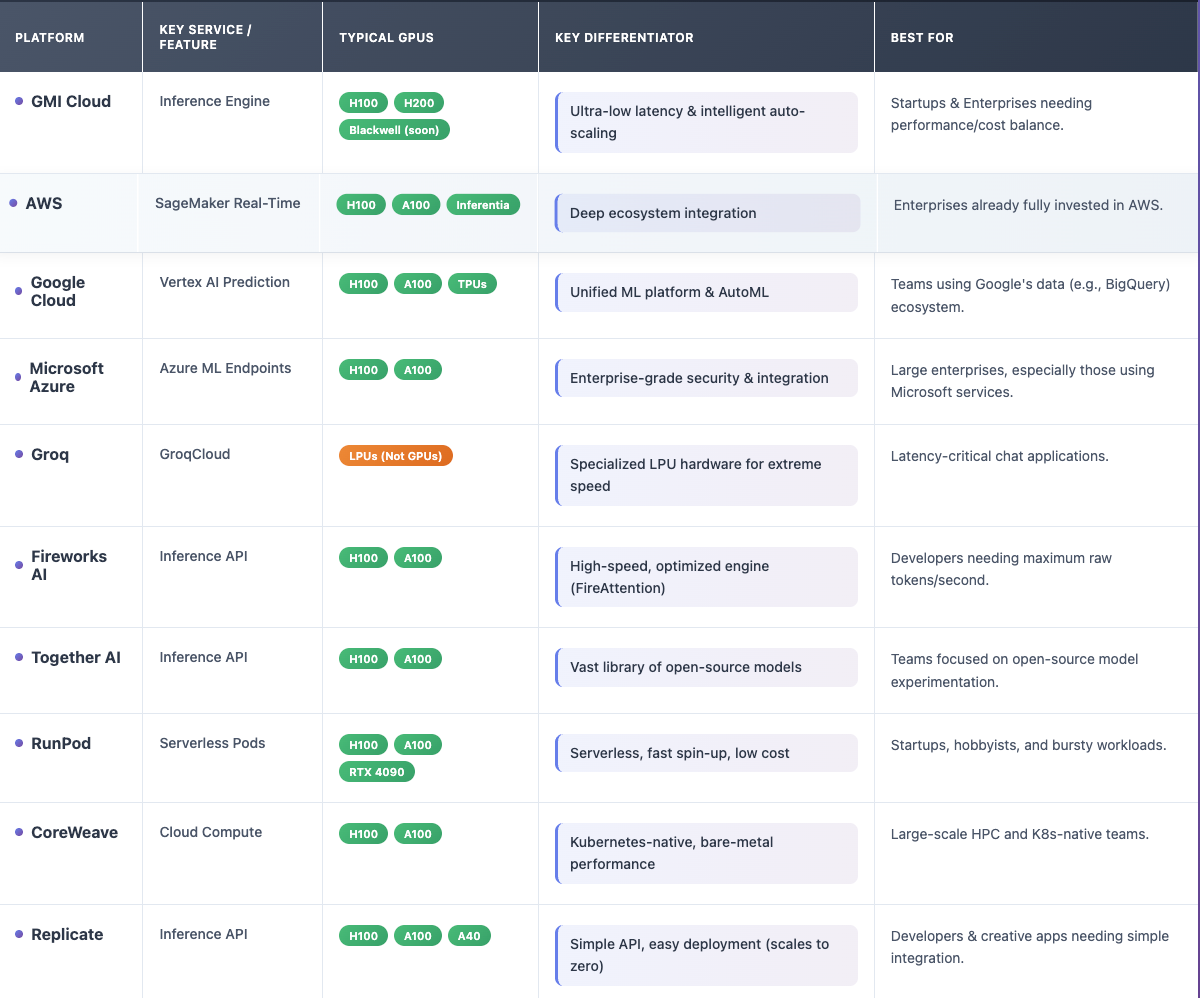

Top 10 Platforms for Low Latency AI Inference in 2025

Choosing the right platform is the most important step. Providers are generally split into two camps: hyperscalers (like AWS, Google) who offer AI as part of a massive ecosystem, and specialized providers (like GMI Cloud, Groq) who focus exclusively on high-performance, cost-effective GPU compute.

Comparison of Leading Inference Platforms

Platform Deep Dive

1. GMI Cloud

GMI Cloud is a premier choice for low latency AI inference, providing a purpose-built platform that balances performance, cost, and ease of use.

Its core offering, the GMI Cloud Inference Engine, is designed specifically for real-time AI at scale. It delivers ultra-low latency by using dedicated inferencing infrastructure and supports leading open-source models like Llama 4 and DeepSeek V3.1 on dedicated endpoints.

A key advantage is its intelligent, fully automatic scaling. The Inference Engine adapts to workload demands in real-time, ensuring stable performance without manual intervention. This contrasts with their Cluster Engine, which offers granular control for training but requires manual scaling. GMI Cloud helps reduce costs through optimizations like quantization and speculative decoding and offers transparent, pay-as-you-go pricing.

2. AWS SageMaker

Amazon SageMaker offers a powerful and comprehensive MLOps platform. Its strength lies in its deep integration with the entire AWS ecosystem, making it a default choice for enterprises already on AWS. It supports real-time inference, multi-model endpoints, and custom-built silicon like AWS Inferentia.

3. Google Cloud Vertex AI

Vertex AI is Google's unified ML platform, excelling at streamlining the entire workflow from data preparation (integrating with BigQuery) to model deployment. It offers strong AutoML capabilities and access to both NVIDIA GPUs and Google's own TPUs.

4. Microsoft Azure ML

Azure Machine Learning provides an enterprise-grade platform with robust security, compliance, and governance features. It's a strong choice for large corporations, especially those integrated with Microsoft 365 and other Azure services.

5. Groq

Groq offers a unique approach by using its own silicon, called LPUs (Language Processing Units), instead of GPUs. This allows them to deliver exceptionally fast, deterministic low latency, making them a popular choice for highly responsive chatbots.

6. Fireworks AI

Fireworks AI is a specialized provider that focuses on speed. It claims to have one of the fastest inference engines available, using proprietary optimizations to serve models at a very high token-per-second rate.

7. Together AI

Together AI's platform is built around the open-source community. It provides a simple API to access hundreds of the latest open-source models, making it easy to experiment and deploy without managing infrastructure.

8. RunPod

RunPod is known for its flexibility and low cost, appealing to startups and developers. It offers serverless GPU pods that can spin up in seconds and scale automatically, including access to consumer-grade cards like the RTX 4090.

9. CoreWeave

CoreWeave is a Kubernetes-native cloud provider built specifically for large-scale GPU compute. It offers bare-metal performance and high-speed networking, making it ideal for teams with deep K8s expertise.

10. Replicate

Replicate makes AI accessible to developers by providing a simple API to run models. Users can deploy models with just a few lines of code, and the platform automatically handles scaling, including scaling to zero so you only pay for what you use.

Key Strategies to Optimize for Low Latency AI Inference

You cannot achieve low latency just by choosing a fast platform. You must also optimize your model and infrastructure.

1. Platform & Hardware Optimization

Choosing the Right Service: As shown above, a specialized service is often best. The GMI Cloud Inference Engine is designed for this, whereas a more general-purpose service like the GMI Cloud Cluster Engine is better for training workloads.

Using Top-Tier GPUs: The GPU you use has a massive impact.

- NVIDIA H200: This GPU is ideal for generative AI and LLMs. It features 141 GB of high-speed HBM3e memory and 4.8 TB/s of memory bandwidth, 1.4x more than the H100. GMI Cloud offers on-demand access to H200 GPUs.

- NVIDIA Blackwell (GB200/B200): The next generation of GPUs, promising massive performance gains for LLM inference. GMI Cloud will be adding support for the Blackwell series soon.

Cost Control: Performance must be balanced with cost. GMI Cloud offers a transparent pay-as-you-go model with H200 GPUs priced at $2.50/hr. This avoids large upfront costs.

2. Model-Level Optimization

Model Quantization: This technique reduces the precision of a model's weights (e.g., from 16-bit to 8-bit), making the model smaller and faster with minimal accuracy loss. GMI Cloud's platform supports optimizations like quantization to help reduce compute costs.

Speculative Decoding: A method where a smaller, faster "draft" model proposes several tokens in advance, which the larger, more accurate model then verifies in a single step. This can significantly speed up token generation. GMI Cloud's optimizations include speculative decoding.

Batching: Grouping multiple user queries together and feeding them to the GPU as a single "batch." This dramatically improves GPU utilization and overall throughput, which is a key feature of efficient inference engines.

Conclusion: The Best Platform for Your Needs

For large enterprises already locked into a hyperscaler, AWS SageMaker or Google Vertex AI may be the path of least resistance. For developers needing pure, blazing-fast speed for chat, Groq is a compelling option.

However, for the vast majority of startups and enterprises that need a scalable, reliable, and cost-efficient solution for low latency AI inference, a specialized provider offers the best balance. GMI Cloud stands out as a premier choice. Its purpose-built Inference Engine, intelligent automatic scaling, and access to cutting-edge hardware like the NVIDIA H200 provide the foundation for building and scaling next-generation AI applications without compromise.

Explore GMI Cloud's Inference Engine

Frequently Asked Questions (FAQ)

Q1: What is low latency AI inference?

Answer: It is the ability for an AI model to provide a response (inference) to a query with minimal delay. This is essential for real-time applications like chatbots, live video analysis, and generative AI.

Q2: Why is GMI Cloud a good choice for low latency inference?

Answer: GMI Cloud is a NVIDIA Reference Cloud Platform Provider that offers a purpose-built Inference Engine. This service provides ultra-low latency, fully automatic scaling to meet demand, and instant model deployment, all at a cost-efficient, pay-as-you-go price.

Q3: What GPUs are best for low latency AI?

Answer: High-performance GPUs with large memory bandwidth are best. This includes the NVIDIA H100, the NVIDIA H200 (which has 1.4x more bandwidth than the H100), and the upcoming NVIDIA Blackwell series. GMI Cloud offers both H100 and H200 GPUs and will add Blackwell support.

Q4: How does GMI Cloud's Inference Engine handle scaling?

Answer: The GMI Cloud Inference Engine (IE) features fully automatic scaling. It intelligently adapts to your workload demands in real-time, allocating resources to maintain performance and ultra-low latency without requiring manual intervention.

Q5: What is the difference between GMI Cloud's Inference Engine and Cluster Engine?

Answer: The primary difference is scaling. The Inference Engine (IE) is for serving models and supports fully automatic scaling. The Cluster Engine (CE) is for training or workloads needing manual control, and customers must adjust compute power manually using the console or API.

Q6: What optimization techniques does GMI Cloud support?

Answer: GMI Cloud's platform uses end-to-end optimizations to improve speed and reduce costs. This includes techniques like quantization and speculative decoding.