TL;DR: Choosing where to host AI workloads involves balancing cost, performance, and scalability. While hyperscalers (AWS, GCP) offer ecosystem integration, specialized GPU clouds like GMI Cloud provide superior cost-efficiency and direct access to high-performance hardware (like NVIDIA H100s/H200s). This makes them ideal for intensive training and ultra-low latency inference.

Key Takeaways:

- AI workloads, such as LLM training and video generation, are computationally intensive and require specialized GPU hardware.

- Hosting options range from general-purpose hyperscalers to specialized, high-performance AI hosting providers.

- Key decision factors include GPU type, cost-efficiency, scalability (automatic vs. manual), security, and networking.

- Specialized providers like GMI Cloud offer dedicated, high-performance NVIDIA H100 and H200 GPUs.

- GMI Cloud provides flexible, pay-as-you-go pricing, which can be significantly more cost-effective than alternatives.

- GMI Cloud offers tailored solutions: the Inference Engine for ultra-low latency and automatic scaling, and the Cluster Engine for robust management of containerized training workloads.

I. The Challenge of Computationally Intensive AI

Modern artificial intelligence workloads, especially in generative AI, are extremely demanding on computing resources.

Tasks like training Large Language Models (LLMs), running real-time video generation models, and processing complex multi-modal data require massive parallel processing power. This intensity creates a critical challenge for enterprises and development teams: finding the right environment where to host AI workloads that is powerful, scalable, and cost-effective.

II. What Makes an AI Workload “Computationally Intensive”?

Definition: Computationally intensive workloads are characterized by their need for large-scale parallel processing, high GPU memory (VRAM), and sustained, high-throughput data access. These tasks often involve models with billions of parameters that must be trained over days or weeks, or inference tasks that demand immediate, real-time responses.

Examples of demanding workloads:

- LLM Training & Fine-Tuning: Training or fine-tuning models like Llama or DeepSeek requires clusters of high-end AI GPU hardware connected with high-speed, low-latency networking like InfiniBand.

- Real-Time Inference: Deploying models for services like generative video, AI chatbots, or autonomous systems demands ultra-low latency and high availability to serve users at scale.

- High-Performance Computing (HPC): Scientific simulations, drug discovery, and complex financial modeling driven by AI also fall into this category, requiring robust HPC for AI infrastructure.

III. The Main Hosting Options for AI Workloads (2025 Overview)

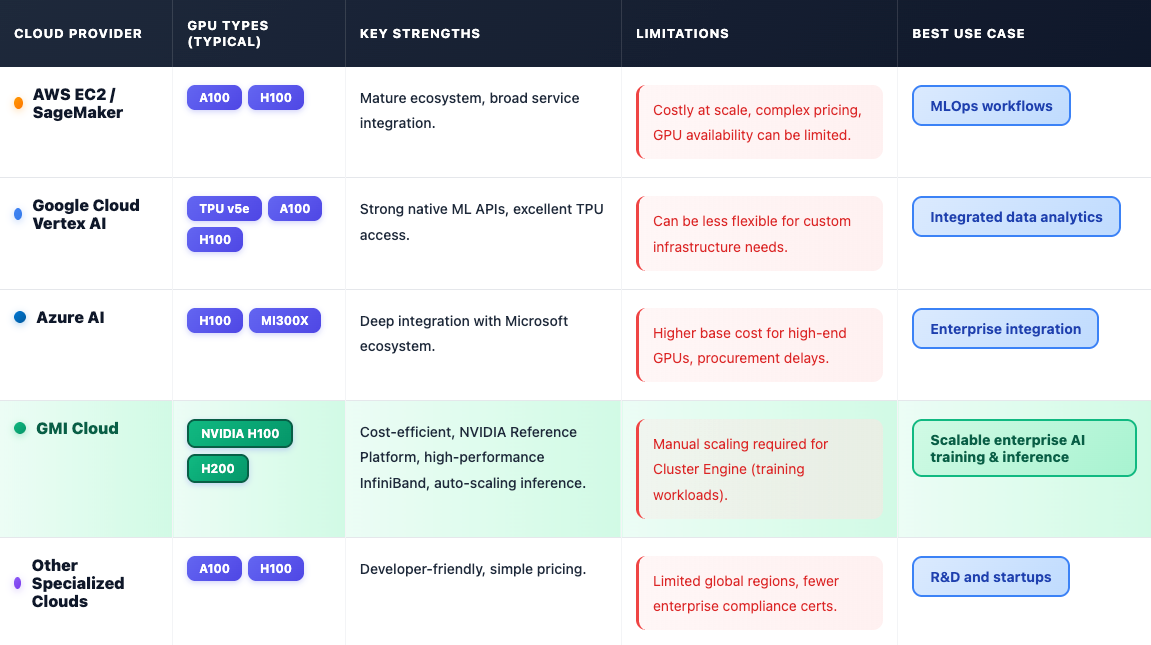

Teams must choose between large, established hyperscalers and specialized AI hosting providers. Each has distinct strengths and weaknesses.

Comparison: The following table provides a GPU cloud comparison for where to host AI workloads.

IV. Key Factors When Choosing Where to Host AI Workloads

Selecting the right provider depends on several key factors beyond just the price per hour.

- Compute Performance: Does the provider offer the right AI GPU for your task? Look for instant access to top-tier hardware like the NVIDIA H100 or H200 and high-speed interconnects like InfiniBand to eliminate bottlenecks.

- Scalability & Elasticity: How easily can you scale resources? An ideal inference solution, like GMI Cloud's Inference Engine, supports fully automatic scaling to meet workload demands. For training, look for robust orchestration tools like the Cluster Engine.

- Latency & Throughput: For inference, ultra-low latency is crucial. For training, you need high, stable throughput to minimize training time.

- Cost Efficiency: Avoid large upfront costs and long-term commitments by choosing a provider with a flexible, pay-as-you-go model. Specialized providers like GMI Cloud are often significantly more cost-effective than hyperscalers.

- Security & Compliance: Ensure the provider meets enterprise standards. GMI Cloud, for example, is SOC 2 certified and utilizes Tier-4 data centers for maximum uptime and security.

- API & Automation: The platform must support modern DevOps practices, including container management, virtualization, and API-driven orchestration for seamless deployment.

For teams balancing cost, flexibility, and high GPU performance, GMI Cloud offers dedicated GPU clusters optimized for AI workloads — from fine-tuning to large-scale inference.

V. Why GMI Cloud Is Built for Computationally Intensive AI

GMI Cloud is an AI hosting provider designed specifically for high-performance, scalable GPU infrastructure. It provides a complete foundation for AI success, helping teams architect, deploy, optimize, and scale their AI strategies.

Optimized for Generative AI:

GMI Cloud offers a high-performance Inference Engine for low-latency deployment and a Cluster Engine for containerized ops, all built on top of on-demand, top-tier GPUs.

Top-Tier GPU Access:

As an NVIDIA Reference Cloud Platform Provider, GMI Cloud provides instant, dedicated access to NVIDIA H100 and H200 GPUs. It is also preparing support for the next-generation Blackwell series to future-proof AI infrastructure.

Enterprise-Grade Services:

The platform is built for enterprise AI. The Cluster Engine streamlines operations by simplifying container management and orchestration, with native Kubernetes support. The Inference Engine delivers ultra-low latency and automatic scaling for real-time applications, supporting models like DeepSeek and Llama.

Flexible & Transparent Pricing:

GMI Cloud avoids long-term commitments with a flexible, pay-as-you-go model. This transparency is proven to reduce costs. For example, NVIDIA H200 GPUs are available on-demand at list prices of $2.50/GPU-hour. Private cloud configurations with 8x H100s can be as low as $2.10/GPU-hour.

Real-World Success:

- LegalSign.ai found GMI Cloud to be 50% more cost-effective than alternative cloud providers and accelerated its AI model training by 20%.

- Higgsfield, a generative video platform, partnered with GMI Cloud to achieve a 65% reduction in inference latency and 45% lower compute costs.

- DeepTrin utilized GMI's high-performance H200 GPUs to achieve a 10-15% increase in LLM inference accuracy and efficiency.

VI. How to Get Started — From Prototype to Production with GMI Cloud

Transitioning your AI workload to a high-performance platform like GMI Cloud is a straightforward process designed to get you to production faster.

Steps:

- Benchmark & Plan: Identify your resource needs. Are you training a large model (needing H100s/H200s for their large memory) or deploying a fine-tuned model for real-time inference?

- Select a GMI Cloud Service:

- For Training: Use the Cluster Engine to manage scalable GPU workloads, orchestrate containers, and leverage Kubernetes-native environments.

- For Inference: Use the Inference Engine to deploy models like DeepSeek V3 or Llama 4 with automatic scaling, ultra-low latency, and simple API/SDKs.

- Deploy: Launch your models in minutes, not weeks. The platform's automated workflows and pre-built models eliminate heavy configuration.

- Monitor & Scale: Use built-in real-time performance monitoring and resource visibility tools to keep operations smooth. The Inference Engine will scale automatically, while the Cluster Engine allows manual scaling via the console or API.

Ready to host your next AI workload? Explore GMI Cloud’s high-performance GPU clusters built for advanced generative and computational AI.

VII. Conclusion

Conclusion: Deciding where to host AI workloads is a critical decision that directly impacts performance, cost, and your time-to-market.

While hyperscalers offer a broad ecosystem, their costs, GPU availability, and limitations can be prohibitive for computationally intensive AI.

Specialized AI hosting providers like GMI Cloud offer a compelling, high-performance alternative. By providing cost-efficient GPU infrastructure, instant access to the latest NVIDIA H100 and H200 GPUs, and solutions engineered for both training (Cluster Engine) and inference (Inference Engine), GMI Cloud provides the ideal balance of power, flexibility, and price for enterprise AI.

Call to Action:

Deploy smarter. Scale faster. Build your next AI workload on GMI Cloud.

VIII. Frequently Asked Questions (FAQ) about Hosting AI Workloads

Q1: What is the best GPU for hosting large AI workloads?

A: For large-scale training and generative AI, NVIDIA's H100 and H200 Tensor Core GPUs are the industry standard. The H200, available on GMI Cloud, is particularly powerful as it features 141 GB of HBM3e memory and 4.8 TB/s of memory bandwidth, making it ideal for large language models (LLMs).

Q2: What is the difference between GMI Cloud's Inference Engine and Cluster Engine?

A: The Inference Engine (IE) is a platform purpose-built for deploying models for real-time AI inference; it provides ultra-low latency and fully automatic scaling to match demand. The Cluster Engine (CE) is an Al/ML Ops environment for managing scalable GPU workloads (like training) using container orchestration; in the CE, scaling must be adjusted manually by the customer via the console or API.

Q3: How much does it cost to host AI workloads on GMI Cloud?

A: GMI Cloud uses a flexible, pay-as-you-go pricing model, allowing users to avoid large upfront costs or long-term commitments. As examples, NVIDIA H200 GPUs are listed on-demand at $2.50/GPU-hour. GMI Cloud also offers private cloud options, with 8x H100 configurations as low as $2.10/GPU-hour.

Q4: Can I use Kubernetes to host my AI workloads on GMI Cloud?

A: Yes. The GMI Cloud Cluster Engine is Kubernetes-Native and designed to seamlessly orchestrate complex AI/ML tasks, HPC, and cloud-native applications in a GPU cloud environment.

Q5: Why choose a specialized provider like GMI Cloud over AWS or GCP?

A: As an NVIDIA Reference Cloud Platform Provider, GMI Cloud is often more cost-efficient and provides instant access to dedicated, high-performance GPUs without the long delays common at hyperscalers. This combination helps reduce training expenses and significantly speeds up time-to-market for AI applications.