When people think about scaling AI workloads, they usually focus on GPUs. After all, GPUs are the engines behind deep learning – accelerating the massive matrix multiplications, attention mechanisms and tensor operations that drive today’s models. But while GPUs deliver the raw horsepower, there’s another equally critical factor that determines whether that power can be fully harnessed: network bandwidth.

As models grow in size and complexity, and as training and inference are distributed across clusters of GPUs, network bandwidth becomes a defining bottleneck. Without high-throughput, low-latency networking, even the most advanced GPUs spend much of their time idle, waiting for data to arrive. For CTOs and ML teams, understanding the interplay between GPU performance and network infrastructure is essential to building AI systems that scale effectively.

The data movement challenge in AI workloads

AI workloads are not just compute-heavy – they are also data-hungry. Training a large language model (LLM) or vision transformer involves feeding terabytes of training data into the system, distributing gradients across multiple nodes, and synchronizing weights after every iteration. In inference scenarios, low latency is key, especially when applications like fraud detection, real-time translation or autonomous navigation demand split-second responses.

The catch: none of this can happen efficiently without robust networking. Every GPU in a cluster must continuously communicate with others, exchanging parameters, gradients, and intermediate results. If the network is too slow or congested, GPUs wait idle, throughput collapses and costs skyrocket.

Why bandwidth matters as much as GPU FLOPS

GPUs are often rated in FLOPS (floating-point operations per second), but raw compute is only part of the picture. The speed at which GPUs can be fed data depends heavily on interconnect bandwidth. This is why modern high-performance computing (HPC) and enterprise AI systems rely on specialized high-speed networking technologies.

High-bandwidth networking ensures that distributed training runs efficiently across clusters. For example, in data-parallel training, each GPU processes a subset of the dataset, and gradients must be aggregated and synchronized across all nodes. If bandwidth is insufficient, synchronization delays dominate, nullifying the benefit of adding more GPUs. Similarly, in model-parallel training, different GPUs handle different parts of a model, making real-time communication between them non-negotiable.
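To make the data-parallel case concrete, here is a rough back-of-the-envelope sketch. It assumes gradients are synchronized with a ring all-reduce, in which each GPU sends and receives roughly 2 × (N − 1) / N times the gradient payload. The function name, the model size, and the link speeds are illustrative assumptions, and the estimate ignores per-message latency and any overlap of communication with compute.

```python
def ring_allreduce_seconds(param_count, bytes_per_param, num_gpus, link_gbps):
    """Bandwidth-only estimate of one ring all-reduce over the gradients.

    Assumes each GPU sends and receives 2 * (N - 1) / N times the gradient
    payload, and ignores per-message latency and compute/communication overlap.
    """
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    payload_bytes = param_count * bytes_per_param
    bytes_per_second = link_gbps * 1e9 / 8  # gigabits/s -> bytes/s
    traffic_per_gpu = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return traffic_per_gpu / bytes_per_second


if __name__ == "__main__":
    # Hypothetical setup: 1B parameters, 2-byte gradients, 8 GPUs.
    for gbps in (100, 400):
        t = ring_allreduce_seconds(1_000_000_000, 2, 8, gbps)
        print(f"{gbps} Gb/s per GPU: {t:.2f} s per gradient sync")
```

Under these assumptions, moving from 100 Gb/s to 400 Gb/s per GPU cuts the estimated synchronization time from about 0.28 s to about 0.07 s per step. Real systems overlap part of this traffic with compute, so treat the figures as an upper-bound intuition rather than a prediction.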

Simply put: scaling GPUs without scaling network bandwidth is like building a multi-lane racetrack but only opening one lane. The theoretical performance exists, but it cannot be realized.

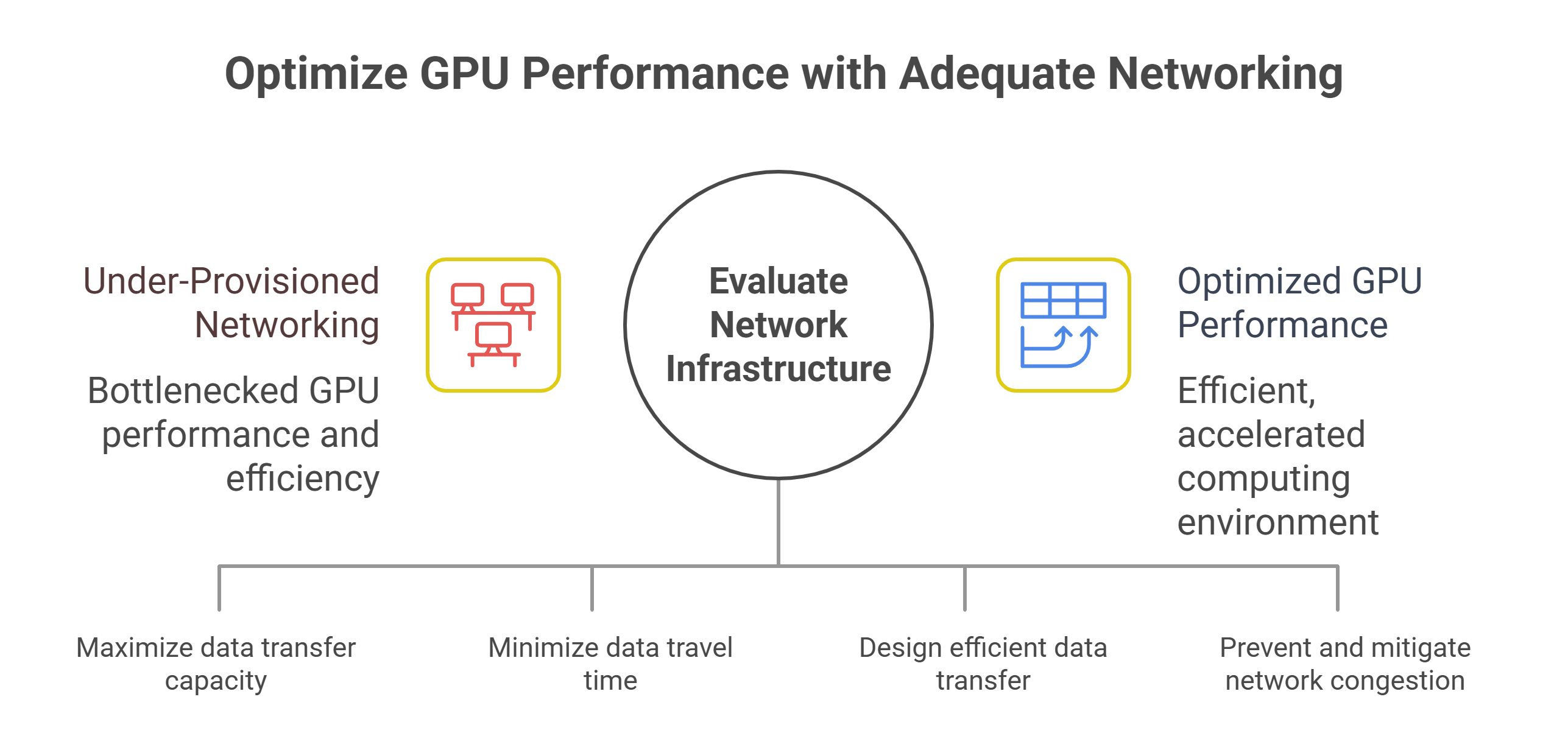

The hidden costs of under-provisioned networking

When bandwidth is overlooked, the costs show up quickly. Organizations may provision expensive GPU clusters only to find that performance plateaus far below expectations because of communication overheads. The result is longer training cycles, higher energy consumption and slower time to market.
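The plateau can be illustrated with a simple strong-scaling model. This is a sketch under stated assumptions, not a measurement: compute is assumed to divide evenly across GPUs, every step pays a fixed communication cost, and communication does not overlap with compute. The function name and the timings are hypothetical.

```python
def strong_scaling_speedup(single_gpu_step_s, comm_s, num_gpus):
    """Speedup over one GPU when each step pays a fixed communication cost.

    Assumes compute divides evenly across GPUs and communication does not
    overlap with compute (both are simplifications).
    """
    if num_gpus == 1:
        return 1.0  # no communication needed on a single GPU
    step_s = single_gpu_step_s / num_gpus + comm_s
    return single_gpu_step_s / step_s


if __name__ == "__main__":
    # Hypothetical workload: 8 s per step on one GPU, 0.25 s of sync per step.
    for n in (8, 64, 1024):
        print(f"{n:>4} GPUs -> {strong_scaling_speedup(8.0, 0.25, n):.1f}x")
```

In this toy model the speedup can never exceed 8.0 / 0.25 = 32x, no matter how many GPUs are added, because the communication term stops shrinking. Reducing that term is the only way to raise the ceiling.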

For cloud deployments, this inefficiency is magnified by pricing. GPU instances already carry a premium compared to CPU resources. If GPUs spend time idle due to network congestion, organizations are effectively paying for compute they cannot use. That directly undermines ROI and makes scaling financially unsustainable.

The financial impact also depends on how resources are consumed. With reserved cloud models, enterprises can secure GPUs at a lower cost per GPU hour, but this comes with a trade-off: if networking bottlenecks or uneven workloads leave those GPUs underutilized, the organization is still paying for idle time. On-demand models work differently. They carry a higher cost per GPU hour, but they provide flexibility by allowing teams to pay only for the compute resources they actually use. Both approaches have advantages depending on workload patterns, yet in either case, under-provisioned networking reduces efficiency by stranding valuable compute power and diminishing overall return on investment.
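One way to see the effect on spend is to divide the hourly price by the fraction of time the GPU does useful work. The sketch below does exactly that. The prices and utilization figures are made-up placeholders, not quotes from any provider, and the helper name is hypothetical.

```python
def cost_per_useful_gpu_hour(price_per_gpu_hour, utilization):
    """Effective price of one hour of actual GPU work.

    utilization is the fraction of billed time the GPU spends computing
    rather than waiting on the network (0 < utilization <= 1).
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return price_per_gpu_hour / utilization


if __name__ == "__main__":
    # Hypothetical prices: reserved at 2.00 and on-demand at 3.00 per GPU-hour.
    for label, price in (("reserved", 2.00), ("on-demand", 3.00)):
        for util in (0.9, 0.5):
            cost = cost_per_useful_gpu_hour(price, util)
            print(f"{label:>9} at {util:.0%} utilization: {cost:.2f} per useful hour")
```

With these placeholder numbers, a GPU that is busy only half the time costs twice its list price per hour of useful work, whichever pricing model applies.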

This is why enterprises evaluating GPU cloud providers must look beyond GPU specs alone and scrutinize the networking fabric that ties clusters together.

The role of low-latency networking

It’s not just about raw bandwidth – latency matters too. In distributed AI training, communication happens iteratively, with thousands of synchronization points across the training cycle. If each synchronization introduces even small delays, those delays accumulate into hours or days of wasted training time.
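The accumulation is easy to quantify with simple arithmetic. The sketch below multiplies the number of synchronization points by the extra latency each one incurs. The step count, the number of synchronizations per step, and the added latency are all hypothetical values chosen for illustration.

```python
def accumulated_delay_hours(training_steps, syncs_per_step, extra_latency_s):
    """Total time lost to added latency across all synchronization points."""
    total_seconds = training_steps * syncs_per_step * extra_latency_s
    return total_seconds / 3600


if __name__ == "__main__":
    # Hypothetical run: 1,000,000 steps, 100 syncs per step, 100 microseconds extra each.
    hours = accumulated_delay_hours(1_000_000, 100, 100e-6)
    print(f"Added waiting time: {hours:.1f} hours")
```

In this example an extra 100 microseconds per synchronization adds roughly 2.8 hours of waiting over the run, and that waiting is paid by every GPU in the cluster at once.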

Low-latency networking reduces the “waiting time” between GPUs and lets systems scale closer to linearly as more nodes are added. This is critical for workloads like reinforcement learning (RL), where fast feedback loops between environment simulation and policy updates determine training efficiency.

In inference, low latency directly affects user experience. A chatbot powered by a large model cannot take seconds to respond while waiting for GPU nodes to exchange data. Applications in healthcare, autonomous vehicles or financial systems demand sub-second responses – and achieving that requires as much focus on networking as on GPU compute.

How GPU cloud platforms address networking bottlenecks

Modern GPU cloud providers recognize that bandwidth is inseparable from GPU performance. Leading platforms architect their environments with high-speed interconnects that allow GPUs to communicate seamlessly at scale. This often involves RDMA (Remote Direct Memory Access) fabrics such as InfiniBand or RDMA over Converged Ethernet (RoCE) between nodes, together with NVLink for direct GPU-to-GPU links, which minimize latency and maximize throughput.

At the enterprise level, the advantage of GPU cloud platforms is elasticity. Instead of over-provisioning expensive networking hardware on-premises, organizations can rely on cloud infrastructure that scales both GPU compute and bandwidth dynamically. When workloads spike – say during the training of a new model or the launch of a real-time AI service – additional GPU instances and networking capacity can be provisioned instantly.

This alignment of compute and bandwidth is crucial. A GPU cloud platform that provides abundant GPUs without the networking backbone to support them will fail to deliver meaningful performance gains.

What CTOs and ML teams should prioritize

For decision-makers, the takeaway is clear: GPU specifications alone don’t guarantee performance. When evaluating infrastructure for AI workloads, both in-house and in the cloud, networking should be a first-class consideration.

CTOs and ML teams should ask:

- What interconnect technology does the platform use?

- How much bandwidth is available per node?

- What are the latency guarantees?

- How does the provider scale networking as GPU clusters expand?

These questions ensure that investments in GPUs translate into real-world performance rather than wasted potential.

The GMI Cloud advantage

GMI Cloud approaches AI infrastructure on the principle that compute and bandwidth must evolve together. The platform’s GPU-optimized cloud is underpinned by high-speed networking fabrics designed specifically to support large-scale distributed training and low-latency inference.

With auto-scaling capabilities, GMI Cloud provisions both GPU and network resources dynamically, ensuring workloads never hit hidden bottlenecks. Customers can also choose between reserved and on-demand models: reserved options provide cost efficiency for steady, predictable workloads, while on-demand instances give teams the flexibility to scale up only when needed without paying for idle GPUs. This combination ensures enterprises get the best of both worlds – predictable costs where appropriate, and agility when workloads fluctuate.

For CTOs and ML teams, this translates into faster iteration cycles, lower total cost of ownership and a competitive edge in industries where speed to market is everything.

Final thoughts

AI’s future depends not only on more powerful GPUs but also on the networks that connect them. Bandwidth is the circulatory system of modern AI workloads – without it, even the most advanced compute nodes sit underutilized. As organizations push toward ever larger and more complex models, the ability to scale both compute and communication will define success.

GPU cloud platforms that prioritize both dimensions, like GMI Cloud, enable enterprises to move past bottlenecks and build AI systems that are truly production-ready. For ML teams striving to deliver real-time, reliable and scalable AI, network bandwidth is no longer a supporting detail – it is mission-critical infrastructure.

Frequently Asked Questions About Network Bandwidth vs. GPU Power in AI Workloads

1. Why is network bandwidth just as important as raw GPU FLOPS for AI training?

Because modern AI training is as much about moving data as it is about crunching data. Large models constantly exchange parameters, gradients, and batches across GPUs. If interconnect bandwidth is limited, GPUs sit idle waiting for data, throughput collapses, and costs rise – even if you have state-of-the-art accelerators.

2. How does low-latency networking affect real-world performance?

Training involves thousands of synchronization points; tiny delays at each step add up to hours or days over a full run. Lower latency cuts that “waiting time” between GPUs so clusters scale efficiently. On the inference side, latency directly shapes user experience: use cases like fraud detection, real-time translation, or autonomous navigation need fast responses, which means networking matters as much as compute.

3. What are the hidden costs of under-provisioned networking in the GPU cloud?

You pay premium rates for GPUs that end up idle behind a congested network. That wastes budget and slows time-to-market. The pricing model does not rescue you: with reserved capacity, you still pay for idle time if bandwidth strands your GPUs; with on-demand, you pay more per hour but only while instances are running. In either case, poor networking still reduces efficiency and ROI.

4. Which interconnect technologies help remove AI training bottlenecks?

GPU cloud platforms tackle this with high-speed fabrics such as RDMA over Converged Ethernet, InfiniBand, or NVLink. These reduce latency and increase throughput so gradient aggregation, weight sync, and model-parallel communication do not become the limiting factor.

5. What should CTOs and ML teams ask when evaluating a GPU cloud for networking?

Focus on networking as a first-class requirement:

- What interconnect technology is used?

- How much bandwidth per node is available?

- What are the latency characteristics?

- How does networking scale as clusters grow?

These questions ensure GPU investments translate into real performance rather than stranded compute.