This guide explores what makes a GPU cloud platform ideal for LLM training and why GMI Cloud delivers the highest performance, scalability, and cost efficiency compared to traditional hyperscalers.

Training large language models (LLMs) requires substantial GPU compute power, high-speed networking, and infrastructure that can scale from prototype to production.

With NVIDIA H100 and H200 GPUs now standard for LLM workloads—and costs ranging from $2 to $13+ per GPU hour—choosing the right cloud platform can determine both your training efficiency and total project cost.

What Makes a GPU Cloud Provider Ideal for LLM Training?

Training LLMs differs greatly from inference or standard ML tasks. It requires specialized infrastructure capable of distributed scaling, ultra-fast networking, and predictable performance under heavy workloads.

Critical Requirements for Large Language Model Training

Training large language models isn’t just about having GPUs — it’s about building the right infrastructure stack that balances speed, scale, and cost efficiency.

Here are the core components every serious AI training environment needs, and why they matter.

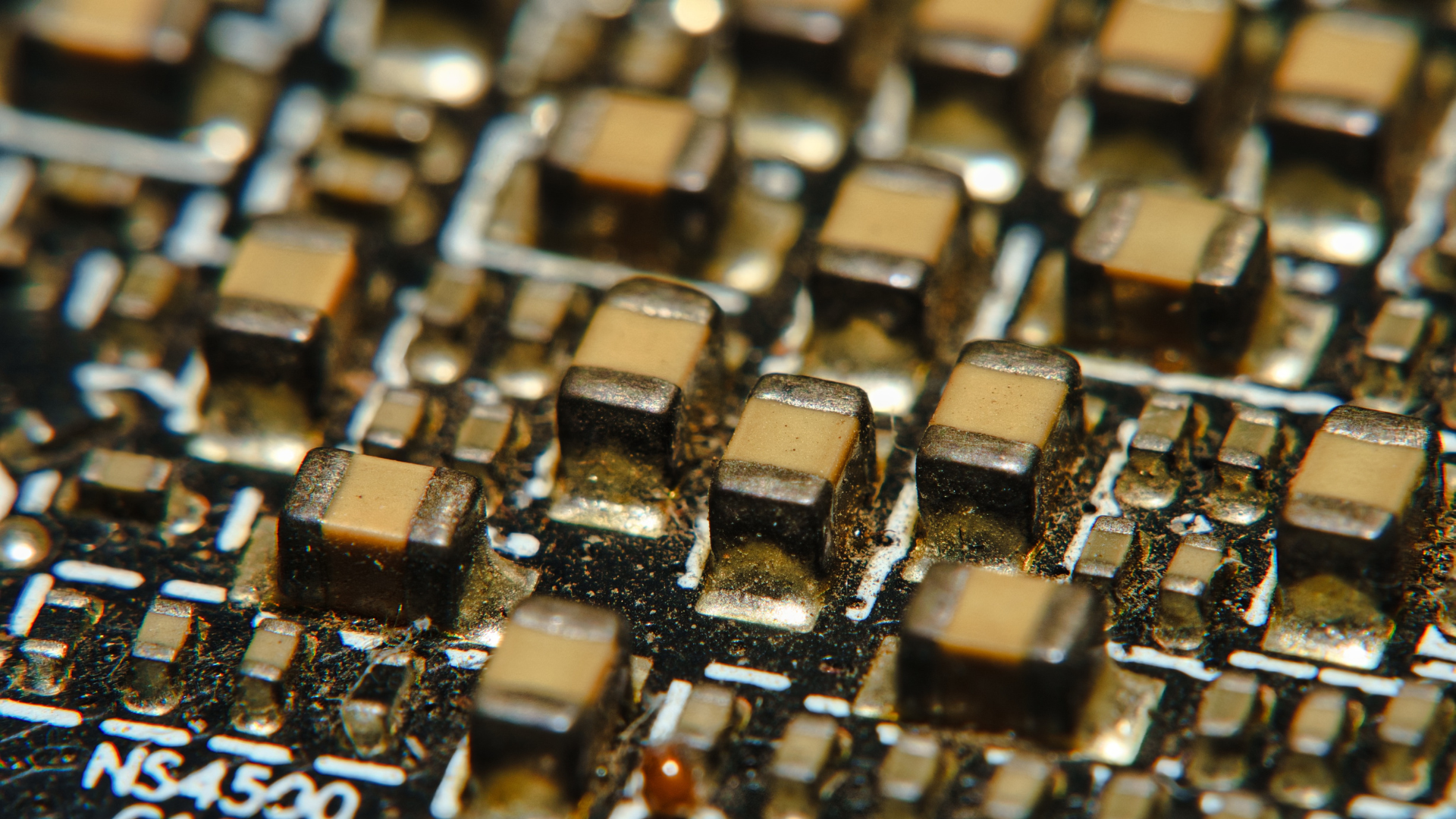

High-Performance GPUs

Modern LLM training typically runs on NVIDIA H100/H200 GPUs. NVIDIA cites roughly 3–9× faster training than the previous-generation A100, depending on model size, precision, and cluster scale. A gain of that size can shrink training cycles from weeks to days, accelerating both experimentation and iteration.
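A rough way to see how GPU throughput becomes calendar time is the common approximation that training costs about 6 FLOPs per parameter per token. The sketch below is an estimate under stated assumptions. The peak figures are approximate NVIDIA dense BF16 spec-sheet numbers for the SXM parts. The 40% model FLOPs utilization (MFU) and the 7B-parameter, 1T-token, 64-GPU run are hypothetical.

```python
# Rough training-time estimate using the ~6 * N * D FLOPs rule of thumb
# (N = parameters, D = training tokens). Peak throughputs are assumptions
# (approximate dense BF16 spec-sheet figures); replace the MFU default with
# a value measured on your own runs.

PEAK_BF16_TFLOPS = {
    "A100": 312.0,  # assumed dense BF16 peak, A100 SXM
    "H100": 989.0,  # assumed dense BF16 peak, H100 SXM
}


def training_days(params: float, tokens: float, gpu: str, num_gpus: int,
                  mfu: float = 0.4) -> float:
    """Estimate wall-clock days to train `params` parameters on `tokens` tokens."""
    total_flops = 6.0 * params * tokens
    achieved_flops_per_sec = PEAK_BF16_TFLOPS[gpu] * 1e12 * mfu * num_gpus
    return total_flops / achieved_flops_per_sec / 86_400  # seconds -> days


if __name__ == "__main__":
    # Hypothetical run: 7B parameters, 1T tokens, 64 GPUs, same MFU on both chips.
    for gpu in ("A100", "H100"):
        print(f"{gpu}: {training_days(7e9, 1e12, gpu, 64):.1f} days")
```

With these inputs the estimate is about 61 days on A100 versus about 19 days on H100. At equal utilization and BF16 precision this only reflects the roughly 3.2× spec-sheet gap. The upper end of the quoted range is generally attributed to additional features such as FP8 precision, which this sketch does not model.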

High-Speed Interconnect

Distributed training scales only as well as nodes can exchange gradients and activations. That is why InfiniBand, here with 3.2 Tbps of aggregate bandwidth per node, is crucial: it provides the high-throughput, low-latency backbone for efficient multi-node scaling across large GPU clusters.
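Interconnect bandwidth matters most during gradient synchronization. Here is a back-of-the-envelope sketch that assumes data-parallel training with a bandwidth-optimal ring all-reduce, where each of p GPUs sends roughly 2(p − 1)/p times the gradient size per step. The 7B-parameter BF16 model, the 64 GPUs, one 400 Gbit/s link per GPU (3.2 Tbps split across 8 GPUs), and the 100 Gbit/s comparison node are all assumptions for illustration.

```python
# Back-of-the-envelope gradient all-reduce time for data-parallel training.
# Assumes a bandwidth-optimal ring all-reduce: each GPU sends (and receives)
# 2 * (p - 1) / p * S bytes for an S-byte payload across p GPUs. Latency,
# compute/communication overlap, and NCCL's topology-aware algorithms are
# ignored, so treat the result as a rough lower bound.


def ring_allreduce_seconds(payload_bytes: float, num_gpus: int,
                           per_gpu_gbps: float) -> float:
    """Time for one ring all-reduce given per-GPU network bandwidth in Gbit/s."""
    bytes_sent_per_gpu = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    bytes_per_sec = per_gpu_gbps * 1e9 / 8  # Gbit/s -> bytes/s
    return bytes_sent_per_gpu / bytes_per_sec


if __name__ == "__main__":
    grads = 7e9 * 2  # hypothetical 7B-parameter model, BF16 gradients (2 bytes each)
    # 3.2 Tbps per 8-GPU node ~= 400 Gbit/s per GPU (assumed one NIC per GPU)
    fast = ring_allreduce_seconds(grads, num_gpus=64, per_gpu_gbps=3200 / 8)
    # Hypothetical 100 Gbit/s node link shared by 8 GPUs, for comparison
    slow = ring_allreduce_seconds(grads, num_gpus=64, per_gpu_gbps=100 / 8)
    print(f"3.2 Tbps/node: {fast:.2f} s, 100 Gbps/node: {slow:.1f} s per all-reduce")
```

Under these assumptions a full gradient sync takes about 0.55 s at 3.2 Tbps per node versus about 17.6 s at 100 Gbps per node. Real NCCL jobs overlap much of this with the backward pass, but the gap in raw transfer time is what limits multi-node scaling efficiency.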

Bare-Metal Performance

Virtualization introduces overhead. By running on bare-metal machines, teams get maximum compute efficiency, ensuring every GPU cycle contributes directly to model training.

Flexible Scaling

Workloads shift fast: today’s 8-GPU experiment might become tomorrow’s 512-GPU run. Dynamic scaling lets you add or remove GPUs on demand, so you pay for the capacity you actually use instead of over-provisioning.

Fast Storage

LLMs thrive on massive datasets, and high-IOPS storage ensures smooth, consistent data streaming. This setup eliminates I/O bottlenecks, keeping GPUs fully utilized and training pipelines unblocked.

Network Isolation

Security isn’t optional. Using dedicated subnets for AI training environments protects proprietary data and minimizes exposure to cross-tenant risks.

Simple Access

Developers shouldn’t wrestle with setup.

Providing SSH and standard tool access makes environments plug-and-play, enabling teams to start training in minutes, not days.

Transparent Pricing

Predictable, usage-based billing keeps long-term training runs financially manageable.

With clear cost visibility, teams can plan ahead and avoid unpleasant budget surprises.

GMI Cloud — Best GPU Cloud for High-Performance LLM Training at Scale

GMI Cloud provides the highest-performing infrastructure for large-scale AI and LLM workloads, combining bare metal NVIDIA H100 GPUs, 3.2 Tbps InfiniBand networking, and fully isolated training clusters for maximum throughput and data security.

Key Infrastructure Advantages

- 3.2 Tbps InfiniBand Networking: Industry-leading interconnect for distributed training

- Bare Metal H100 Servers: Zero virtualization overhead for full compute access

- NVIDIA NVLink Integration: Ultra-fast GPU-to-GPU communication

- Dedicated Training Clusters: Isolated environments for secure, large-scale training

- Network Subnet Isolation: Enhanced data protection for proprietary models

- Native Cloud Integration: Seamless resource scaling and cluster management

Superior Developer Experience

GMI Cloud provides a frictionless environment built for ML engineers and researchers. You can start training in minutes—no orchestration or complex configuration required.

No Complex Setup Required

- Direct SSH access to GPU clusters

- Pre-configured for PyTorch, TensorFlow, and JAX

- Standard Linux environments with full control

- Built-in support for Horovod and NCCL for distributed training

- pip and conda for custom environments

Enterprise-Grade Capabilities

GMI Cloud offers dedicated, compliant, and customizable GPU environments designed for enterprise reliability, scalability, and predictable performance.

Advanced Features

- Network Resource Isolation: InfiniBand partitioning for secure multi-tenant environments

- Custom Infrastructure Configurations: Tailored for specific model sizes and workloads

- Predictable Costs: Transparent pricing—no surprise charges

- Enterprise Compliance: Certified security standards for regulated industries

Framework and Software Support

GMI Cloud supports all major AI frameworks and libraries used for LLM research and production training.

Supported Frameworks and Formats: TensorFlow, PyTorch, Keras, MXNet, Caffe, and ONNX (model interchange format)

Optimized for Modern Large Language Model Training

- PyTorch 2.0+ with FSDP (Fully Sharded Data Parallel)

- JAX/Flax for efficient distributed training

- DeepSpeed and Megatron-LM integration

- Hugging Face Transformers optimization

- Customizable training pipelines

Flexible Pricing and Cost Optimization

GMI Cloud provides flexible pricing models tailored to project scale and utilization levels.

Available Models

- On-Demand: Instant access, hourly billing

- Reserved Instances: Long-term commitment discounts

- Spot Instances: Save up to 70% on preemptible workloads

- Automatic Scaling: Dynamically adjusts GPUs to match workload demand

Result: Predictable, optimized costs—without the inflated overhead typical of hyperscalers.
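Spot discounts are only part of the picture, because each preemption discards the work done since the last checkpoint. The following is a first-order sketch. It assumes preemptions arrive at a steady average rate and that each one loses, on average, half a checkpoint interval of work plus a fixed restart delay. The $3.00/GPU-hour on-demand price, one preemption per 20 hours, and 15-minute restart are hypothetical numbers.

```python
# First-order estimate of what spot capacity really costs once preemptions
# are counted. Assumes preemptions arrive at a steady rate and each one
# discards half a checkpoint interval of work on average plus a fixed
# restart delay. All prices and rates below are hypothetical.


def effective_spot_rate(spot_rate: float, preemptions_per_hour: float,
                        checkpoint_interval_h: float, restart_h: float) -> float:
    """Cost per *useful* GPU-hour on spot instances."""
    wasted_fraction = preemptions_per_hour * (checkpoint_interval_h / 2 + restart_h)
    if wasted_fraction >= 1:
        raise ValueError("preemptions too frequent for this checkpoint interval")
    return spot_rate / (1 - wasted_fraction)


if __name__ == "__main__":
    on_demand = 3.00               # hypothetical on-demand $/GPU-hour
    spot = on_demand * (1 - 0.70)  # 70% spot discount
    eff = effective_spot_rate(spot, preemptions_per_hour=1 / 20,
                              checkpoint_interval_h=2, restart_h=0.25)
    print(f"effective ${eff:.2f}/GPU-h, real savings {1 - eff / on_demand:.0%}")
```

With these inputs the 70% list discount becomes roughly a 68% real saving ($0.96 per useful GPU-hour). Shorter checkpoint intervals matter more as preemptions become frequent.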

Training Large Language Models Efficiently and Cost-Effectively

Training LLMs at scale requires careful planning and optimization. Here are four proven strategies to maximize efficiency and minimize cost.

1. Start Small, Scale Strategically

Begin with smaller models to validate your training pipeline before scaling to larger architectures.

- Start with a 1B parameter model on 1 GPU

- Scale to 7B on 8 GPUs

- Move to 70B+ models on 64+ GPUs

Why it works: Small runs surface bugs early, let you validate hyperparameters cheaply, and minimize wasted compute.
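Memory is part of what makes the progression above workable, because a model's training state has to fit across the GPUs assigned to it. This minimal sketch assumes fully sharded data parallelism (PyTorch FSDP full sharding or DeepSpeed ZeRO stage 3), mixed-precision Adam at about 16 bytes per parameter, and 80 GB GPUs. Activation memory is deliberately left out.

```python
# Per-GPU memory for model states (weights, gradients, optimizer) when fully
# sharded across GPUs, e.g. FSDP full sharding or ZeRO stage 3. Assumes
# mixed-precision Adam: BF16 weights (2) + BF16 grads (2) + FP32 master
# weights (4) + two FP32 Adam moments (8) = 16 bytes per parameter.
# Activations, temporary buffers, and fragmentation are NOT included.

BYTES_PER_PARAM = 2 + 2 + 4 + 8
GPU_MEMORY_GB = 80  # assumed 80 GB H100


def model_state_gb_per_gpu(params: float, num_gpus: int) -> float:
    """Model-state memory per GPU, in GB, when evenly sharded."""
    return params * BYTES_PER_PARAM / num_gpus / 1e9


if __name__ == "__main__":
    stages = [(1e9, 1), (7e9, 8), (70e9, 64)]  # the three stages listed above
    for params, gpus in stages:
        gb = model_state_gb_per_gpu(params, gpus)
        print(f"{params / 1e9:.0f}B on {gpus} GPU(s): {gb:.1f} GB/GPU "
              f"before activations (of {GPU_MEMORY_GB} GB)")
```

Each stage keeps model states between 14 and 17.5 GB per GPU, leaving room for activations. The 7B model unsharded on a single GPU would need about 112 GB for model states alone.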

2. Use Spot or Preemptible Instances for Savings

Spot instances can reduce costs by 50–70%.

- Save checkpoints every 1–2 hours

- Automatically resume training after interruptions

GMI Cloud integrates checkpointing and auto-resume for seamless recovery.
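The checkpoint-and-resume pattern can be sketched with the standard library alone. This is a minimal sketch, not GMI Cloud's integration. The JSON state, the `checkpoint.json` path, and the step counter are hypothetical stand-ins; a real PyTorch job would save model and optimizer `state_dict()`s with `torch.save` instead. The two key ideas are to write atomically, so a preemption mid-save cannot corrupt the last good checkpoint, and to always start by loading whatever checkpoint exists.

```python
import json
import os
import tempfile
import time

CKPT_PATH = "checkpoint.json"  # hypothetical path; use fast shared storage in practice
CKPT_INTERVAL_S = 90 * 60      # save roughly every 1.5 hours


def save_checkpoint(state: dict, path: str = CKPT_PATH) -> None:
    """Write to a temp file, then rename, so a crash never leaves a torn file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")  # same filesystem
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())  # make sure bytes hit disk before the rename
    os.replace(tmp, path)     # atomic rename on POSIX filesystems


def load_checkpoint(path: str = CKPT_PATH) -> dict:
    """Resume from the last checkpoint, or start fresh if none exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0}


def train(total_steps: int, path: str = CKPT_PATH,
          interval_s: float = CKPT_INTERVAL_S) -> dict:
    state = load_checkpoint(path)  # auto-resume: picks up where the last run stopped
    last_save = time.monotonic()
    while state["step"] < total_steps:
        state["step"] += 1  # placeholder for one real optimizer step
        if time.monotonic() - last_save >= interval_s:
            save_checkpoint(state, path)
            last_save = time.monotonic()
    save_checkpoint(state, path)  # final checkpoint at completion
    return state
```

Because `train` always begins with `load_checkpoint`, rerunning the same command after a preemption continues from the last saved step rather than from step 0.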

3. Optimize the Data Loading Pipeline

- Use NVMe or fast network storage

- Pre-tokenize datasets offline

- Use a multi-worker DataLoader (num_workers > 0) so batches are prepared in parallel with GPU compute

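Impact: Raising GPU utilization from ~70% to 95%+ cuts the GPU-hours needed for the same work by roughly a quarter (0.70 / 0.95 ≈ 0.74). Pipelines that start from lower utilization can save up to 40%.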
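Pre-tokenizing offline means the expensive text-to-ID step runs once, and training workers only read integers. This is a minimal standard-library sketch that assumes a vocabulary smaller than 65,536 so IDs fit in uint16. Using UTF-8 bytes as token IDs and `eos_id=0` are hypothetical placeholders for a real tokenizer and its end-of-sequence ID. The memory-mapped reader returns fixed-length samples by index, the same `__len__`/`__getitem__` shape a PyTorch map-style `Dataset` uses, so a DataLoader's workers could wrap a reader like this.

```python
import array
import mmap


def pretokenize(texts, out_path: str, eos_id: int = 0) -> int:
    """Offline pass: encode every document once and append IDs to one flat file."""
    tokens = array.array("H")  # uint16: fine for vocabularies under 65,536 tokens
    for text in texts:
        tokens.extend(text.encode("utf-8"))  # stand-in tokenizer: UTF-8 bytes as IDs
        tokens.append(eos_id)                # document separator
    with open(out_path, "wb") as f:
        tokens.tofile(f)
    return len(tokens)


class TokenFile:
    """Random access to fixed-length training samples without re-tokenizing."""

    def __init__(self, path: str, seq_len: int):
        self._file = open(path, "rb")
        self._mm = mmap.mmap(self._file.fileno(), 0, access=mmap.ACCESS_READ)
        self._raw = memoryview(self._mm)
        self._tokens = self._raw.cast("H")  # view file bytes as uint16 IDs, no copy
        self.seq_len = seq_len

    def __len__(self) -> int:
        return len(self._tokens) // self.seq_len  # drop the incomplete tail

    def __getitem__(self, i: int) -> list:
        if not 0 <= i < len(self):
            raise IndexError(i)
        start = i * self.seq_len
        return self._tokens[start:start + self.seq_len].tolist()

    def close(self) -> None:
        # Release views before closing the mmap they point into.
        self._tokens.release()
        self._raw.release()
        self._mm.close()
        self._file.close()
```

Memory mapping lets the OS page cache serve hot data, so workers read samples without loading the whole dataset into each process.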

4. Monitor and Optimize Continuously

Track and analyze metrics like:

- Tokens/sec per GPU

- GPU memory and compute utilization

- Network bandwidth usage

- Cost per million tokens

Use TensorBoard, NVIDIA DCGM, or GMI Cloud monitoring tools for real-time insights.
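Several of these metrics fall out of numbers every training loop already has. This minimal sketch assumes you log global tokens per optimizer step and the measured step time. The 64-GPU run, the 1024 × 4096-token batch, the 10-second step, the $3.00/GPU-hour price, and the 989 TFLOPS peak are hypothetical. MFU uses the common ~6 FLOPs per parameter per token approximation and is one way to quantify compute utilization.

```python
from dataclasses import dataclass


@dataclass
class StepStats:
    tokens_per_step: int       # global batch size x sequence length
    step_time_s: float         # measured wall-clock time per optimizer step
    num_gpus: int
    price_per_gpu_hour: float  # hypothetical $/GPU-hour

    @property
    def tokens_per_sec_per_gpu(self) -> float:
        return self.tokens_per_step / self.step_time_s / self.num_gpus

    @property
    def cost_per_million_tokens(self) -> float:
        gpu_hours_per_step = self.num_gpus * self.step_time_s / 3600
        return gpu_hours_per_step * self.price_per_gpu_hour / self.tokens_per_step * 1e6

    def mfu(self, params: float, peak_tflops_per_gpu: float) -> float:
        """Model FLOPs utilization via the ~6 * params FLOPs-per-token approximation."""
        achieved_flops = 6 * params * self.tokens_per_sec_per_gpu
        return achieved_flops / (peak_tflops_per_gpu * 1e12)


if __name__ == "__main__":
    s = StepStats(tokens_per_step=1024 * 4096, step_time_s=10.0,
                  num_gpus=64, price_per_gpu_hour=3.0)
    print(f"{s.tokens_per_sec_per_gpu:,.0f} tokens/s/GPU, "
          f"${s.cost_per_million_tokens:.3f} per 1M tokens, "
          f"MFU {s.mfu(7e9, 989.0):.0%} (hypothetical 7B model)")
```

For these inputs the run processes about 6,550 tokens/s per GPU at roughly $0.13 per million tokens and about 28% MFU. Logging these values every few hundred steps makes regressions in throughput or cost visible early.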

The Bottom Line

GMI Cloud delivers the best combination of performance, flexibility, and cost-efficiency for training large language models in 2025.

With bare metal NVIDIA H100/H200 GPUs, 3.2 Tbps InfiniBand networking, predictable pricing, and enterprise-grade security, GMI Cloud enables AI teams to train faster and smarter—without the complexity or cost overhead of hyperscalers.

Start Training Today: Explore GMI Cloud GPU Instances →