Building a powerful AI model is only half the battle. Real impact happens when those models are served efficiently, reliably and at scale. Whether powering personalization, assistants or industrial automation, inference is where performance, cost and user experience converge. And as workloads grow, the way inference is structured can make the difference between a system that scales smoothly and one that crumbles under demand.

Scalable model serving isn’t just about throwing more GPUs at the problem. It’s about choosing the right serving patterns – architectural approaches that optimize throughput, minimize latency, and align with both workload characteristics and business goals.

In practice, different serving patterns shine in different scenarios. Here are some of the most effective strategies enterprises use to deliver fast, cost-efficient inference at scale.

1. Single-model, multi-instance serving

This is the simplest and most common serving pattern. A single model is deployed across multiple GPU instances, with requests distributed through a load balancer or gateway. It offers horizontal scalability and resilience – if one node fails, others take over seamlessly.

This pattern is ideal for:

- Stable workloads with predictable traffic

- High-availability systems

- Applications powered by one core model

Autoscaling makes it easy to adjust capacity dynamically. However, when traffic fluctuates, some GPU capacity may sit idle, making this pattern less efficient for variable workloads.

2. Multi-model serving on shared GPUs

Many organizations serve more than one model: multiple versions for testing, different models for various use cases, or distinct tasks like classification and detection. Running each model on a separate GPU can get expensive fast.

Multi-model serving allows several models to share the same GPU, improving utilization. Frameworks can load frequently used models into memory and swap out less-used ones as needed. Smart scheduling keeps latency stable.

This approach is best for:

- A/B testing and gradual rollouts

- Teams running multiple lightweight models

- Cost optimization when GPUs are limited

The challenge is resource contention. If too many models compete for GPU memory, performance can degrade. Techniques like caching, batching and prioritization help maintain stability.

3. Batch inference for high-throughput workloads

When low latency isn’t critical, batch inference is highly efficient. In this pattern, requests are collected and processed together, maximizing GPU throughput and lowering cost per prediction.

Common scenarios include:

- Large-scale recommendation pipelines

- Offline scoring and analytics

- Embedding generation

Batching makes full use of GPU resources and aligns well with reserved capacity models. The trade-off is increased latency, so many teams pair batch inference with a real-time serving layer for time-sensitive requests.

4. Streaming and real-time inference

Some use cases demand instant responses. Streaming or real-time inference processes requests as they arrive, often within milliseconds. It typically involves:

- An inference gateway to route traffic

- A pool of GPU-backed workers

- Autoscaling for demand spikes

Ideal for:

- Chatbots and assistants

- Fraud detection and recommendations

- Operational systems with tight latency budgets

Careful batching and queuing strategies help maintain low latency under heavy load. Locating inference close to users – or distributing across regions – can reduce network overhead further.

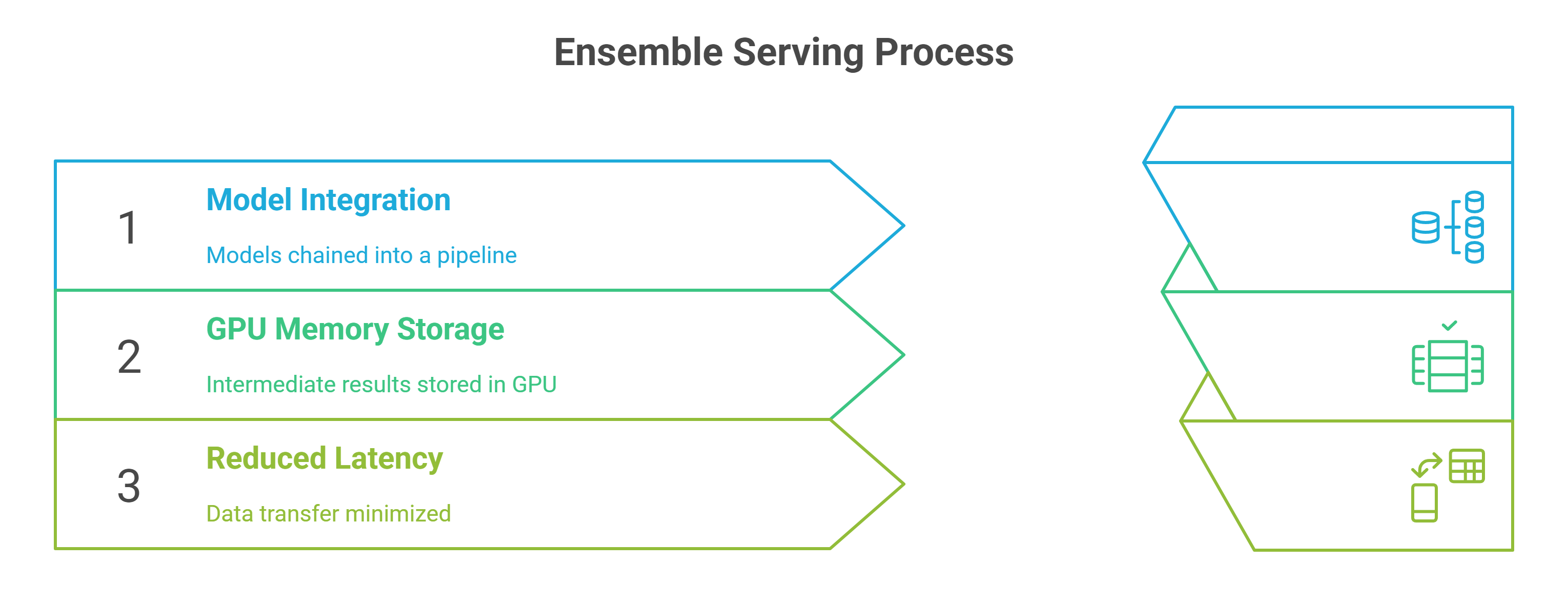

5. Ensemble and pipeline serving

Complex AI systems often rely on multiple models working in sequence. A speech assistant, for example, might use an ASR model, a language model and a recommendation engine.

Ensemble serving chains these models into a single pipeline. Intermediate results stay in GPU memory, minimizing data transfer and latency. This pattern shines when:

- Multiple models need to work together

- Applications require structured decision flows

- Teams want to avoid building separate serving layers for each stage

Efficient orchestration ensures each stage runs smoothly, keeping the pipeline fast and reliable.

6. Edge-cloud hybrid serving

For global deployments or ultra-low latency use cases, edge-cloud hybrid serving combines local and centralized resources. Lightweight tasks run at the edge, while heavy computations go to the cloud.

Typical scenarios include:

- Geo-distributed applications

- Data privacy and residency constraints

- Cost-sensitive deployments with local pre-processing

For example, filtering or feature extraction can happen at the edge, with only relevant data sent to the cloud for final inference. This reduces bandwidth costs and improves response times. The main challenge is keeping models synchronized across environments.

7. Serverless and on-demand inference

For workloads with unpredictable traffic, serverless inference is an increasingly popular pattern. Instead of running persistent clusters, endpoints spin up automatically when requests arrive and scale down to zero when idle.

This pattern is best for:

- Spiky or intermittent workloads

- Reducing idle GPU costs

- Lightweight models with fast startup times

The key advantage is cost efficiency – teams pay only for what they use. As serverless GPU platforms mature, cold start delays continue to shrink, making this option viable for more use cases.

Choosing the right pattern

Each serving pattern has strengths and trade-offs. The best choice depends on traffic, model size, latency requirements and budget.

Examples in practice:

- A recommendation system might combine real-time streaming for active users with nightly batch jobs for offline updates.

- A fintech platform could use multi-model serving for testing and single-model clusters for production stability.

- A healthcare provider might use edge-cloud hybrid serving to comply with local data regulations while scaling globally.

Many organizations adopt a hybrid strategy, blending multiple patterns to support different workloads efficiently.

How GMI Cloud supports scalable inference

GMI Cloud is designed to power these serving patterns with GPU-optimized infrastructure. High-speed networking and low-latency interconnects allow teams to scale inference without communication bottlenecks.

The platform supports both reserved and on-demand GPU capacity, enabling organizations to balance cost and elasticity. With autoscaling, observability and intelligent scheduling built in, ML teams can deploy pipelines, streaming systems or serverless endpoints with minimal friction.

Scaling inference the smart way

Serving models at scale is about more than just raw GPU power. It requires architectural choices that align with business goals, performance targets and cost constraints. By adopting the right serving patterns – and combining them strategically – enterprises can deliver fast, reliable AI experiences without overpaying for infrastructure.

As model architectures evolve and workloads diversify, flexibility will matter more than ever. Platforms like GMI Cloud give organizations the building blocks to deploy inference intelligently – so they can scale seamlessly while keeping performance sharp and costs predictable.