This article explains how to design scalable, efficient inference pipelines for Kubernetes-native GPU clusters. It explores how Kubernetes orchestration, GPU scheduling, and intelligent autoscaling enable enterprises to deliver high-performance, low-latency AI inference at scale while maintaining cost efficiency and compliance.

What you’ll learn:

• Why Kubernetes is the foundation for scalable GPU inference

• Core components of Kubernetes-native inference pipelines

• How autoscaling strategies maintain responsiveness and control costs

• Best practices for GPU scheduling and multi-model serving

• Techniques for managing model updates and rolling deployments

• How to optimize data flow for high-throughput inference

• Using observability tools to balance performance and cost

• Security, compliance, and governance in GPU-based clusters

• How GMI Cloud’s Cluster Engine simplifies orchestration and scaling

Enterprises running AI at scale need infrastructure that can adapt fast, stay resilient, and deliver consistent performance. Kubernetes has become the orchestration layer of choice, and when paired with GPU acceleration, it forms a powerful foundation for production-grade inference.

Kubernetes-native GPU clusters let teams scale complex workloads without rebuilding their infrastructure – but success depends on more than just adding GPUs. It’s about designing pipelines that fully exploit orchestration capabilities while keeping utilization high and latency low.

Why Kubernetes for GPU inference?

Kubernetes has long been the standard for container orchestration, offering a robust framework for automating deployment, scaling and management of containerized applications. Its strengths – elasticity, scheduling intelligence and resource abstraction – align perfectly with the needs of GPU-based inference.

Inference workloads are dynamic. Traffic may spike unpredictably, models may change frequently, and infrastructure often needs to scale up or down in minutes. Kubernetes provides the primitives to handle all of this: GPU node scheduling, autoscaling, load balancing, rolling updates and workload isolation.

In a GPU context, Kubernetes allows teams to:

- Run multiple models across shared clusters without manual intervention

- Dynamically schedule workloads to available GPU nodes

- Scale horizontally with demand

- Integrate seamlessly with CI/CD and MLOps workflows

Rather than managing GPUs manually, teams can rely on Kubernetes to keep infrastructure responsive and optimized.

Core building blocks of Kubernetes-native inference pipelines

A well-designed inference pipeline isn’t just about serving models – it’s about orchestrating every stage of the workflow. At a high level, these pipelines include:

- Request ingress and load balancing: Traffic enters the cluster through ingress controllers or service meshes that route requests to the appropriate model endpoints. High-performance routing ensures that GPUs stay busy without overloading specific nodes.

- Model servers: Containerized model servers – such as Triton, TorchServe or custom-built endpoints – handle the actual inference. Each server can host one or more models, depending on workload requirements.

- Autoscaling layer: Kubernetes Horizontal Pod Autoscaler (HPA) or KEDA dynamically scales inference pods based on traffic volume, GPU utilization or custom metrics. This is crucial to maintaining responsiveness while optimizing cost.

- Monitoring and observability: Inference pipelines depend on real-time visibility into performance metrics like latency, throughput, GPU utilization and error rates. Tools such as Prometheus and Grafana make these signals actionable, enabling teams to adjust capacity proactively.

- GPU node management: Specialized node pools host GPU workloads. Kubernetes device plugins expose GPUs to pods, ensuring workloads are scheduled efficiently. This layer also manages mixed workloads without resource conflicts.

These components form the foundation of Kubernetes-native inference – but true scalability comes from how they’re designed to work together.

Autoscaling intelligently

Inference traffic rarely stays constant. Kubernetes’ autoscaling capabilities allow inference pipelines to respond automatically to these fluctuations. Horizontal Pod Autoscaling scales the number of serving pods, while Cluster Autoscaler can spin up or down GPU nodes themselves.

However, scaling GPU workloads efficiently requires more nuance than CPU-bound services:

- GPU warm-up time matters. Scaling too late can lead to cold-start latency spikes. Proactive scaling based on predictive signals (like time-of-day patterns or historical traffic) can mitigate this.

- Pod binpacking helps optimize GPU usage. Instead of spinning up new nodes prematurely, Kubernetes can place multiple lightweight models on the same GPU.

- Graceful scale-down prevents dropping active requests, maintaining uptime and performance.

By combining reactive autoscaling with predictive strategies, teams can maintain both responsiveness and cost control.

Managing GPU scheduling

Efficient GPU scheduling is at the heart of high-performance inference pipelines. Kubernetes provides scheduling primitives, but inference workloads often require more fine-grained control.

Some best practices include:

- Node taints and tolerations to ensure only inference workloads land on GPU nodes.

- Affinity and anti-affinity rules to distribute workloads intelligently across nodes.

- Custom schedulers or scheduling extensions for specialized needs, such as allocating fractional GPUs or prioritizing latency-sensitive pods.

Scheduling is also key when running multi-model serving or mixed workloads (such as inference and preprocessing on the same cluster). A well-tuned scheduler prevents resource fragmentation and ensures GPUs are saturated with useful work.

Handling model lifecycle and updates

In production, models change frequently. Teams must be able to deploy new versions without downtime or performance degradation. Kubernetes simplifies this with rolling updates, blue-green deployments and canary releases.

- Rolling updates allow gradual rollout of new model containers while keeping existing pods running.

- Blue-green deployments provide a fallback option, reducing the risk of downtime.

- Canary releases let teams test performance on a small slice of traffic before going live at scale.

These strategies make it possible to deploy models continuously without interrupting inference services – a critical capability for high-velocity MLOps teams.

Orchestrating data flows efficiently

Inference pipelines are only as good as the data feeding them. For many workloads, especially multimodal or streaming use cases, data flow management becomes a bottleneck.

To keep GPUs fully utilized:

- Use high-bandwidth connections between data sources and GPU nodes.

- Minimize serialization and deserialization steps.

- Preprocess data upstream where possible to reduce GPU overhead.

- Employ data caching and message queues to smooth out traffic spikes.

Integrating data infrastructure with Kubernetes-native workflows ensures the pipeline scales seamlessly with both compute and I/O.

Observability and cost control

Running large-scale inference isn’t just about performance – it’s also about controlling cost. Idle GPUs are expensive, and inefficient scaling leads to wasted capacity.

Kubernetes observability stacks give ML teams visibility into:

- GPU and memory utilization

- Request latency and throughput

- Autoscaling behavior and node health

- Cost attribution per workload or namespace

By combining these insights with intelligent scaling and scheduling, teams can align resource consumption closely with demand.

Reserved GPU capacity can lower unit costs, while on-demand capacity provides elasticity. Kubernetes makes it easy to mix both models dynamically, so teams don’t overpay while maintaining responsiveness.

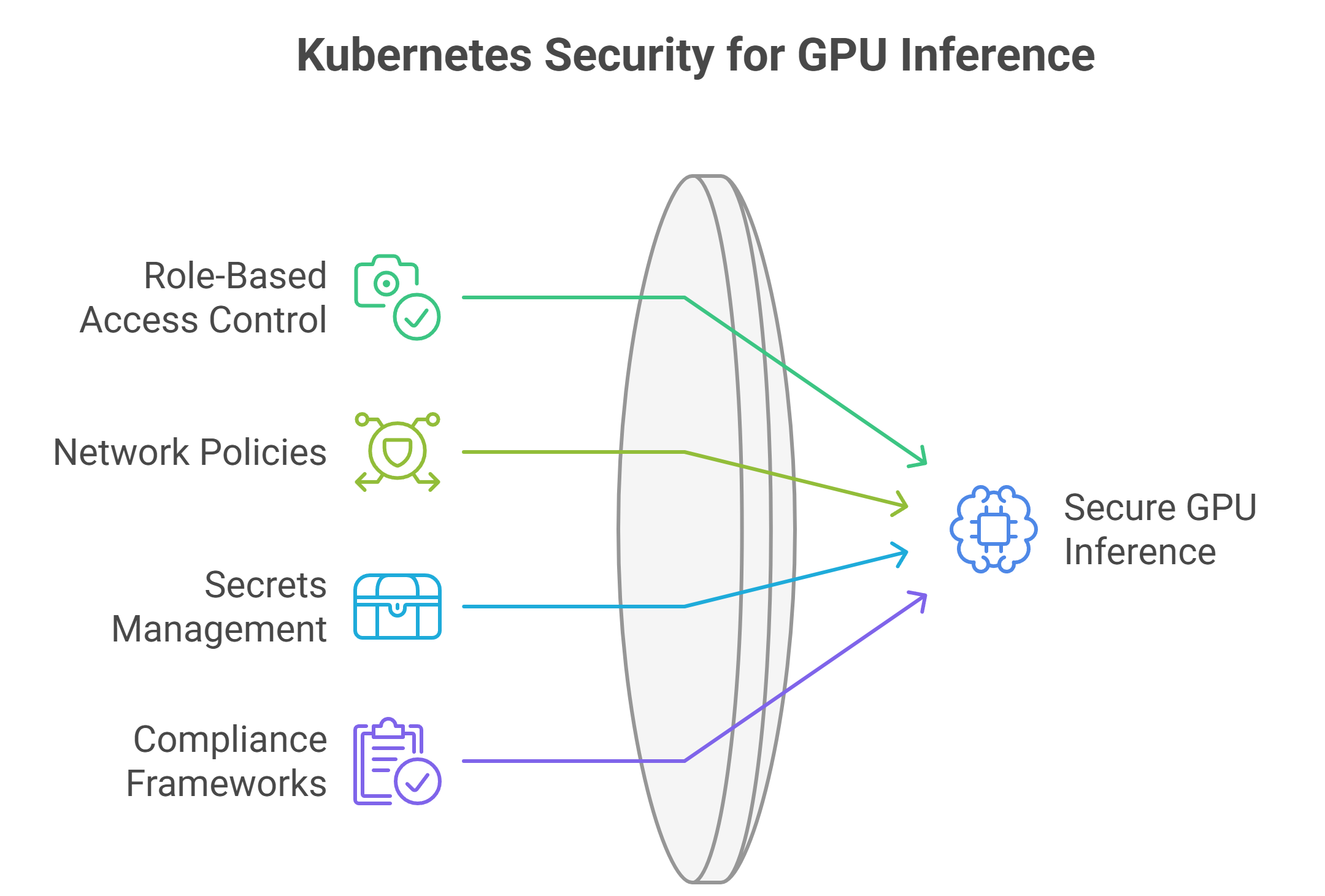

Security and compliance considerations

For regulated industries, security is a first-class concern. Kubernetes provides a robust security model that integrates with GPU inference:

- Role-based access control (RBAC) restricts access to inference services.

- Network policies enforce traffic isolation between workloads.

- Secrets management keeps credentials secure.

- Compliance frameworks such as SOC 2 can be integrated at the cluster level.

Security baked into the pipeline ensures that scaling inference doesn’t come at the cost of compliance or trust.

Why GMI Cloud is built for Kubernetes-driven AI

Kubernetes-native GPU clusters are rapidly becoming the backbone of scalable inference pipelines, offering flexibility, efficiency and control. Success depends on aligning architecture with operational realities – from autoscaling and scheduling to model lifecycle and security.

GMI Cloud provides the infrastructure to make this possible: GPU-optimized clusters with high-bandwidth networking, intelligent scheduling and elastic scaling. By combining reserved and on-demand GPU resources, enterprises balance cost and performance while keeping latency low.

What sets GMI Cloud apart is its Cluster Engine, a resource management platform that simplifies large-scale orchestration. It offers fine-grained control over GPU allocation, automatic bin packing and real-time observability to maximize utilization. Teams can dynamically scale workloads, minimize idle capacity, and maintain predictable performance without manual intervention.

With built-in observability, automated scaling, security integrations, and support for popular orchestration tools, GMI Cloud lets teams focus on building AI products, not managing infrastructure.

FAQs: Designing Inference Pipelines for Kubernetes-Native GPU Clusters

1. Why choose Kubernetes for GPU inference at enterprise scale?

Kubernetes brings elasticity, intelligent scheduling, and resource abstraction—exactly what dynamic inference needs. It handles GPU node scheduling, autoscaling, load balancing, rolling updates, and workload isolation, so teams can scale models quickly without rebuilding infrastructure.

2. What are the core building blocks of a Kubernetes-native GPU inference pipeline?

A robust pipeline includes: request ingress and load balancing (via ingress controllers or a service mesh), containerized model servers (for example, Triton, TorchServe, or custom endpoints), an autoscaling layer (HPA or KEDA), monitoring and observability (Prometheus, Grafana), and GPU node management using Kubernetes device plugins.

3. How do I autoscale GPU inference pipelines without latency spikes?

Combine reactive and predictive scaling. Use HPA/KEDA on traffic, GPU utilization, or custom metrics; warm GPUs to avoid cold-start delays; binpack pods to maximize GPU usage before adding nodes; and scale down gracefully so active requests are not dropped.

4. What are best practices for GPU scheduling in Kubernetes?

Use node taints/tolerations to keep inference on GPU nodes, affinity/anti-affinity to spread load intelligently, and—if needed—custom scheduling for fractional allocation or prioritizing latency-sensitive pods. This prevents resource fragmentation and keeps GPUs saturated with useful work.

5. How should we manage model lifecycle updates without downtime?

Leverage Kubernetes deployment strategies: rolling updates for gradual replacement, blue-green for safe fallback, and canary releases to test new versions on a small traffic slice. Tie this to your MLOps flow so model changes ship continuously without interrupting service.

6. How do we balance observability, cost control, and security on Kubernetes-native GPU clusters?

Track latency, throughput, GPU/memory utilization, autoscaling behavior, node health, and cost per workload/namespace. Mix reserved GPU capacity for steady demand with on-demand for bursts to avoid idle spend. Secure the stack with RBAC, network policies, secrets management, and integrate compliance frameworks such as SOC 2 at the cluster level.