As machine learning has matured from experimental projects into production-critical systems, the field of MLOps has risen to meet a growing challenge: how to manage AI models reliably at scale. In this environment, GPUs are not just hardware accelerators – they are the backbone of modern AI infrastructure. But when multiple teams, workloads and models compete for finite GPU resources, performance can become unpredictable and costs can quickly spiral out of control.

This is where GPU scheduling enters the picture. Once a niche concern for HPC researchers, GPU scheduling is now a central pillar of next-generation MLOps, enabling enterprises to maximize GPU utilization, control costs and ensure fairness across workloads. For CTOs and ML teams, mastering GPU scheduling means turning GPUs from a scarce bottleneck into a shared, scalable resource that drives innovation.

Why GPU scheduling matters

AI workloads are diverse. A team training a billion-parameter natural language model may need dozens of GPUs working in parallel for weeks, while another team fine-tuning a computer vision model might only need a single GPU for a few hours. Inference workloads add another layer of complexity, often requiring bursty, low-latency GPU access that differs from steady training demand.

Without GPU scheduling, these workloads compete chaotically. Some jobs monopolize resources while others starve, leading to inefficiency and frustration. Scheduling ensures that workloads are assigned to GPUs based on priority, availability and performance requirements, creating a predictable and optimized environment.

The evolution of GPU scheduling

Early GPU use in machine learning was relatively simple: one job per GPU, with static allocation. But as AI adoption accelerated, this model broke down. Enterprises realized that GPUs were sitting idle for large portions of their allocated time – an expensive waste given the premium cost of GPU infrastructure.

Modern GPU scheduling has evolved into a sophisticated discipline that leverages container orchestration systems, advanced job queues and workload-aware allocation. Techniques like fractional GPU allocation, multi-tenant GPU sharing and dynamic preemption now allow multiple jobs to share the same hardware while limiting interference between them. This transforms GPUs from exclusive resources into flexible, cloud-native components of the MLOps pipeline.
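To make fractional allocation concrete, here is a minimal sketch that packs jobs requesting a fraction of a GPU onto a small pool using first-fit decreasing. The `Gpu` class, the `pack_jobs` function and the job names are illustrative assumptions, not the API of any particular scheduler, and a real system would also need hardware-level isolation to back these fractions.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    name: str
    free: float = 1.0                      # fraction of the device still unallocated
    jobs: list = field(default_factory=list)


def pack_jobs(requests, gpu_count):
    """Place {job: fraction} requests onto GPUs with first-fit decreasing.

    Returns (gpus, pending), where pending lists jobs that did not fit.
    """
    gpus = [Gpu(f"gpu{i}") for i in range(gpu_count)]
    pending = []
    # Largest requests first, so full-GPU jobs are not blocked by small slices.
    for job, fraction in sorted(requests.items(), key=lambda kv: -kv[1]):
        target = next((g for g in gpus if g.free + 1e-9 >= fraction), None)
        if target is None:
            pending.append(job)            # no GPU has enough free capacity
            continue
        target.free = round(target.free - fraction, 6)
        target.jobs.append(job)
    return gpus, pending


# Hypothetical workload mix: one full-GPU training job plus three smaller jobs.
gpus, pending = pack_jobs(
    {"train": 1.0, "finetune": 0.5, "notebook": 0.25, "eval": 0.25},
    gpu_count=2,
)
for gpu in gpus:
    print(gpu.name, gpu.jobs, "free:", gpu.free)
print("pending:", pending)
```

With static one-job-per-GPU allocation, the same four jobs would need four devices; here the three small jobs share the second GPU.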

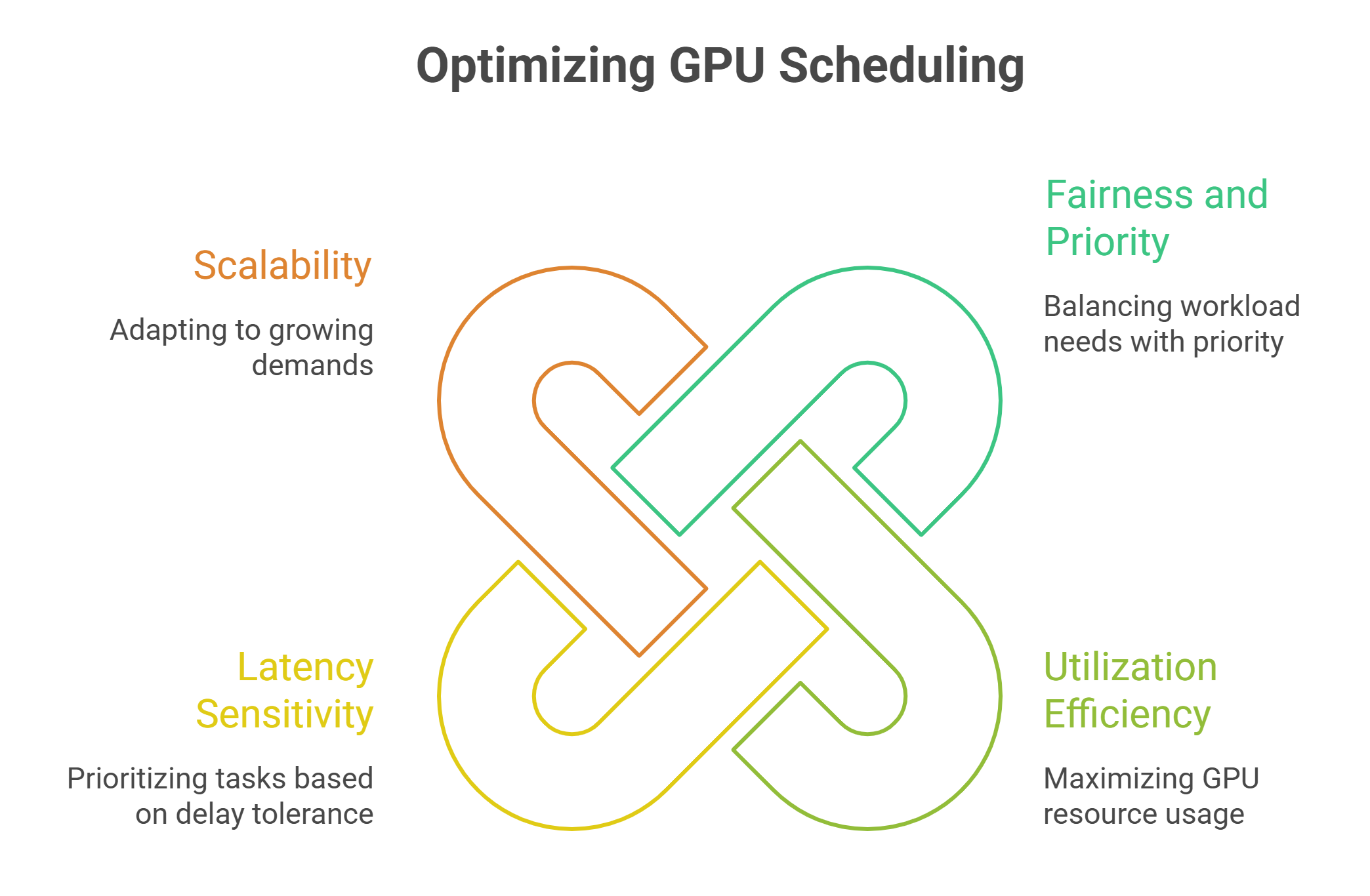

Key dimensions of GPU scheduling

- Fairness and priority: Scheduling systems must balance competing needs. A production inference workload powering customer-facing applications may require guaranteed GPU access, while a research experiment can tolerate delays. Priority-based scheduling ensures that critical workloads always come first.

- Utilization efficiency: The cost of GPUs makes underutilization a serious problem. Scheduling systems can break GPUs into fractions, assigning slices to smaller jobs while larger training tasks consume full GPUs. This fine-grained allocation drives higher efficiency across clusters.

- Latency sensitivity: Not all workloads are equal in their tolerance for delays. Inference systems need near-instant GPU response, while batch training jobs can wait. Scheduling must account for these differences, assigning resources dynamically to minimize latency where it matters most.

- Scalability: As teams and models grow, the scheduling system itself must scale. GPU scheduling is not just about single clusters – it’s about orchestrating resources across distributed environments, data centers and cloud providers.
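The fairness and priority dimension can be sketched as a priority queue with preemption. This is a simplified illustration under stated assumptions: the `PriorityScheduler` class, its method names and the job names are hypothetical, lower priority numbers mean more urgent work, and preempted jobs are simply requeued rather than checkpointed.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count


@dataclass(order=True)
class Job:
    priority: int                                   # lower number = more urgent
    seq: int                                        # tie-breaker: submission order
    name: str = field(compare=False)
    gpus: int = field(compare=False)
    preemptible: bool = field(compare=False, default=True)


class PriorityScheduler:
    def __init__(self, total_gpus):
        self.free = total_gpus
        self.queue = []                             # min-heap of waiting jobs
        self.running = []
        self._seq = count()

    def submit(self, name, gpus, priority, preemptible=True):
        job = Job(priority, next(self._seq), name, gpus, preemptible)
        heapq.heappush(self.queue, job)
        self._dispatch()

    def finish(self, name):
        for job in self.running:
            if job.name == name:
                self.running.remove(job)
                self.free += job.gpus
                break
        self._dispatch()

    def _dispatch(self):
        # Strict priority order: stop as soon as the most urgent job cannot run.
        while self.queue:
            job = self.queue[0]
            if job.gpus > self.free and not self._preempt_for(job):
                break
            heapq.heappop(self.queue)
            self.free -= job.gpus
            self.running.append(job)

    def _preempt_for(self, job):
        # Candidate victims: preemptible and strictly less urgent than `job`.
        victims = sorted(
            (r for r in self.running if r.preemptible and r.priority > job.priority),
            key=lambda r: (-r.priority, -r.seq),
        )
        chosen, reclaimed = [], self.free
        for victim in victims:
            if reclaimed >= job.gpus:
                break
            chosen.append(victim)
            reclaimed += victim.gpus
        if reclaimed < job.gpus:
            return False                            # evicting would not free enough
        for victim in chosen:
            self.running.remove(victim)
            self.free += victim.gpus
            heapq.heappush(self.queue, victim)      # requeue instead of dropping
        return True


sched = PriorityScheduler(total_gpus=4)
sched.submit("research-sweep", gpus=3, priority=5)
sched.submit("nightly-train", gpus=1, priority=3)
sched.submit("prod-inference", gpus=2, priority=0, preemptible=False)
print([j.name for j in sched.running], "waiting:", [j.name for j in sched.queue])
```

In this run the production inference job evicts the least urgent research job, which waits in the queue until enough GPUs are released. Strict priority alone can starve low-priority work, so a production policy would likely add aging or per-team quotas.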

GPU scheduling in the MLOps lifecycle

MLOps spans the full AI model lifecycle: from development and training to deployment, monitoring and iteration. GPU scheduling is embedded into each of these stages:

- Training: During distributed training, schedulers allocate GPUs across nodes to ensure jobs run efficiently and without unnecessary queuing. Advanced schedulers also optimize placement to reduce communication overhead between GPUs.

- Deployment and inference: Schedulers assign GPUs to inference workloads based on traffic patterns. For real-time applications, they ensure low-latency GPU access by reserving capacity or using auto-scaling.

- Experimentation: Research and development often involves running dozens of parallel experiments. GPU scheduling helps distribute resources evenly, preventing one team’s experiments from consuming all available compute.

- Monitoring and iteration: Scheduling is integrated with monitoring tools, providing visibility into GPU usage and enabling proactive reallocation when workloads change.

By weaving GPU scheduling into the entire lifecycle, MLOps platforms create a continuous loop of efficiency, performance and control.
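As one illustration of the training stage, the sketch below places a distributed job on as few nodes as possible, using node count as a rough proxy for communication overhead. The function name, node names and the heuristic itself are assumptions for illustration; real schedulers typically also consider interconnect topology within and between nodes.

```python
def place_training_job(free_by_node, gpus_needed):
    """Return {node: gpus_to_use} spanning as few nodes as possible.

    Returns None when the cluster does not have enough free GPUs.
    """
    if sum(free_by_node.values()) < gpus_needed:
        return None

    # Prefer a single node, and among those the tightest fit, so that
    # large nodes stay available for larger jobs.
    fitting = [(free, node) for node, free in free_by_node.items() if free >= gpus_needed]
    if fitting:
        _, node = min(fitting)
        return {node: gpus_needed}

    # Otherwise fill from the nodes with the most free GPUs first,
    # which minimizes the number of nodes the job spans.
    placement, remaining = {}, gpus_needed
    for node, free in sorted(free_by_node.items(), key=lambda kv: (-kv[1], kv[0])):
        if remaining == 0:
            break
        if free == 0:
            continue
        take = min(free, remaining)
        placement[node] = take
        remaining -= take
    return placement


# Hypothetical cluster state: free GPUs per node.
cluster = {"node-a": 8, "node-b": 4, "node-c": 2, "node-d": 4}
print(place_training_job(cluster, 4))
print(place_training_job(cluster, 10))
```

A 4-GPU job lands on one 4-GPU node rather than fragmenting the 8-GPU node, while a 10-GPU job spans two nodes instead of three or four.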

How cloud platforms approach GPU scheduling

On-prem systems often struggle with GPU scheduling due to limited resources and rigid infrastructure. Cloud-native platforms, on the other hand, are designed with dynamic scheduling in mind. They combine autoscaling with workload-aware orchestration, with the aim of allocating GPUs where they deliver the most value.

GMI Cloud, for example, integrates GPU scheduling into its inference optimization platform called GMI Cloud Inference Engine. By automatically balancing workloads across GPU clusters, the platform ensures low latency for real-time inference while maintaining cost efficiency for batch training. Developers can specify workload requirements through APIs, and the system intelligently provisions GPUs accordingly.

This abstraction allows ML teams to focus on building models rather than managing infrastructure. Instead of worrying about who gets which GPU, teams trust the scheduler to maximize performance and minimize cost.
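What "specifying workload requirements" might look like can be sketched as a small declarative spec. The example below is purely hypothetical: the `WorkloadSpec` class and all of its field names are assumptions made for illustration and do not represent GMI Cloud's API or any other provider's.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional

VALID_KINDS = {"training", "inference", "experiment"}


@dataclass(frozen=True)
class WorkloadSpec:
    """Hypothetical description of what a workload needs from the scheduler."""
    name: str
    kind: str                              # one of VALID_KINDS
    gpus: float                            # 0.5 = half a device, 8 = eight GPUs
    priority: int = 5                      # lower number = more urgent
    max_latency_ms: Optional[int] = None   # only meaningful for inference

    def __post_init__(self):
        if self.kind not in VALID_KINDS:
            raise ValueError(f"unknown workload kind: {self.kind!r}")
        if self.gpus <= 0:
            raise ValueError("gpus must be positive")
        if self.max_latency_ms is not None and self.kind != "inference":
            raise ValueError("max_latency_ms applies to inference workloads only")

    def to_json(self):
        # Serialized form that a client could send to a scheduling service.
        return json.dumps(asdict(self), sort_keys=True)


spec = WorkloadSpec("chat-api", "inference", gpus=0.5, priority=0, max_latency_ms=50)
print(spec.to_json())
```

Validating the spec on the client side keeps malformed requests from ever reaching the scheduler, which only sees intent (kind, size, priority, latency target) rather than specific devices.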

The future of GPU scheduling in MLOps

Next-generation MLOps will push GPU scheduling even further. Trends to watch include:

- AI-driven scheduling: Using reinforcement learning or predictive analytics, schedulers will anticipate workload demand and allocate GPUs proactively.

- Cross-cloud orchestration: As enterprises adopt hybrid and multi-cloud strategies, scheduling will extend across providers, ensuring seamless resource allocation no matter where workloads run.

- Specialized scheduling for model types: Different workloads – NLP, vision, reinforcement learning – place unique demands on GPUs. Future schedulers will optimize based on model architecture as well as workload size.

- Energy-aware scheduling: With sustainability becoming a priority, schedulers will consider not only performance and cost but also the energy profile of GPU clusters.

These advancements will make GPU scheduling an even more central part of enterprise MLOps, ensuring that as AI models scale, infrastructure can keep pace.
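Proactive allocation does not have to start with reinforcement learning. As a deliberately simple stand-in for the predictive approaches mentioned above, the sketch below forecasts GPU demand with an exponentially weighted moving average and computes how many extra GPUs to bring up ahead of time. The function names, the smoothing factor and the 20% headroom are illustrative assumptions.

```python
import math


def forecast_gpu_demand(history, alpha=0.5):
    """Exponentially weighted moving average over past GPU demand samples."""
    if not history:
        raise ValueError("history must not be empty")
    estimate = history[0]
    for observed in history[1:]:
        # Recent samples count more: alpha weights the newest observation.
        estimate = alpha * observed + (1 - alpha) * estimate
    return estimate


def gpus_to_prewarm(history, provisioned, headroom=0.2, alpha=0.5):
    """Extra GPUs to provision so forecast demand plus headroom is covered."""
    target = math.ceil(forecast_gpu_demand(history, alpha) * (1 + headroom))
    return max(0, target - provisioned)


# Hypothetical demand samples (GPUs in use per interval), oldest first.
demand = [4, 8, 8, 16]
print(forecast_gpu_demand(demand))
print(gpus_to_prewarm(demand, provisioned=10))
```

A moving average only reacts to trends already visible in the data; the learned schedulers the article anticipates would aim to capture seasonality and workload-specific patterns as well.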

Final thoughts

For modern enterprises, GPUs are too valuable to be left unmanaged. Without effective scheduling, the result is underutilized resources, inflated costs and unpredictable performance. With scheduling, GPUs become a shared, elastic resource that powers every stage of the AI lifecycle.

As MLOps evolves, GPU scheduling will define how efficiently organizations can train, deploy and maintain models at scale. Cloud platforms like GMI Cloud are leading this shift, offering intelligent, automated scheduling that eliminates bottlenecks and ensures that every workload gets the GPU power it needs.

For CTOs and ML teams, investing in platforms with advanced GPU scheduling capabilities might just be the key to unlocking reliable, scalable, and cost-effective AI in production.

Frequently Asked Questions About GPU Scheduling in Next-Generation MLOps

1. What is GPU scheduling in MLOps, and why does it matter now?

GPU scheduling is the policy and tooling that decide which workloads get which GPUs, when, and for how long. As multiple teams run very different AI jobs (weeks-long multi-GPU training, short fine-tunes, bursty real-time inference), scheduling prevents chaos: it boosts utilization, controls cost, and keeps critical, latency-sensitive workloads responsive.

2. How does GPU scheduling improve utilization and cost efficiency across AI workloads?

Modern schedulers go beyond “one job per GPU.” They support fractional GPU allocation, multi-tenant sharing, and dynamic preemption so small jobs can use slices while large training tasks consume full devices. By matching resource size to need, and reallocating as demand changes, clusters sustain higher utilization instead of leaving expensive GPUs idle.

3. How is scheduling handled differently for training, inference, and experimentation?

- Training: Schedulers spread jobs across nodes to minimize queueing and place them to reduce communication overhead during distributed runs.

- Inference: Schedulers reserve capacity or auto-scale for bursty, low-latency traffic so user-facing apps stay responsive.

- Experimentation: Schedulers distribute GPUs fairly across many parallel experiments so one team’s trials don’t monopolize the fleet.

Throughout, monitoring hooks provide visibility to reallocate proactively as patterns shift.

4. What do “fairness” and “priority” mean in a GPU scheduler for MLOps?

They mean aligning GPUs with business impact. A production inference service may be guaranteed first access, while a research experiment can tolerate delays. Priority-based and latency-aware scheduling ensures critical paths get immediate GPU time while less urgent jobs fill the gaps, which raises overall throughput without starving anyone.

5. How do cloud platforms approach GPU scheduling, and what’s the GMI Cloud angle?

Cloud-native platforms pair autoscaling with workload-aware orchestration so GPUs are provisioned where they deliver the most value. In the article’s example, GMI Cloud bakes scheduling into its GMI Cloud Inference Engine, automatically balancing workloads across clusters for low-latency inference and cost-efficient batch training. Developers express requirements via APIs; the platform provisions the right GPUs and abstracts away the who-gets-what debate.