This article explains how MLOps teams can successfully transition from small-scale experimentation to enterprise-grade production by managing GPU resources effectively.

What you’ll learn:

- Why GPU demands differ between research experiments and production workloads

- Key strategies for GPU allocation, including reserved, shared, and fractional approaches

- The role of intelligent scheduling and preemption in balancing priorities

- How monitoring and observability optimize both performance and cost

- Best practices for bridging silos between research and production teams

- Cloud vs. on-prem GPU management trade-offs and hybrid strategies

- Proven practices that turn GPU resource management into a competitive advantage

For many machine learning teams, the journey of building an AI system begins with small-scale experimentation. A data scientist might start by running a notebook on a single GPU, testing a new model architecture on a subset of data, and sharing quick results with colleagues. These initial steps are about proving feasibility and validating ideas, not yet about efficiency or scale. But as projects move beyond proof of concept and into serious development, infrastructure requirements shift dramatically. Training deep networks often requires multiple GPUs running in parallel to complete jobs within reasonable timeframes. Inference workloads, meanwhile, must deliver low-latency responses across distributed systems. Suddenly, GPU resources are no longer just tools for exploration – they become the backbone of an enterprise-scale machine learning operation.

This shift from experimentation to production is where MLOps proves its value. Just as DevOps revolutionized software engineering by making deployments repeatable and scalable, MLOps ensures that AI models move smoothly from research into production environments.

Central to this transition is GPU resource management. Without it, teams risk wasted compute, runaway costs and bottlenecks that stall innovation. With it, GPUs transform into a shared, elastic resource that powers experimentation, training, and inference at scale.

The GPU resource challenge in MLOps

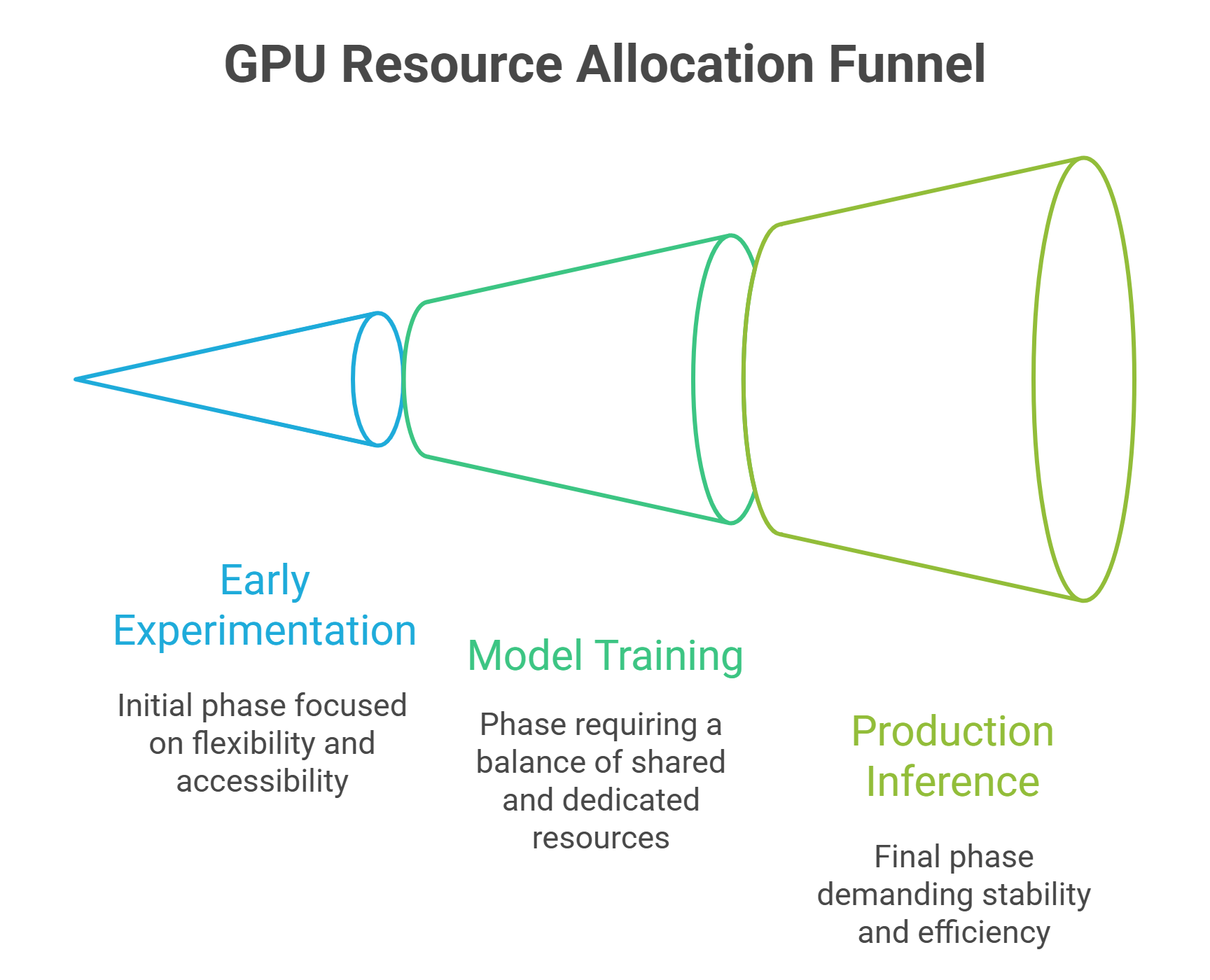

Experimentation and production place very different demands on infrastructure. In early development, workloads are unpredictable – researchers test ideas quickly, run multiple experiments in parallel, and frequently change hyperparameters or datasets. These jobs don’t always require maximum GPU performance, but they demand flexibility and accessibility.

Production workloads, on the other hand, are defined by stability and reliability. Inference systems must meet strict latency requirements, and training pipelines must scale without interruption. Here, resource management shifts from convenience to efficiency: GPUs must be utilized as fully as possible, with minimal idle time.

Balancing these competing demands is a core challenge for MLOps. The same GPU cluster must serve researchers experimenting with prototypes and engineers deploying mission-critical inference services. Left unmanaged, this tension leads to conflicts over resources, wasted compute cycles and frustrated teams.

Resource allocation strategies

Effective GPU management starts with clear allocation strategies. Several models are commonly used in MLOps environments:

- Reserved allocation: Assigning GPUs to specific teams or projects provides predictability but risks underutilization when resources sit idle.

- Shared pools: A common pool of GPUs allows jobs to be scheduled dynamically, improving utilization but requiring strong governance to avoid resource conflicts.

- Fractional GPUs: Advanced orchestration systems now support slicing GPUs into smaller units, allowing multiple lightweight jobs to share a single card without interfering with each other.

Each approach has trade-offs, and the right choice depends on the stage of the AI lifecycle. Early experimentation often benefits from fractional or shared pools, while production inference may require dedicated allocation to guarantee consistent performance.

Scheduling as the backbone

At the heart of resource management lies GPU scheduling. Scheduling systems ensure that jobs are queued and executed according to priority, availability and workload type. For example, a mission-critical fraud detection system may always receive priority access to GPUs, while a batch training job can wait in the queue.

Next-generation schedulers also support preemption, allowing high-priority workloads to interrupt lower-priority ones. This prevents critical applications from being delayed while still enabling efficient use of available resources. Integration with orchestration tools like Kubernetes ensures that scheduling policies are applied consistently across distributed environments.

Monitoring and observability

Resource management doesn’t stop at allocation – it requires continuous monitoring. Tracking GPU utilization, memory consumption, and job latency provides visibility into how resources are being used.

For experimentation, monitoring helps teams identify inefficiencies such as underutilized GPUs or jobs running on hardware that exceeds their needs. For production, monitoring ensures that workloads meet service-level agreements and provides early warning of potential bottlenecks.

Observability also enables cost optimization. By tracking not just performance but also the financial impact of GPU usage, MLOps teams can align infrastructure decisions with business goals.

Bridging experimentation and production

The transition from experimentation to production often creates silos between research and engineering teams. Researchers may use different frameworks, datasets or workflows than production systems, leading to friction when models are handed off.

MLOps platforms help bridge this gap by standardizing workflows and providing consistent GPU access across environments. A researcher experimenting on a small GPU instance can, with minimal changes, scale their model to a multi-GPU cluster for production training. Similarly, the same scheduling and monitoring tools apply across the lifecycle, ensuring continuity from prototype to deployment.

This continuity reduces time-to-market for new models and creates a more collaborative culture between teams. Instead of debating over resources, teams align on shared infrastructure that supports both innovation and stability.

Cloud vs. on-prem GPU management

Enterprises face a critical decision when managing GPU resources: should they rely on on-prem hardware or cloud-based infrastructure?

On-prem systems provide full control and may reduce costs for predictable, high-volume workloads. However, they are limited by fixed capacity. Spikes in demand – such as a sudden surge in inference requests – can overwhelm local clusters, leaving teams without the compute they need.

Cloud-based GPU platforms offer elasticity, scaling resources up or down based on workload. This flexibility is particularly valuable in experimentation, where demand fluctuates, and in production, where uptime is critical. By outsourcing infrastructure management, teams can focus on optimizing workflows rather than maintaining hardware.

In practice, many enterprises adopt hybrid strategies, using on-prem GPUs for steady workloads and cloud GPUs for peak demand or specialized training. Effective resource management requires orchestration across both environments, ensuring seamless scaling.

Best practices for GPU resource management

Through experience, leading MLOps teams have developed a set of best practices for managing GPU resources effectively:

- Set clear priorities: Define which workloads require guaranteed GPU access and which can tolerate delays. Align these priorities with business objectives.

- Right-size jobs: Match workloads to appropriate GPU types and configurations. Avoid over-provisioning by running lightweight jobs on fractional GPUs.

- Automate scaling: Use cloud autoscaling to respond to demand in real time, preventing both bottlenecks and idle resources.

- Unify monitoring: Implement observability tools that track both utilization and cost across experimentation and production environments.

- Foster collaboration: Break down silos between research and production by standardizing workflows and resource policies.

By following these practices, enterprises can turn GPU management from a source of friction into a competitive advantage. Platforms like GMI Cloud make this even more attainable, with features such as autoscaling, role-based access, monitoring dashboards and flexible GPU configurations built directly into the infrastructure. Instead of piecing together these capabilities in-house, ML teams can rely on a cloud environment that already incorporates them, accelerating both experimentation and production.

Final thoughts

Managing GPU resources in MLOps is not just about saving money or preventing bottlenecks – it is about enabling innovation. Experimentation thrives when researchers have frictionless access to GPUs, and production succeeds when resources are allocated with precision. The challenge for enterprises is to create a system that balances both worlds.

GPU cloud platforms like GMI Cloud make this possible. By providing elastic scaling, intelligent scheduling and integrated monitoring, they allow ML teams to move fluidly from early experimentation to full-scale production. For CTOs, the result is confidence that GPU resources are being used efficiently, strategically and in ways that drive real business impact.

Frequently Asked Questions About Managing GPU Resources in MLOps

1. Why is GPU resource management so critical when moving from experimentation to production?

Because the demands change completely. Early on, teams need flexible, easy access to run lots of small, fast experiments. In production, you need stability, high utilization, and low-latency inference. Without clear management, GPUs sit idle, costs run away, and critical workloads get blocked; with it, GPUs become a shared, elastic backbone for experimentation, training, and inference at scale.

2. Which GPU allocation strategy should we use: reserved, shared pool, or fractional GPUs?

t depends on the stage and workload:

- Reserved allocation gives predictability (great for steady, production inference) but risks underutilization.

- Shared pools improve overall utilization by scheduling jobs dynamically—best when multiple teams compete for capacity.

- Fractional GPUs let several lightweight jobs share one card—ideal for early experimentation and quick prototypes.

3. What does GPU scheduling actually do in an MLOps stack?

Scheduling is the backbone. It queues and places jobs by priority, availability, and workload type—so a mission-critical, low-latency inference service can preempt a lower-priority batch training job. Integrated with orchestrators like Kubernetes, modern schedulers also support preemption and consistent policy enforcement across distributed environments.

4. What should we monitor to keep performance high and costs in check?

Continuously track GPU utilization, memory consumption, and job latency, then pair that with cost visibility. For experimentation, this reveals over-provisioned runs (e.g., small jobs on big GPUs). For production, it validates SLAs and flags bottlenecks early so you can reallocate or scale before users feel it.

5. Cloud or on-prem for GPU management—and what about hybrid?

On-prem offers control and can be cost-effective for predictable, high-volume workloads, but capacity is fixed.

Cloud GPU platforms add elasticity—scale up for spikes (training or sudden inference demand) and scale down to avoid idle spend.

Most enterprises land on hybrid: steady workloads on-prem, bursts and specialized training in the cloud—coordinated by unified orchestration.