As AI models grow larger and more complex, the demands on data pipelines are reaching new extremes. Training a billion-parameter natural language model or a computer vision system with millions of annotated images requires not just raw GPU power but also the ability to move, process and manage data at scale. Without optimized data pipelines, even the most powerful GPUs sit idle, waiting for input.

Cloud platforms have become the natural environment for large-scale AI training, offering elasticity, distributed storage and high-performance compute. But cloud infrastructure alone is not enough – the efficiency of your data pipelines often determines whether training completes in days or weeks, and whether costs spiral out of control or stay manageable. For CTOs and ML teams, optimizing these pipelines is a critical step in turning ambitious AI projects into practical results.

The unique challenges of AI data pipelines

Unlike traditional data processing, AI training pipelines have specific requirements that amplify existing challenges:

- High throughput: Training requires a continuous flow of large batches of data to GPUs. Any bottleneck in reading, transforming or streaming data slows down the entire system.

- Low latency: When GPUs wait for input, training efficiency plummets. Minimizing the delay between data storage and GPU consumption is critical.

- Scalability: AI datasets grow constantly, and pipelines must scale to handle petabytes of information without breaking down.

- Heterogeneity: Data often comes from diverse sources – text, images, video, sensor readings – and must be preprocessed consistently before entering the training loop.

Optimizing for these factors requires more than just adding hardware. It involves careful design of how data is ingested, transformed, stored and delivered to GPUs in a way that aligns with the cloud’s distributed nature.
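To make the throughput requirement concrete, a back-of-envelope check multiplies the number of GPUs by the samples each one consumes per second and by the average stored size of a sample. The function below is a minimal sketch. Its name and the figures in the example (64 GPUs, 2,000 images/s per GPU, about 110 KB per image) are hypothetical placeholders, not benchmarks.

```python
def required_read_bandwidth_gb_per_s(num_gpus: int,
                                     samples_per_sec_per_gpu: float,
                                     avg_sample_bytes: float) -> float:
    """Sustained read bandwidth (gigabytes per second) needed to keep every GPU fed.

    All inputs are assumptions you supply: measured training throughput per GPU
    and the average on-storage size of one sample (after compression, if any).
    """
    bytes_per_sec = num_gpus * samples_per_sec_per_gpu * avg_sample_bytes
    return bytes_per_sec / 1e9


# Hypothetical example: 64 GPUs, each consuming 2,000 images/s at ~110 KB per image.
print(f"{required_read_bandwidth_gb_per_s(64, 2_000, 110_000):.2f} GB/s")  # 14.08 GB/s
```

If the result exceeds what your storage tier can sustain, I/O rather than compute will set the pace of training, no matter how many GPUs you add.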

Designing for throughput and latency

One of the biggest mistakes in large-scale training is underestimating the impact of I/O bottlenecks. GPUs are extremely fast, but if data is not fed into them efficiently, their utilization drops. Optimizing throughput and latency comes down to a few best practices:

- Use parallel I/O: Instead of loading data sequentially, pipelines should read from storage in parallel, distributing the workload across multiple threads or processes.

- Adopt streaming architectures: Pre-loading entire datasets is often impractical. Streaming allows data to flow in smaller chunks, keeping GPUs busy while the pipeline fetches more in the background.

- Leverage caching: Frequently used datasets or preprocessed features should be cached close to compute nodes, reducing the need for repeated long-distance transfers.
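Parallel I/O and streaming can be combined in a few lines. The sketch below is a generic, framework-free illustration. It assumes a hypothetical `read_fn` that fetches one object (from local disk, a network file system or an object store client). A thread pool issues reads in parallel, and a bounded window of in-flight requests turns the results into a stream that the training loop consumes while later items are still downloading. Frameworks expose the same ideas as configuration; in PyTorch, for example, the relevant `DataLoader` parameters are `num_workers` and `prefetch_factor`.

```python
import time
from collections import deque
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Deque, Iterable, Iterator, TypeVar

T = TypeVar("T")


def parallel_stream(keys: Iterable[str],
                    read_fn: Callable[[str], T],
                    num_workers: int = 8,
                    max_in_flight: int = 32) -> Iterator[T]:
    """Read items concurrently and yield them in their original order.

    Parallel I/O: up to `num_workers` reads run at once on a thread pool.
    Streaming: at most `max_in_flight` reads are outstanding, so memory stays
    bounded however large the dataset is, and the consumer receives early
    items while later ones are still being fetched.
    """
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        window: Deque[Future] = deque()
        for key in keys:
            window.append(pool.submit(read_fn, key))
            if len(window) >= max_in_flight:
                # Wait only for the oldest read; newer ones keep running.
                yield window.popleft().result()
        # All keys submitted: drain the reads still in flight.
        while window:
            yield window.popleft().result()


def fake_read(key: str) -> bytes:
    time.sleep(0.01)  # hypothetical stand-in for storage/network latency
    return key.encode()


for blob in parallel_stream((f"shard-{i:05d}" for i in range(100)), fake_read):
    pass  # hand `blob` to decoding and then to the training step
```

Threads fit here because the work is I/O-bound and the GIL is released while waiting; CPU-heavy decoding is better moved to separate processes. Keeping `max_in_flight` at or above `num_workers` ensures every worker stays busy.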

In cloud environments, where storage and compute may reside in different regions or availability zones, minimizing data transfer latency is especially important. Techniques such as colocating storage with compute clusters and using high-bandwidth networking can significantly reduce training time.
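A rough model shows why colocation matters. Every object read pays at least one network round trip, and those round trips add up quickly when a dataset consists of millions of small files. The sketch below is a simplified estimate, not a benchmark. The latencies (2 ms for a nearby store, 60 ms for a distant region), the bandwidth and the dataset shape in the example are hypothetical values chosen only to show the effect.

```python
def estimated_read_seconds(total_bytes: float, num_objects: int,
                           bandwidth_bytes_per_s: float,
                           request_latency_s: float,
                           concurrency: int) -> float:
    """Rough time to read a dataset once (simplified model, an assumption).

    - Each object costs one round trip of `request_latency_s`, and up to
      `concurrency` requests are in flight at once.
    - Payload transfer is limited by aggregate `bandwidth_bytes_per_s`.
    Adding the two terms is pessimistic, since real systems partly overlap them.
    """
    latency_cost = num_objects * request_latency_s / concurrency
    transfer_cost = total_bytes / bandwidth_bytes_per_s
    return latency_cost + transfer_cost


TB = 1e12
scenarios = {
    # Hypothetical: 1 TB stored as 10 million ~100 KB files, 5 GB/s, 64 concurrent requests.
    "same zone, small files": (TB, 10_000_000, 5e9, 0.002, 64),
    "cross-region, small files": (TB, 10_000_000, 5e9, 0.060, 64),
    # The same bytes packed into 10,000 shards of ~100 MB each.
    "cross-region, large shards": (TB, 10_000, 5e9, 0.060, 64),
}
for name, args in scenarios.items():
    print(f"{name:28s} ~{estimated_read_seconds(*args) / 60:6.1f} min per epoch")
```

Under these assumptions, one pass takes about 8.5 minutes from a nearby store but about 160 minutes from a distant one, almost all of it round-trip latency rather than bandwidth. Packing the same bytes into large shards brings the distant case down to about 3.5 minutes, which is why colocation and sharding reinforce each other.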

Preprocessing at scale

Raw data is rarely ready for training. Images may need resizing, text must be tokenized, and tabular data often requires normalization or feature engineering. At small scale, preprocessing can be handled ad hoc, but at enterprise scale, this becomes a major engineering challenge.

To optimize preprocessing:

- Distribute preprocessing tasks: Tools like Apache Spark, Ray or Dask can parallelize preprocessing across large clusters, transforming terabytes of raw data efficiently.

- Pipeline integration: Preprocessing should be integrated into the pipeline so that data transformations happen seamlessly, without creating unnecessary intermediate storage bottlenecks.

- Hardware acceleration: In some cases, preprocessing itself can benefit from GPU acceleration, especially for tasks like image decoding or augmentation.
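The shape of distributed, integrated preprocessing can be sketched on a single machine. The example below uses Python's `concurrent.futures` as a stand-in for Spark, Ray or Dask: it is not their API, but the pattern (map one function over many shards on a pool of workers) is the one those frameworks spread across a cluster. Each stage is a generator, so records flow from one transformation to the next without intermediate files. The stage names, the in-memory shards and the whitespace "tokenizer" are hypothetical placeholders.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor
from typing import Dict, Iterable, Iterator, List

Record = Dict[str, object]


# Each stage is a generator: records stream through one at a time, so no
# intermediate dataset is written to storage between steps.
def drop_empty(records: Iterable[Record]) -> Iterator[Record]:
    for r in records:
        if str(r["text"]).strip():
            yield r


def lowercase(records: Iterable[Record]) -> Iterator[Record]:
    for r in records:
        yield {**r, "text": str(r["text"]).lower()}


def tokenize(records: Iterable[Record]) -> Iterator[Record]:
    for r in records:
        # Whitespace split is a placeholder for a real tokenizer.
        yield {**r, "tokens": str(r["text"]).split()}


STAGES = (drop_empty, lowercase, tokenize)


def process_shard(shard: List[Record]) -> List[Record]:
    """Run every stage over one shard; this is the unit of work sent to a worker."""
    stream: Iterable[Record] = shard
    for stage in STAGES:
        stream = stage(stream)
    return list(stream)


def preprocess_shards(shards: List[List[Record]], max_workers: int = 4,
                      use_processes: bool = True) -> List[List[Record]]:
    """Fan shards out to a worker pool; results come back in shard order."""
    pool_cls = ProcessPoolExecutor if use_processes else ThreadPoolExecutor
    with pool_cls(max_workers=max_workers) as pool:
        return list(pool.map(process_shard, shards))


if __name__ == "__main__":  # guard required when worker processes are spawned
    shards = [[{"text": "Hello World"}, {"text": "  "}], [{"text": "Ray AND Dask"}]]
    print(preprocess_shards(shards))
    # [[{'text': 'hello world', 'tokens': ['hello', 'world']}],
    #  [{'text': 'ray and dask', 'tokens': ['ray', 'and', 'dask']}]]
```

In a real job each shard would be a file path: the worker reads it, runs the stages and writes the result, so only finished training-ready data ever lands in storage.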

Well-optimized preprocessing pipelines not only speed up training but also ensure consistency, which is vital for reproducibility and compliance in regulated industries.
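Normalization shows why consistency needs care at scale. If each shard were scaled with its own mean and standard deviation, the same raw value would map to different features depending on which shard it landed in. A distributed job can avoid this by having each worker summarize its shard, merging the summaries into global statistics, and applying the same values everywhere. The sketch below uses the standard pairwise merge of count, mean and sum of squared deviations (the parallel variance update of Chan et al.), which is numerically more stable than accumulating raw sums of squares. The function names and sample data are illustrative.

```python
import math
from dataclasses import dataclass
from functools import reduce
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Moments:
    count: int
    mean: float
    m2: float  # sum of squared deviations from the mean


EMPTY = Moments(0, 0.0, 0.0)


def shard_moments(values: Sequence[float]) -> Moments:
    """Map step: summarize one shard; can run on any worker."""
    n = len(values)
    if n == 0:
        return EMPTY
    mean = sum(values) / n
    return Moments(n, mean, sum((v - mean) ** 2 for v in values))


def merge(a: Moments, b: Moments) -> Moments:
    """Reduce step: combine two summaries without revisiting the raw data."""
    if a.count == 0:
        return b
    if b.count == 0:
        return a
    n = a.count + b.count
    delta = b.mean - a.mean
    mean = a.mean + delta * b.count / n
    m2 = a.m2 + b.m2 + delta ** 2 * a.count * b.count / n
    return Moments(n, mean, m2)


def fit_normalizer(shards: List[Sequence[float]]) -> Tuple[float, float]:
    """Global mean and (population) standard deviation across all shards."""
    total = reduce(merge, map(shard_moments, shards), EMPTY)
    if total.count == 0:
        return 0.0, 1.0
    std = math.sqrt(total.m2 / total.count)
    return total.mean, (std if std > 0 else 1.0)  # guard constant features


def normalize(values: Sequence[float], mean: float, std: float) -> List[float]:
    return [(v - mean) / std for v in values]


shards = [[1.0, 2.0, 3.0], [4.0, 5.0], [6.0]]
mean, std = fit_normalizer(shards)  # fitted once, over all shards
normalized = [normalize(s, mean, std) for s in shards]
```

Storing the fitted `mean` and `std` alongside the model lets retraining and inference apply exactly the same transformation.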

Storage strategies for large datasets

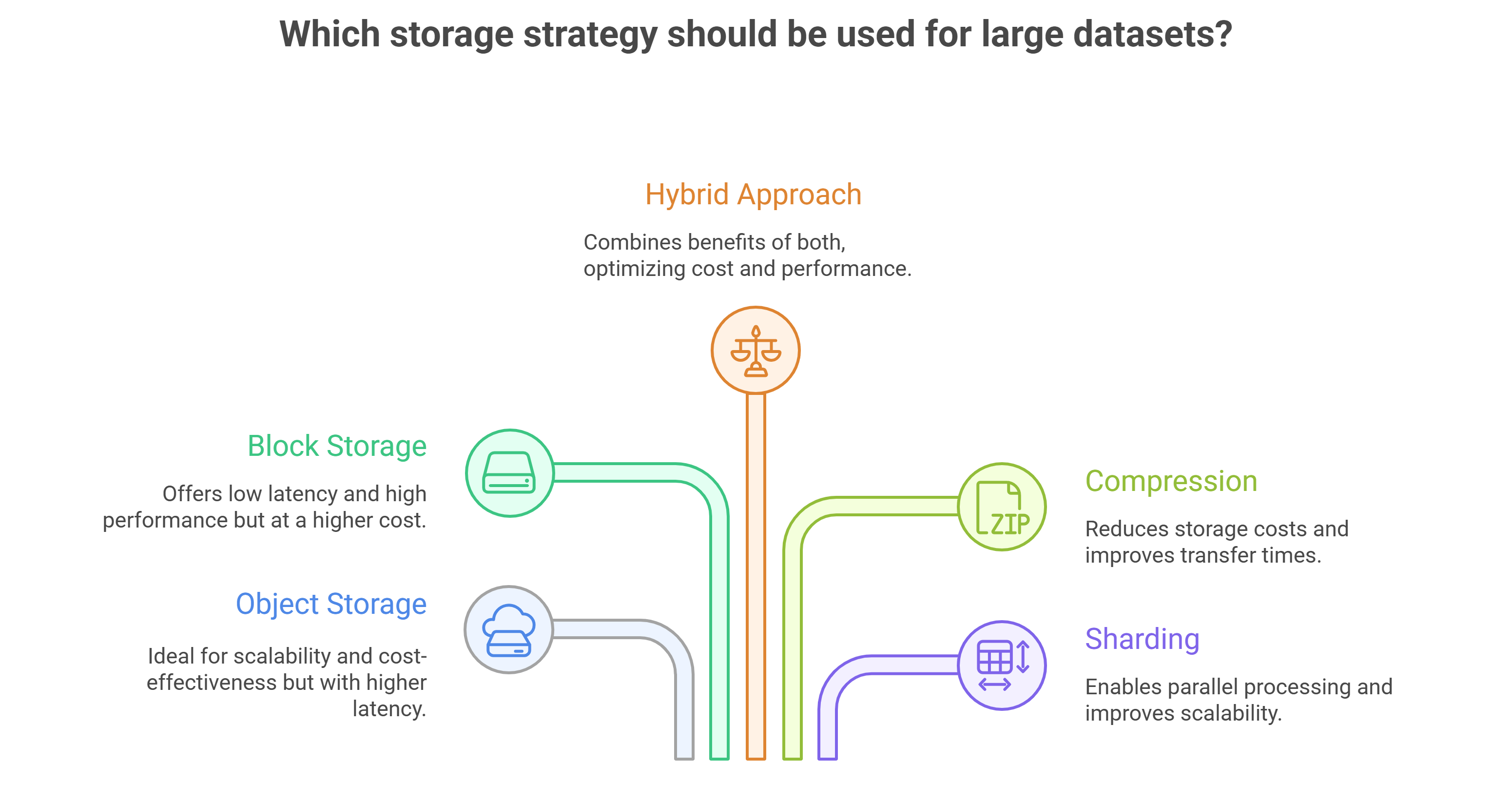

Choosing the right storage architecture is another critical step in pipeline optimization. Options include:

- Object storage: Cloud-native object stores like Amazon S3 or Google Cloud Storage are scalable and cost-effective, but may introduce higher latency if not carefully managed.

- Block storage: Provides low-latency access and is often colocated with compute, but may be more expensive at scale.

- Hybrid approaches: Many enterprises use a layered approach – object storage for long-term archiving and block or file storage for active training datasets.
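A hybrid layout usually includes a staging step that copies the shards needed for the current run from object storage to fast local or block storage. The sketch below assumes an S3-style client: boto3's S3 client provides `download_file(Bucket, Key, Filename)`, and any object with that method can be passed in. `LocalDirClient`, the bucket name and the directory layout are hypothetical stand-ins so the example runs without cloud credentials. Shards already staged are reused, and each download lands in a temporary `.partial` file that is renamed only when complete, so an interrupted run does not leave a truncated shard behind.

```python
import os
import shutil
import tempfile
from pathlib import Path
from typing import Any, Iterable, List


class LocalDirClient:
    """Hypothetical stand-in for an S3 client that 'downloads' from a local directory."""

    def __init__(self, source_dir: str) -> None:
        self.source_dir = Path(source_dir)
        self.downloads = 0

    def download_file(self, Bucket: str, Key: str, Filename: str) -> None:
        shutil.copyfile(self.source_dir / Bucket / Key, Filename)
        self.downloads += 1


def stage_active_shards(s3_client: Any, bucket: str, keys: Iterable[str],
                        scratch_dir: str) -> List[Path]:
    """Copy the shards for the current run to fast local storage, reusing existing copies.

    Keys are assumed to be trusted relative paths (no absolute paths or '..').
    """
    root = Path(scratch_dir)
    local_paths = []
    for key in keys:
        dest = root / key
        if not dest.exists():
            dest.parent.mkdir(parents=True, exist_ok=True)
            tmp = dest.with_name(dest.name + ".partial")
            s3_client.download_file(Bucket=bucket, Key=key, Filename=str(tmp))
            os.replace(tmp, dest)  # rename only after the download completed
        local_paths.append(dest)
    return local_paths


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as src, tempfile.TemporaryDirectory() as scratch:
        shard = Path(src, "datasets", "train", "shard-00000.bin")
        shard.parent.mkdir(parents=True)
        shard.write_bytes(b"example payload")
        client = LocalDirClient(src)  # with real data: boto3.client("s3")
        stage_active_shards(client, "datasets", ["train/shard-00000.bin"], scratch)
        stage_active_shards(client, "datasets", ["train/shard-00000.bin"], scratch)
        print(client.downloads)  # 1: the second call reuses the staged copy
```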

Compression and sharding strategies can also improve pipeline efficiency, reducing transfer times and allowing parallel access to different parts of the dataset.
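Sharding and compression can be sketched with the standard library alone. The example below groups records into fixed-size, gzip-compressed JSON Lines files, then assigns every `world_size`-th shard to each worker so that workers read disjoint files in parallel. The format, the `shard-00000.jsonl.gz` naming scheme and the `rank`/`world_size` parameters (borrowed from distributed-training convention) are assumptions for illustration; production pipelines often use binary or columnar formats instead.

```python
import gzip
import json
from pathlib import Path
from typing import Iterable, Iterator, List


def write_shards(records: Iterable[dict], out_dir: str,
                 records_per_shard: int) -> List[Path]:
    """Split a dataset into gzip-compressed JSON Lines shards of fixed size."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths: List[Path] = []
    buffer: List[dict] = []

    def flush() -> None:
        path = out / f"shard-{len(paths):05d}.jsonl.gz"
        with gzip.open(path, "wt", encoding="utf-8") as f:
            for rec in buffer:
                f.write(json.dumps(rec) + "\n")
        paths.append(path)
        buffer.clear()

    for rec in records:
        buffer.append(rec)
        if len(buffer) == records_per_shard:
            flush()
    if buffer:  # final, possibly smaller shard
        flush()
    return paths


def shards_for_worker(paths: List[Path], rank: int, world_size: int) -> List[Path]:
    """Round-robin assignment: each worker gets a disjoint subset of shards."""
    return paths[rank::world_size]


def read_shard(path: Path) -> Iterator[dict]:
    """Stream records back out of one compressed shard."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line in f:
            yield json.loads(line)
```

Round-robin assignment leaves some workers with one shard more than others when the count does not divide evenly, so it helps to write many more shards than there are workers.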

Orchestration and automation

Modern AI training requires orchestration tools that can automate pipeline management. Kubernetes and similar systems make it possible to scale pipeline components dynamically, adjust to workload changes, and recover gracefully from failures.
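In Kubernetes, dynamic scaling of a deployment is typically handled by the HorizontalPodAutoscaler, whose documented rule is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no action taken when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reimplements that rule for illustration only; the real controller adds stabilization windows and other behavior. Using queued batches per preprocessing worker as the metric is an assumption, and the replica bounds and numbers are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 100, tolerance: float = 0.1) -> int:
    """Replica count per the documented HorizontalPodAutoscaler rule (simplified)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no scaling, avoids flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical: 4 preprocessing workers each have ~180 queued batches; target is 50.
print(desired_replicas(current_replicas=4, current_metric=180, target_metric=50))  # 15
```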

Integration with MLOps frameworks ensures that data pipelines connect seamlessly with model training, deployment and monitoring. This creates a continuous workflow where new data can be ingested, preprocessed and used for retraining models without manual intervention.
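Underneath the tooling, this continuous workflow comes down to a decision an orchestrator makes on a schedule or on new-data events: has enough new, already-preprocessed data arrived to justify retraining? The sketch below shows only that decision logic, under assumptions. `RetrainPolicy`, its threshold and the shard names are hypothetical, and `launch_training_job` stands in for whatever your MLOps or workflow tool uses to submit a run.

```python
from dataclasses import dataclass, field
from typing import Iterable, Set


@dataclass
class RetrainPolicy:
    """Hypothetical trigger: retrain once enough new preprocessed shards exist."""
    min_new_shards: int
    trained_on: Set[str] = field(default_factory=set)

    def new_shards(self, available: Iterable[str]) -> Set[str]:
        return set(available) - self.trained_on

    def should_retrain(self, available: Iterable[str]) -> bool:
        return len(self.new_shards(available)) >= self.min_new_shards

    def mark_trained(self, shards: Iterable[str]) -> None:
        # Call only after the training run has succeeded.
        self.trained_on.update(shards)


policy = RetrainPolicy(min_new_shards=3, trained_on={"shard-0", "shard-1"})
available = ["shard-0", "shard-1", "shard-2", "shard-3", "shard-4"]
if policy.should_retrain(available):
    batch = sorted(policy.new_shards(available))
    # launch_training_job(batch)  # hypothetical hook into your orchestrator
    policy.mark_trained(batch)
print(policy.should_retrain(available))  # False: nothing new since the last run
```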

Automation also plays a key role in cost control. By automatically shutting down idle resources and scaling only when demand requires, orchestration systems prevent unnecessary spending on cloud infrastructure.
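Idle shutdown can be expressed as a small selection rule. The sketch below picks nodes whose last recorded activity is older than a timeout while keeping a small warm pool of the most recently active nodes. `idle_nodes`, the timeout and the node names are hypothetical. The actual shutdown call is left out; in practice it would be a cloud provider API or a managed component such as the Kubernetes Cluster Autoscaler, which performs its own scale-down of unneeded nodes.

```python
import time
from typing import Dict, List, Optional


def idle_nodes(last_active: Dict[str, float], idle_timeout_s: float,
               now: Optional[float] = None, keep_min: int = 0) -> List[str]:
    """Return nodes idle for longer than `idle_timeout_s`.

    `last_active` maps a node name to the last time (seconds) it ran work.
    The `keep_min` most recently active nodes are never returned, so a warm
    minimum survives scale-in.
    """
    now = time.time() if now is None else now
    # Most recently active first, so the warm pool is the freshest nodes.
    ranked = sorted(last_active, key=lambda n: last_active[n], reverse=True)
    return [n for n in ranked[keep_min:] if now - last_active[n] > idle_timeout_s]


# Hypothetical activity timestamps; "now" is fixed so the example is deterministic.
last_seen = {"gpu-node-a": 1_000.0, "gpu-node-b": 400.0, "gpu-node-c": 100.0}
print(idle_nodes(last_seen, idle_timeout_s=300, now=1_000.0, keep_min=1))
# ['gpu-node-b', 'gpu-node-c']
```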

Monitoring and observability

Optimizing pipelines is not a one-time task – it requires continuous monitoring. Observability tools provide insights into where bottlenecks occur, whether GPUs are underutilized, and how data flows through the system.

Key metrics to monitor include:

- Data loading times

- GPU utilization rates

- Network bandwidth consumption

- Preprocessing latency

By identifying weak points, teams can refine pipelines iteratively, ensuring performance keeps pace as models and datasets grow.
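Of these metrics, the split between time spent waiting for data and time spent computing is the most direct signal that GPUs are starving. The sketch below wraps any batch iterator and accumulates both. `InputStallMeter`, `slow_loader` and `train_step` are hypothetical names, with sleeps standing in for real I/O and compute. On GPUs, kernels run asynchronously, so the step timing is only meaningful if the training step waits for the device to finish (in PyTorch, for example, via `torch.cuda.synchronize()`).

```python
import time
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


class InputStallMeter:
    """Accumulates time spent waiting for batches vs. time spent in the step."""

    def __init__(self) -> None:
        self.wait_s = 0.0
        self.compute_s = 0.0
        self.steps = 0

    def wrap(self, batches: Iterable[T]) -> Iterator[T]:
        it = iter(batches)
        while True:
            t0 = time.perf_counter()
            try:
                batch = next(it)
            except StopIteration:
                return
            t1 = time.perf_counter()
            self.wait_s += t1 - t0
            yield batch  # the training step runs while the generator is suspended
            self.compute_s += time.perf_counter() - t1
            self.steps += 1

    @property
    def input_bound_fraction(self) -> float:
        total = self.wait_s + self.compute_s
        return self.wait_s / total if total else 0.0


def slow_loader(n: int = 5) -> Iterator[int]:
    for i in range(n):
        time.sleep(0.02)  # stand-in for reading and decoding a batch
        yield i


def train_step(batch: int) -> None:
    time.sleep(0.01)  # stand-in for a forward/backward pass


meter = InputStallMeter()
for batch in meter.wrap(slow_loader()):
    train_step(batch)
print(f"{meter.steps} steps, {meter.input_bound_fraction:.0%} of time waiting for input")
```

A fraction that stays high while GPU utilization is low points to the loading path; a fraction near zero means tuning effort is better spent elsewhere.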

The role of GPU cloud platforms

While enterprises can attempt to build and optimize their own pipelines, GPU cloud platforms provide a faster, more efficient route. Platforms like GMI Cloud are designed with high-performance data pipelines in mind, integrating optimized storage, high-bandwidth networking and autoscaling GPU clusters.

This eliminates the need for teams to reinvent the wheel. Instead of spending months tuning infrastructure, they can leverage a platform that delivers optimized pipelines out of the box, while still offering flexibility for customization.

Final thoughts

As AI models scale, the efficiency of data pipelines becomes just as critical as the compute power driving training. Bottlenecks in I/O, preprocessing or storage can undermine even the most advanced GPU clusters, wasting both time and money.

Optimizing data pipelines for the cloud requires a holistic approach: designing for throughput and latency, distributing preprocessing, choosing the right storage strategies and integrating orchestration and monitoring tools. Cloud GPU platforms provide the final piece, delivering infrastructure that scales intelligently and removes the operational burden from internal teams.

For CTOs and ML teams, the message is clear: investing in pipeline optimization is the difference between AI projects that stall in development and those that deliver real business impact at scale.

Frequently Asked Questions About Optimizing Data Pipelines for Large-Scale AI Training on the Cloud

1. Why do optimized data pipelines matter as much as GPU power for AI training?

Because GPUs are only fast when they’re fed fast. Large-scale training needs high throughput and low latency from storage to compute; otherwise GPUs sit idle waiting for input. Cloud elasticity helps, but pipeline design (ingest → transform → store → deliver) ultimately determines whether training finishes in days or drags on for weeks—and whether costs stay controlled or spiral.

2. How can we boost throughput and cut latency from storage to GPUs?

Use parallel I/O (read in multiple threads/processes), stream data in chunks instead of pre-loading entire datasets, and cache frequently used data close to your compute nodes. In cloud setups—where storage and compute may live in different regions or availability zones—colocate storage with your clusters and rely on high-bandwidth networking to keep GPUs continuously busy.

3. What’s the right way to handle preprocessing at scale?

Distribute it. Tools like Apache Spark, Ray, or Dask can parallelize tokenization, image resizing/augmentation, and tabular feature work across big clusters. Integrate these steps directly into the pipeline (avoid extra intermediate bottlenecks), and where it fits, consider GPU-accelerated preprocessing (e.g., for image decoding/augmentation). A consistent, integrated flow improves speed, reproducibility, and compliance.

4. Which cloud storage should we choose for training datasets—object, block, or hybrid?

- Object storage (e.g., Amazon S3, Google Cloud Storage): massively scalable and cost-effective, but can add latency if not managed carefully.

- Block storage: typically lower-latency and often colocated with compute, though pricier at scale.

- Hybrid: archive long-term in object storage and stage active training shards on block/file storage. Pair this with compression and sharding so multiple workers read in parallel and transfers stay lean.

5. How do orchestration and automation keep pipelines reliable and cost-efficient?

Platforms like Kubernetes let you scale pipeline components up/down, roll with workload spikes, and recover from failures gracefully. Tie your data flow into MLOps so new data is ingested, preprocessed, and retrain-ready without manual handoffs. Automate cost control, too—shut down idle resources and scale only when demand requires.