This article explains how multimodal inference is transforming AI by combining text, vision, and audio into unified systems, and explores the GPU infrastructure that makes it possible at scale.

What you’ll learn:

- Why multimodal inference is critical for real-world applications

- How GPUs enable parallel processing across text, image, and audio models

- Key infrastructure challenges such as memory, scheduling, and latency

- The advantages of cloud-based GPU platforms over on-prem systems

- Practical use cases in healthcare, finance, customer service, and autonomous systems

- Optimization strategies to reduce cost while maintaining responsiveness

- Strategic considerations for leaders adopting multimodal AI

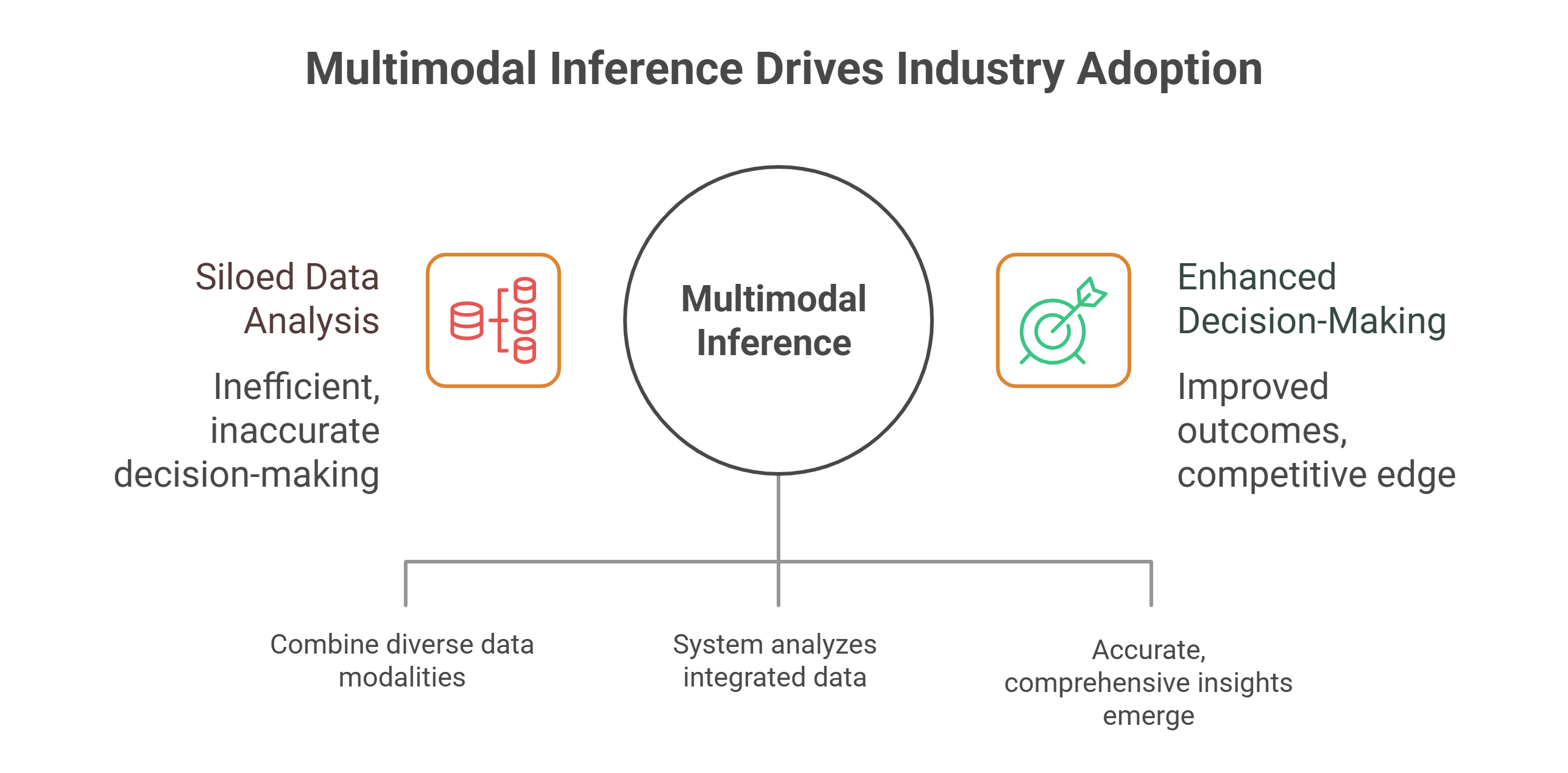

AI systems are hitting a turning point: the most valuable models aren’t those that process a single type of input, but those that fuse text, images and audio into one coherent understanding. This is multimodal inference – where language models interpret instructions, vision systems analyze visuals, and audio models capture tone or intent, all working in sync.

For CTOs and ML teams, multimodal capabilities are no longer experimental. They’re the foundation of next-generation applications – from customer support agents that can listen, read and see context at once, to healthcare tools that combine medical imaging, patient notes and spoken feedback in a single diagnostic workflow. The challenge isn’t whether these models can deliver – it’s how to power them at scale with infrastructure that keeps latency low, throughput high, and costs under control.

Why multimodal inference matters now

Most real-world problems aren’t unimodal. A fraud detection system may need to process transaction text data, match it with biometric voice confirmation, and validate video identity in one decision loop. Autonomous vehicles must combine sensor vision with driver instructions and environmental sounds. These scenarios require AI that can interpret multiple modalities in real time, aligning them into a single prediction or action.

The payoff is substantial: multimodal inference improves accuracy by cross-referencing inputs, enhances usability by creating richer interactions, and opens the door to applications that mimic how humans naturally process information. But it also amplifies the demands placed on infrastructure, multiplying requirements for memory bandwidth, latency optimization and scalability.

GPUs as the backbone of multimodal AI

GPUs provide the horsepower that makes multimodal inference practical. Their parallel architecture allows text, image and audio models to run simultaneously rather than sequentially. When the modality models don’t depend on each other’s outputs, there is no need to wait for a language model to finish before starting a vision model: the workloads run concurrently, on separate GPUs or side by side on one, and their outputs are merged into a unified response.

The thousands of cores in modern GPUs are designed for exactly the tensor operations and matrix multiplications that underlie today’s multimodal architectures. Just as important, GPUs offer flexibility: they can process heterogeneous workloads – a mix of transformer-based models, convolutional layers and spectrogram analysis – without falling into bottlenecks. For enterprise deployments, this is the difference between instant responses and frustrating lag.
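As a rough illustration of the "run together, then merge" pattern, here is a minimal Python sketch. The three encoder functions are hypothetical stand-ins that sleep to mimic inference time; they are not a real model API. Threads only illustrate the orchestration, and an actual deployment would dispatch each call to a GPU-backed model server or runtime.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for real encoders; each sleeps to mimic inference time.
def encode_text(prompt: str) -> dict:
    time.sleep(0.05)
    return {"modality": "text", "summary": f"{len(prompt.split())} tokens"}

def encode_image(pixels: bytes) -> dict:
    time.sleep(0.05)
    return {"modality": "image", "summary": f"{len(pixels)} bytes"}

def encode_audio(samples: list) -> dict:
    time.sleep(0.05)
    return {"modality": "audio", "summary": f"{len(samples)} samples"}

def infer_multimodal(prompt: str, pixels: bytes, samples: list) -> dict:
    """Launch all three encoders at once, then merge their outputs."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = [
            pool.submit(encode_text, prompt),
            pool.submit(encode_image, pixels),
            pool.submit(encode_audio, samples),
        ]
        results = [f.result() for f in futures]
    # "Fusion" here is a plain merge keyed by modality; a real system
    # would combine embeddings or feed them to a downstream model.
    return {r["modality"]: r["summary"] for r in results}

if __name__ == "__main__":
    start = time.perf_counter()
    fused = infer_multimodal("is this invoice valid", b"\x00" * 1024, [0.0] * 16000)
    print(fused, f"elapsed: {time.perf_counter() - start:.2f}s")
```

Because the three calls overlap, the elapsed time should sit close to the slowest single encoder rather than the sum of all three.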

Scaling challenges in multimodal workloads

Even with GPUs, multimodal systems present unique hurdles when scaling:

- Memory pressure: Running multiple large models together often exceeds the memory capacity of a single GPU.

- Scheduling complexity: Deciding which workloads to allocate to which GPU – and in what order – becomes critical for efficiency.

- Latency stacking: Each modality contributes its own latency. When stages run back to back, those delays add up, and the combined total can break a real-time experience.

These issues underline the importance of GPU scheduling strategies, high-bandwidth interconnects and elastic scaling. Infrastructure must be intelligent, not just powerful, to keep multimodal AI responsive under production conditions.
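The latency point is easy to see with a little arithmetic. The sketch below compares running the modality stages one after another with running them side by side and adding a fusion step. The stage timings and the `fusion_ms` value are illustrative numbers, not measurements.

```python
def sequential_latency_ms(stage_ms: dict) -> float:
    """Stages run one after another, so their delays add up."""
    return sum(stage_ms.values())

def parallel_latency_ms(stage_ms: dict, fusion_ms: float) -> float:
    """Stages run side by side, so the slowest one sets the pace,
    and the fusion step is paid once at the end."""
    return max(stage_ms.values()) + fusion_ms

# Illustrative per-modality timings in milliseconds (assumed, not measured).
stages = {"text": 40.0, "vision": 90.0, "audio": 60.0}

print(sequential_latency_ms(stages))      # 190.0
print(parallel_latency_ms(stages, 15.0))  # 105.0
```

In the parallel case the slowest modality dominates, which is why optimization effort usually pays off most on that stage.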

Cloud infrastructure for multimodal inference

On-premises infrastructure often struggles to keep up with these demands. Hardware sized for peak load sits underused the rest of the time, scaling is costly, and upgrades lag behind rapid GPU innovation. Cloud-based GPU platforms offer the agility that multimodal AI requires.

With the GPU cloud, teams can dynamically provision compute as workloads shift, allocate resources across modalities, and auto-scale during peak usage. Purpose-built inference engines add another layer of efficiency, routing requests, batching intelligently, and minimizing idle time across GPUs. The result is infrastructure that evolves as quickly as the models themselves.
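To make "batching intelligently" a little more concrete, here is a minimal sketch of one simple policy: group pending requests by modality, then cut each group into batches no larger than a fixed size. The `Request` type, the `build_batches` function and the `max_batch_size` parameter are hypothetical names for illustration; production inference engines typically also apply time-based flush rules and priorities.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Request:
    request_id: int
    modality: str    # e.g. "text", "image" or "audio"
    payload: object

def build_batches(pending: list, max_batch_size: int) -> list:
    """Group requests by modality, then split each group into
    batches of at most max_batch_size requests."""
    by_modality = defaultdict(list)
    for req in pending:
        by_modality[req.modality].append(req)

    batches = []
    for modality, reqs in by_modality.items():
        for i in range(0, len(reqs), max_batch_size):
            batches.append((modality, reqs[i:i + max_batch_size]))
    return batches

if __name__ == "__main__":
    pending = [Request(i, "text", f"prompt {i}") for i in range(5)]
    pending += [Request(10 + i, "image", b"...") for i in range(2)]
    for modality, batch in build_batches(pending, max_batch_size=4):
        print(modality, [r.request_id for r in batch])
```

Batching same-modality requests together lets one model invocation serve several users, which is where much of the reduction in idle GPU time comes from.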

Use cases driving adoption

- Healthcare: Combining imaging, text notes and audio inputs to accelerate diagnoses and improve patient care.

- Customer service: Virtual agents that transcribe speech, analyze tone and pull text-based resources simultaneously.

- Retail: Checkout systems that recognize products visually, authenticate via voice and personalize offers with transaction history.

- Finance: Fraud detection systems that evaluate text data, voice biometrics and video verification in parallel.

- Autonomous systems: Vehicles and drones integrating visual feeds, radar and spoken commands into a single control flow.

These examples highlight how multimodal inference isn’t just a technical achievement – it’s a commercial necessity for enterprises seeking to stay competitive.

Optimizing performance

Deploying multimodal inference effectively requires more than just raw compute. Key strategies include:

- Model optimization: Quantization, pruning and distillation reduce model size, usually at the cost of a small accuracy loss that should be measured on the target task.

- Pipeline parallelism: Assigning each modality’s model to its own GPU lets the stages work on different requests at the same time, which keeps throughput steady.

- Streamlined data pipelines: Preprocessing delays must be minimized so GPUs remain fully utilized.

- Continuous monitoring: Metrics like GPU utilization, latency and throughput help identify bottlenecks and guide refinements.

These practices lower costs while maintaining responsiveness, turning multimodal AI into a sustainable business capability rather than a resource drain.
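Quantization is the easiest of these techniques to show in a few lines. The sketch below applies symmetric per-tensor int8 quantization to a handful of made-up weights in plain Python. It is a toy illustration of the idea, not the scheme any particular framework uses.

```python
def quantize_int8(weights: list) -> tuple:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized: list, scale: float) -> list:
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]

# Made-up weights, for illustration only.
weights = [0.82, -1.27, 0.05, 0.40, -0.63]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(f"scale={scale:.4f}, max error={max_error:.6f}")
```

Storing each weight as one byte instead of a four-byte float32 cuts weight memory by roughly 4x, and the rounding error per weight is bounded by half the scale. Whether that error matters for end-task accuracy has to be checked per model.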

Strategic considerations for leaders

For CTOs and ML leaders, the critical questions go beyond whether multimodal inference is valuable. The focus is on adoption strategies:

- Can the infrastructure handle variable surges in multimodal demand without breaking SLAs?

- Is cost predictable, with pricing models that match workload patterns?

- How easily will multimodal inference integrate into existing MLOps pipelines?

- Are compliance and security measures strong enough for sensitive multimodal data, from healthcare images to biometric audio?

Addressing these considerations upfront prevents costly missteps and ensures multimodal AI enhances rather than complicates enterprise operations.

The road ahead

The future of enterprise AI is multimodal. Large language models paired with vision and audio capabilities are setting new benchmarks for what’s possible, from multimodal assistants to integrated diagnostic tools. As these applications grow, the burden on infrastructure will only increase.

The GPU cloud provides the adaptability needed to meet this challenge. With parallel processing, intelligent orchestration and elastic scaling, it enables multimodal inference to run in production environments at the speed users demand.

Final thoughts

Multimodal inference is a defining shift in AI’s evolution, blending text, vision and audio into systems that deliver richer, more natural and more accurate results. Yet the complexity of these workloads means that success depends on more than just deploying powerful models – it requires infrastructure engineered for responsiveness and scale.

GPUs, when deployed on inference-optimized cloud platforms, form the backbone of this capability. They allow enterprises to unify modalities in real time, scale dynamically with demand, and maintain performance even as workloads grow more complex. For leaders, the priority is ensuring their infrastructure keeps pace with the multimodal era – because those who move first will set the benchmarks for user experience and competitive advantage.

Frequently Asked Questions About Multimodal Inference (Text, Vision & Audio)

1. What is multimodal inference and why does it matter now?

Multimodal inference is when AI systems process text, images, and audio together to produce a single, unified prediction or action. It matters because most real-world problems aren’t unimodal: think fraud checks that combine transaction text, voice biometrics, and video ID, or autonomous systems that fuse camera feeds with spoken commands and environmental sounds. This fusion improves accuracy, creates richer interactions, and unlocks next-gen apps—from customer service agents that can listen, read, and see to healthcare tools that blend imaging, clinician notes, and spoken feedback.

2. How do GPUs make real-time multimodal inference possible?

GPUs run text, vision, and audio models in parallel instead of sequentially. Their massively parallel cores handle the tensor ops behind transformers, conv layers, and spectrogram processing at the same time, then merge outputs into one response. That parallelism, plus the flexibility to juggle heterogeneous workloads, is what keeps latency low and throughput high in production.

3. What are the biggest scaling challenges—and what helps?

Multimodal stacks face:

- Memory pressure: multiple large models can exceed a single GPU’s memory.

- Scheduling complexity: deciding which workload goes to which GPU (and when) matters.

- Latency stacking: per-modality delays can add up fast.

You counter these with smart GPU scheduling, high-bandwidth interconnects, and elastic scaling so the system stays responsive as demand grows.
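A minimal sketch of memory-aware placement, one small piece of "smart GPU scheduling", might look like the following. It estimates weight memory as parameters times bytes per parameter, then packs models onto GPUs largest-first (first-fit decreasing). The model sizes, the 24 GB capacity and the 0.8 `headroom` factor are assumptions for illustration; real planning also has to budget for activations, caches and runtime overhead.

```python
def weight_memory_gb(params_billions: float, bytes_per_param: int) -> float:
    """Weights only: billions of parameters x bytes per parameter = GB (1e9 bytes)."""
    return params_billions * bytes_per_param

def place_models(models: dict, gpu_capacity_gb: float, headroom: float = 0.8) -> list:
    """First-fit decreasing: take the largest model first and put it on
    the first GPU that still has room; open a new GPU if none does."""
    budget = gpu_capacity_gb * headroom  # leave slack for non-weight memory
    gpus = []  # each entry: {"free": remaining GB, "models": [names]}
    for name, size in sorted(models.items(), key=lambda kv: kv[1], reverse=True):
        if size > budget:
            raise ValueError(f"{name} needs {size} GB and must be split across GPUs")
        for gpu in gpus:
            if gpu["free"] >= size:
                gpu["free"] -= size
                gpu["models"].append(name)
                break
        else:
            gpus.append({"free": budget - size, "models": [name]})
    return [gpu["models"] for gpu in gpus]

# Hypothetical model sizes, 2 bytes per parameter (16-bit weights).
models = {
    "language": weight_memory_gb(7, 2),    # 14 GB
    "vision": weight_memory_gb(2, 2),      # 4 GB
    "audio": weight_memory_gb(1.5, 2),     # 3 GB
}
print(place_models(models, gpu_capacity_gb=24))
# [['language', 'vision'], ['audio']]
```

With these numbers the three models do not fit on one 24 GB GPU once headroom is reserved, so the audio model lands on a second device.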

4. Why run multimodal inference on a GPU cloud instead of on-prem?

On-prem often struggles with utilization, costly scaling, and slow hardware refreshes. GPU cloud gives you agility: dynamically provision compute as workloads shift, allocate resources across modalities, and auto-scale for peaks. Purpose-built inference engines add efficiency by routing requests, batching intelligently, and minimizing idle GPU time—so infrastructure evolves as quickly as the models.

5. How do we optimize performance and cost for multimodal workloads?

Use a stack of practical tactics:

- Model optimization: apply quantization, pruning, and distillation to shrink models with minimal accuracy loss, verified on your own evaluation set.

- Pipeline parallelism: send different modalities to different GPUs to smooth throughput.

- Streamlined data pipelines: minimize preprocessing delays so GPUs stay saturated.

- Continuous monitoring: track GPU utilization, latency, and throughput to spot bottlenecks and refine iteratively.
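For the monitoring item, a minimal sketch of turning raw request timings into the numbers worth watching could look like this. The latency samples and the two-second window are made up, and `percentile` uses the simple nearest-rank definition; a monitoring stack would normally compute these for you.

```python
import math

def percentile(values: list, pct: float):
    """Nearest-rank percentile: the smallest sample with at least
    pct percent of all samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def summarize(latencies_ms: list, window_s: float) -> dict:
    """Median and tail latency plus requests per second for one window."""
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "throughput_rps": len(latencies_ms) / window_s,
    }

# Made-up request latencies (ms) collected over a 2-second window.
samples = [82, 95, 88, 310, 91, 85, 99, 87, 93, 90]
print(summarize(samples, window_s=2.0))
# {'p50_ms': 90, 'p95_ms': 310, 'throughput_rps': 5.0}
```

The single 310 ms outlier barely moves the median but dominates the p95, which is why tail percentiles tend to be a better early signal of a bottleneck than averages.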