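This is a technical overview of the ModelMatch feature, which is scheduled for release alongside GMI Studios (coming soon).

Credit goes to the lead researcher: カオスホン, MLE Researcher at GMI Cloud

Technical overview editor: Colin Mo, Head of Content at GMI Cloud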

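Abstract

We present a commercial-grade, multi-dimensional evaluation pipeline built on GMI Cloud's large-scale dataset and RAG-assisted annotation. The framework evaluates six key dimensions (aesthetic quality, background consistency, dynamic degree, imaging quality, motion smoothness, and subject consistency) and produces both an aggregate score and detailed diagnostic insights.

Key points:

- Veo3 achieves the highest overall score, with consistent, balanced performance.

- Kling-Image2Video-V2-Master excels in specialized dimensions, making it well suited to targeted, high-fidelity applications.

- Multi-dimensional metrics provide a nuanced understanding of model capabilities and enable data-driven, customer-specific deployment decisions.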

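1. Introduction

As AI-generated video rapidly transforms advertising, entertainment, and social media, the real challenge for businesses is identifying which models deliver reliable, high-quality results at scale.

Video generation is moving into commercial applications, from content production to personalized media. With access to a large-scale dataset of generated videos, GMI Cloud offers a unique platform for benchmarking models under realistic, production-level conditions.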

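Evaluating video generation remains difficult:

- Caption-video alignment is imperfect, which limits the reliability of text-based metrics.

- Existing benchmarks are often single-dimensional and ignore motion, consistency, or image quality.

- Diagnostic insight is limited, making it hard to understand why a model succeeds or fails.

Our goal is to build the first commercial-grade, multi-dimensional evaluation pipeline for AI video generation. The framework provides robust, multi-faceted metrics and actionable insights, enabling model selection and customer-specific optimization.

All evaluations were run on GMI Cloud's elastic GPU clusters and inference pipeline, the same infrastructure available to customers for real-time video AI deployments. As a result, the benchmark results directly reflect the performance that businesses can achieve in production.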

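1.1 Industry Impact

This benchmark delivers tangible benefits across the AI video ecosystem:

- Model developers gain insights and can fine-tune performance across multiple dimensions.

- Content creators and businesses receive data-driven guidance for choosing the AI video model that fits their needs.

- GMI establishes itself as a neutral, commercial-grade evaluator, bridging the gap between academic benchmarks and real-world business requirements.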

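1.2 About GMI Cloud

GMI Cloud provides builders with next-generation AI infrastructure, offering scalable GPU clusters, an inference engine, and model evaluation pipelines. Our platform lets anyone build, evaluate, and deploy AI at scale, removing technical barriers and accelerating commercial adoption.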

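2. Methodology

2.1 Data Collection

We collected a large-scale dataset of generated videos and their corresponding prompts from GMI Cloud. The dataset is representative of real generation scenarios and provides a solid foundation for evaluating model performance under real-world conditions.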

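2.2 Annotation and Labeling

We annotated the video samples using two AI-assisted tools: RAG (retrieval-augmented generation) and DeepSeek.

- RAG helps gather relevant reference information quickly. It uses a predefined prompt list that combines examples from VBench, VideoBench, and EvalCrafter, so that the videos cover a wide range of scenarios, including different styles, motions, and content types.

- DeepSeek works together with RAG to analyze the videos themselves, helping to automatically assign labels and scores for quality aspects such as motion, aesthetics, and consistency.

Combining these tools lets us annotate large datasets efficiently while maintaining diverse coverage and reliable dimension-level evaluation, without manually watching and scoring thousands of videos.

Takeaway: This hybrid approach combines the strengths of reference-based retrieval (RAG) and direct video analysis (DeepSeek), providing a scalable, automated way to annotate and evaluate generated video content.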

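2.3 Evaluation Framework

Our evaluation builds on VBench/FBench/VMBench, with extensions designed for commercial-scale video generation:

- Support for multi-GPU parallel computation, so that large-scale evaluations run efficiently.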
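One simple way to picture multi-GPU parallel evaluation is to split the video list into per-GPU shards that are scored independently. The sketch below assumes round-robin sharding; the function name `shard_videos` and the file names are hypothetical, and the text does not describe how the actual pipeline distributes work.

```python
from typing import Dict, List


def shard_videos(video_paths: List[str], num_gpus: int) -> Dict[int, List[str]]:
    """Assign videos to GPU workers round-robin so shard sizes differ by at most one."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    shards: Dict[int, List[str]] = {gpu: [] for gpu in range(num_gpus)}
    for index, path in enumerate(video_paths):
        shards[index % num_gpus].append(path)
    return shards


# Hypothetical file names, used only for illustration.
videos = [f"video_{i:03d}.mp4" for i in range(10)]
shards = shard_videos(videos, num_gpus=4)
for gpu, paths in shards.items():
    print(f"GPU {gpu}: {len(paths)} videos")
```

Each shard could then be handed to a separate worker process bound to one GPU, with per-video scores merged afterwards.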

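We assess video quality along six key dimensions:

- Aesthetic quality: the overall visual appeal of the frames (how visually pleasing the video is; measured with the LAION aesthetic predictor, i.e. CLIP plus a regressor/MLP).

- Background consistency: the stability and coherence of the background across frames (how well the scene environment stays consistent; measured with CLIP).

- Dynamic degree: the richness and variety of motion (how much activity and movement the model generates; measured with RAFT optical flow).

- Imaging quality: resolution, sharpness, and freedom from noise and artifacts (technical quality; measured with MUSIQ trained on SPAQ).

- Motion smoothness: temporal continuity and fluidity (how smooth and natural the motion looks; measured with the frame interpolation model used in VBench).

- Subject consistency: preservation of key objects or subjects across frames (whether the main character or object stays consistent; measured with DINO features).

Note: vllm (tarsier-7b) can also be used, and new benchmarks from ICCV 2025 will be supported soon.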
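The two consistency dimensions above compare per-frame features (CLIP for the background, DINO for the subject). The sketch below assumes the features have already been extracted and scores a clip as the mean cosine similarity between consecutive frames. This is a simplified stand-in rather than the exact benchmark formula, and the names `cosine_similarity` and `temporal_consistency` are illustrative.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two feature vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def temporal_consistency(frame_features: List[Sequence[float]]) -> float:
    """Mean cosine similarity between each frame and the frame before it."""
    if len(frame_features) < 2:
        return 1.0  # a single frame is trivially consistent with itself
    sims = [
        cosine_similarity(prev, cur)
        for prev, cur in zip(frame_features, frame_features[1:])
    ]
    return sum(sims) / len(sims)


# Toy 2-D "features" standing in for real CLIP or DINO embeddings.
stable = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
drifting = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
print(temporal_consistency(stable), temporal_consistency(drifting))
```

A clip whose features barely change between frames scores close to 1, while a clip whose subject or background jumps between frames scores lower.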

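2.4 Statistical Scoring

Scoring and aggregation methodology:

- Score normalization: all dimension scores are scaled to the 0-1 range, so that readers can easily interpret high and low performance.

- Outlier removal: to avoid skewed results, the top 5% and bottom 5% of scores are discarded for each dimension. This keeps extremely good or extremely bad cases from distorting the evaluation.

- Dimension-level scoring: each video is scored separately on each of the six dimensions (aesthetic quality, background consistency, dynamic degree, imaging quality, motion smoothness, and subject consistency).

- Aggregate score: after dimension-level scoring, an overall score is computed as a weighted combination of the dimensions.

- Output: the evaluation produces tables, charts, and summaries, enabling both quick quantitative comparison and actionable insights for model selection.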
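The normalization and outlier-removal steps can be sketched as follows. This is a minimal illustration under two assumptions the text does not confirm: that trimming happens before scaling, and that scaling is min-max. The function names and the synthetic scores are hypothetical.

```python
from typing import List


def trim_outliers(scores: List[float], fraction: float = 0.05) -> List[float]:
    """Drop the lowest and highest `fraction` of scores (5% on each side by default)."""
    ordered = sorted(scores)
    k = int(len(ordered) * fraction)
    return ordered[k:len(ordered) - k] if k > 0 else ordered


def min_max_normalize(scores: List[float]) -> List[float]:
    """Scale scores linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


# 40 synthetic raw scores for one dimension, with two extreme values injected.
raw = [float(v) for v in range(1, 41)]
raw[0], raw[-1] = -100.0, 500.0
kept = trim_outliers(raw)          # removes 2 scores from each end
normalized = min_max_normalize(kept)
print(len(kept), normalized[0], normalized[-1])
```

Trimming first keeps a single extreme value from compressing every other score into a narrow band after scaling.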

3. Results

3.1 Overall Model Ranking

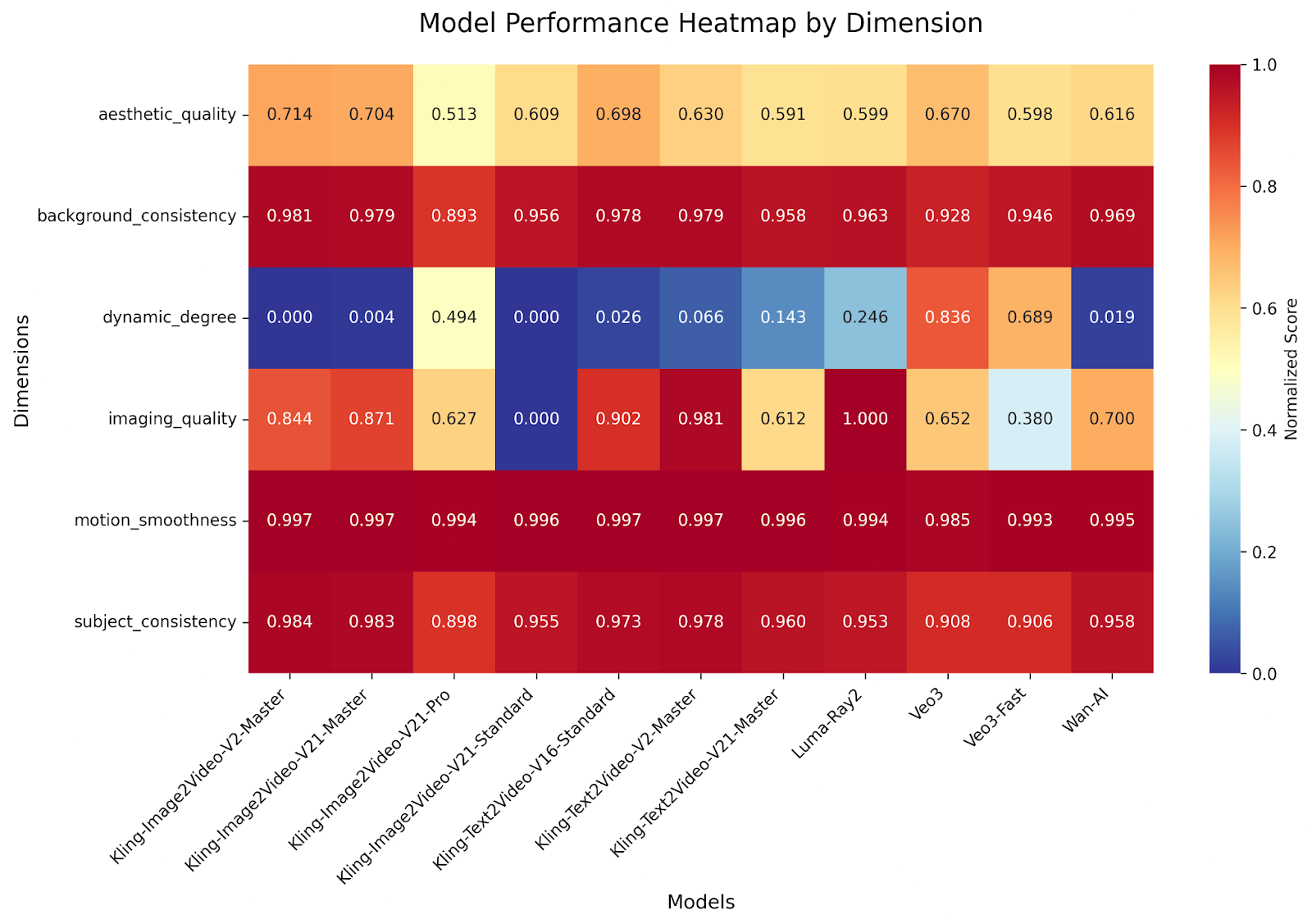

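We evaluated 271 videos generated by five major model families on GMI Cloud infrastructure, scoring them on six key dimensions: background consistency, aesthetic quality, subject consistency, dynamic degree, imaging quality, and motion smoothness. Each dimension is normalized to the 0-1 range and weighted (dynamic degree = 0.1, all others = 1.0), yielding an overall ranking that supports practical decision-making.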
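The weighting described above can be written as a short weighted sum. The weights come from the text; the dictionary keys and the function name `aggregate_score` are illustrative. The text does not say whether or how the sum is rescaled before it is reported, so this sketch returns the plain weighted sum.

```python
from typing import Dict

# Weights as stated in the text: dynamic degree = 0.1, all other dimensions = 1.0.
WEIGHTS: Dict[str, float] = {
    "background_consistency": 1.0,
    "aesthetic_quality": 1.0,
    "subject_consistency": 1.0,
    "dynamic_degree": 0.1,
    "imaging_quality": 1.0,
    "motion_smoothness": 1.0,
}


def aggregate_score(dimension_scores: Dict[str, float]) -> float:
    """Weighted sum of normalized (0-1) dimension scores."""
    missing = set(WEIGHTS) - set(dimension_scores)
    if missing:
        raise KeyError(f"missing dimensions: {sorted(missing)}")
    return sum(WEIGHTS[name] * dimension_scores[name] for name in WEIGHTS)


# A hypothetical model that scores 1.0 on every dimension.
perfect = {name: 1.0 for name in WEIGHTS}
print(round(aggregate_score(perfect), 4))
```

With these weights, dynamic degree contributes one tenth as much to the total as any other dimension, so a model cannot rank highly on motion intensity alone.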

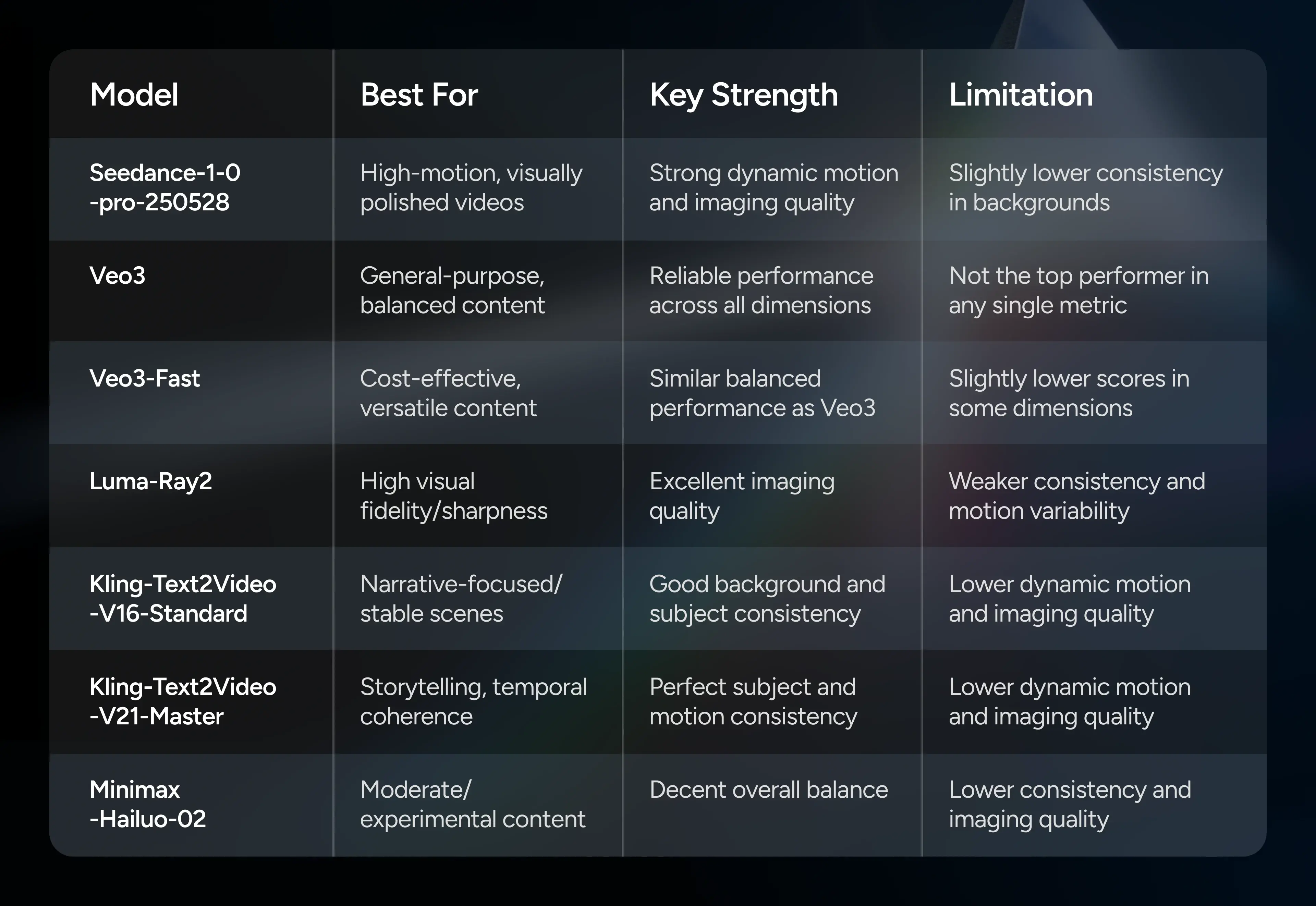

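- Seedance-1-0-Pro-250528 led with a score of 12.8784, excelling in motion energy and imaging quality, which makes it ideal for action-heavy, visually polished content.

- Veo3 scored 12.0860, offering balanced performance across all dimensions and suiting versatile, general-purpose video generation.

- Veo3-Fast followed closely at 12.0829, providing a lightweight, cost-effective alternative with similar capabilities.

- Luma-Ray2 achieved 12.0080; it is strong on dynamic motion but slightly weaker on consistency.

- The Kling variants (Text2Video-V16-Standard, V21-Master) ranked fifth and sixth, demonstrating specialized strengths in consistency and motion smoothness.

- Minimax-Hailuo-02 scored 11.3902; its lower consistency and imaging quality make it less suitable for demanding scenarios.

Takeaway: Seedance-1-0-Pro-250528 delivers the best performance for high-motion and technically demanding videos, while Veo3 is a balanced, reliable choice for a broad range of uses.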

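3.2 Best-Performing Model by Dimension

Breaking performance down by dimension shows which models excel for specific business needs:

- Background consistency: Kling-Text2Video-V21-Master (1.000) ensures perfect environmental stability.

- Aesthetic quality: Seedance-1-0-Pro-250528 (1.000) produces highly polished, visually appealing output.

- Subject consistency: Kling-Text2Video-V21-Master (1.000) keeps characters and key objects stable across frames.

- Dynamic degree: Seedance-1-0-Pro-250528 (1.000) generates the most energetic, engaging motion.

- Imaging quality: Seedance-1-0-Pro-250528 (1.000) ensures sharp, high-resolution output.

- Motion smoothness: Kling-Text2Video-V21-Master (1.000) delivers smooth, natural motion.

Takeaway: Multi-dimensional evaluation reveals complementary strengths, guiding clients to choose models based on their specific priorities: motion, stability, or overall polish.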

3.3 Performance Consistency Analysis

Consistent performance across different prompts is critical for production reliability and scalability:

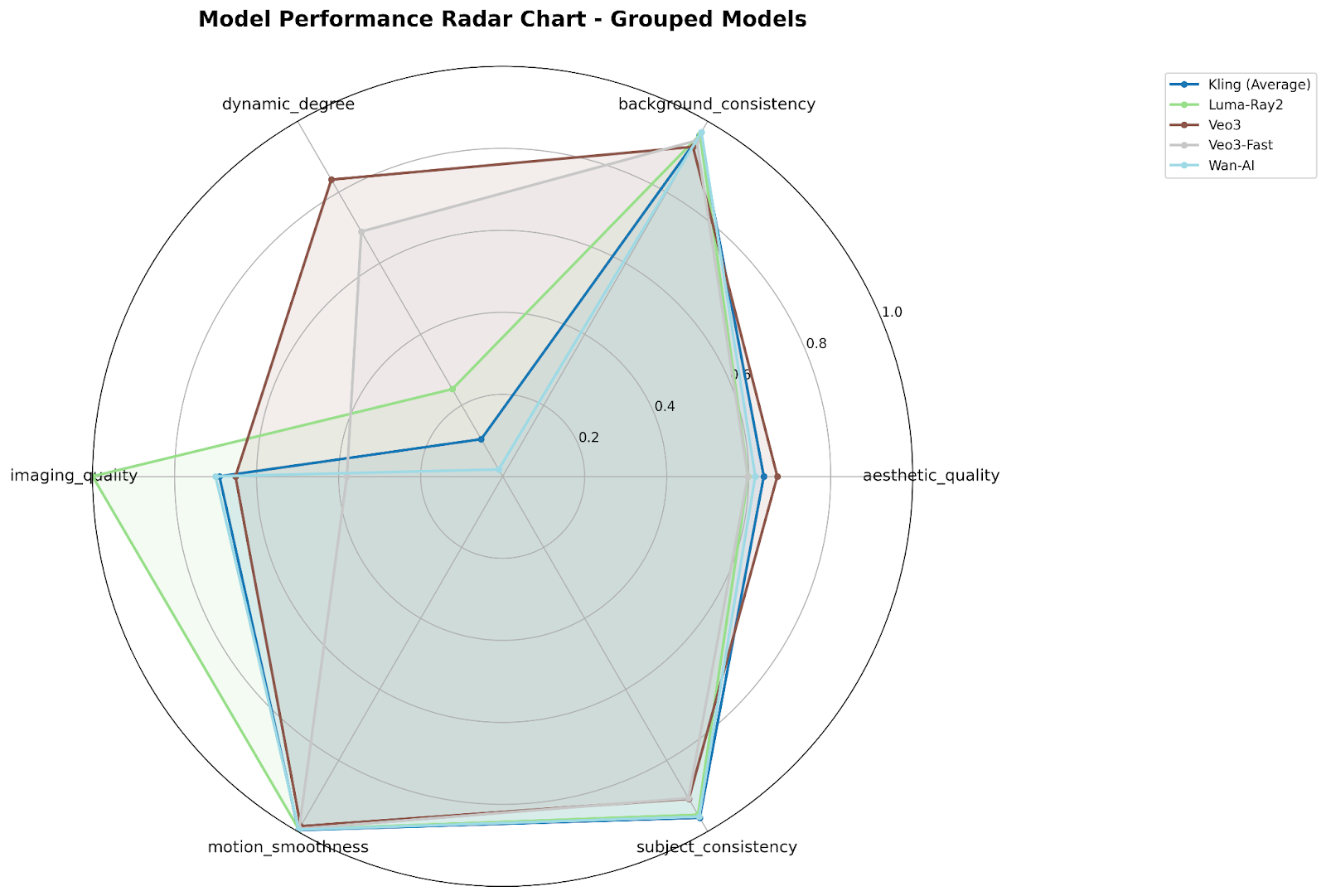

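- Seedance-1-0-Pro-250528 shows strong consistency in dynamic degree and imaging quality, though its background consistency and motion smoothness are slightly lower.

- Kling-Text2Video-V21-Master excels in background and subject consistency, but its motion energy and image quality are lower.

- Veo3 and Veo3-Fast maintain balanced stability across all dimensions, making them dependable for general-purpose deployment.

- Luma-Ray2 and Minimax-Hailuo-02 show trade-offs: for example, Luma-Ray2 is strong on motion but weaker on consistency, while Minimax-Hailuo-02 delivers moderate performance overall.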
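Consistency across prompts can be quantified as the spread of a model's per-prompt scores. The sketch below assumes population standard deviation as the spread measure, which the text does not specify; the model names and scores are synthetic placeholders, not benchmark results.

```python
from statistics import mean, pstdev
from typing import Dict, List


def stability_report(scores_by_model: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Per-model mean and population standard deviation of per-prompt scores."""
    return {
        model: {"mean": mean(scores), "std": pstdev(scores)}
        for model, scores in scores_by_model.items()
    }


# Synthetic per-prompt scores: both models have the same mean, but different spread.
scores = {
    "model_a": [0.80, 0.82, 0.78, 0.80],
    "model_b": [0.95, 0.55, 0.99, 0.71],
}
report = stability_report(scores)
most_stable = min(report, key=lambda m: report[m]["std"])
for model, stats in report.items():
    print(f"{model}: mean={stats['mean']:.3f} std={stats['std']:.3f}")
print("most stable:", most_stable)
```

Two models with the same average can behave very differently in production, which is why the spread is reported alongside the mean.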

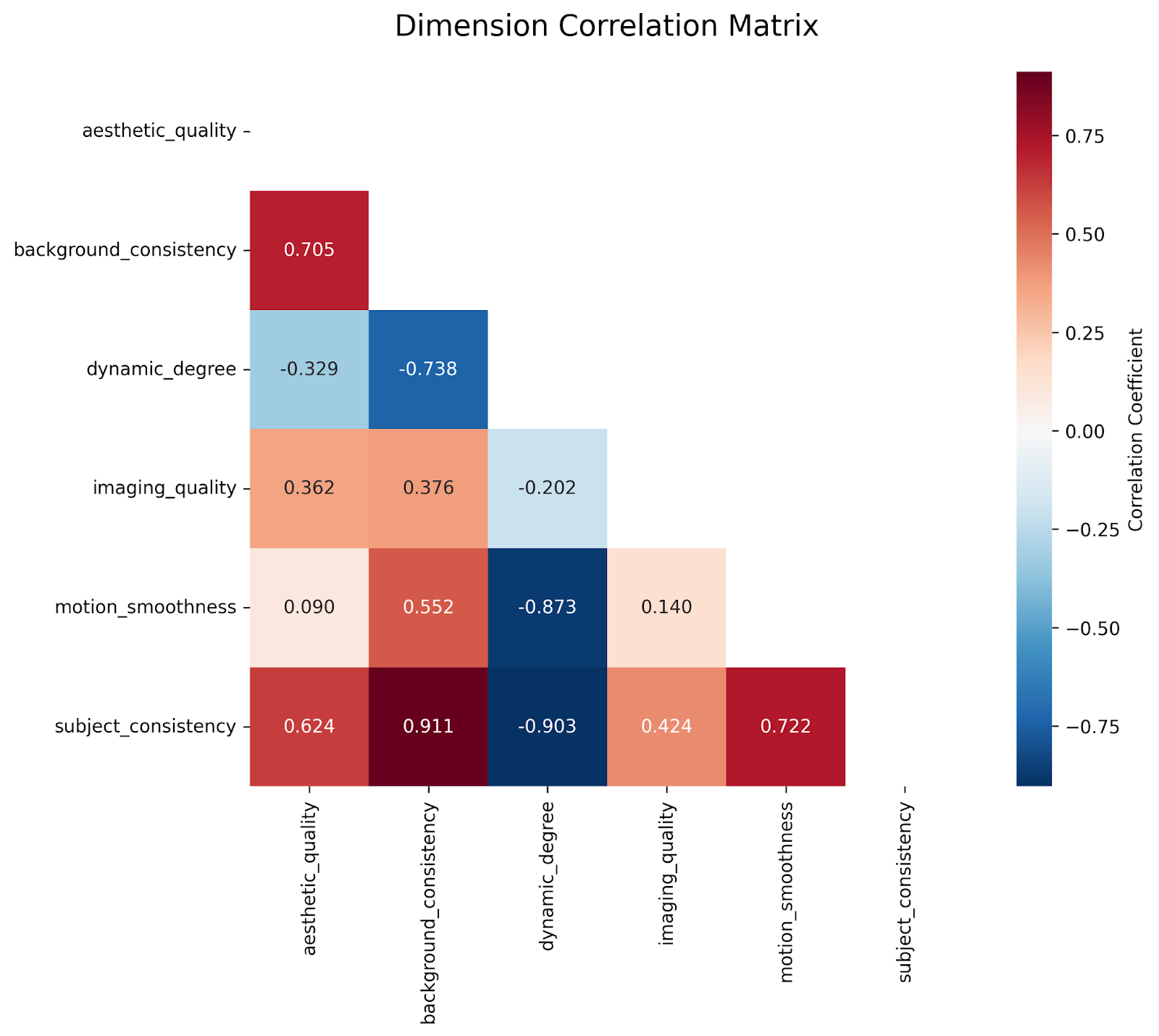

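3.4 Correlation Analysis

A correlation heatmap was generated across the six evaluation dimensions to examine interdependencies between metrics. Preliminary observations include:

- A strong correlation between motion smoothness and background consistency, indicating that models that handle temporal coherence well also keep backgrounds stable.

- Aesthetic quality shows a moderate correlation with imaging quality but only a weak correlation with dynamic degree, underscoring that visual appeal is not necessarily tied to richness of motion.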
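The matrix behind such a heatmap can be computed with pairwise Pearson correlation. The sketch below assumes Pearson as the coefficient, which the text does not name, and uses synthetic per-video scores for three of the dimensions; none of the numbers are benchmark results.

```python
import math
from typing import Dict, List


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient of two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0.0 or var_y == 0.0:
        return float("nan")  # undefined when one series is constant
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(columns: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Pairwise Pearson correlations between all score columns."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


# Synthetic per-video scores, chosen so the relationships are easy to see.
columns = {
    "motion_smoothness": [0.1, 0.2, 0.3, 0.4],
    "background_consistency": [0.2, 0.4, 0.6, 0.8],
    "dynamic_degree": [0.4, 0.3, 0.2, 0.1],
}
matrix = correlation_matrix(columns)
for name, row in matrix.items():
    print(name, {other: round(value, 2) for other, value in row.items()})
```

The resulting nested dictionary can be passed to any plotting library to draw the heatmap itself.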

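4. Limitations and Future Work

Limitations:

- The current evaluation dimensions (background, motion, aesthetics, and so on) may not fully reflect how humans judge videos, for example narrative coherence or emotional impact.

- Correlating AI evaluation scores with human preference is not straightforward; conventional correlation metrics are insufficient, and more advanced mathematical approaches are needed.

- Current multimodal LLMs are not yet strong enough to provide fully automated, reliable, human-like evaluation.

Future work:

- Explore human-in-the-loop evaluation to validate and improve AI-based scoring.

- Develop LLM-powered evaluators that can automatically assess complex narrative and semantic aspects.

- Use this benchmark to build autonomous video optimization agents, similar to automated Photoshop workflows, that iteratively improve video quality for specific business scenarios.

Takeaway: Addressing these limitations will strengthen evaluation fidelity, alignment with human perception, and real-world deployment, positioning this benchmark as a foundation for next-generation AI video tools.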

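5. Discussion and Conclusion

5.1 Discussion

Our evaluation of 271 videos from five major model families reveals several practical findings for model selection.

Overall performance and dimension-specific strengths

- Veo3 leads on overall score, offering balanced, reliable performance across all six dimensions.

- Kling-Image2Video-V2-Master, although slightly lower overall, excels in background consistency, motion smoothness, and subject fidelity, making it ideal for applications that require specific quality attributes.

Performance consistency matters

- Models such as Veo3 and Kling-Image2Video-V21-Pro exhibit low score variance, ensuring stable performance across different prompts.

- Some models with high individual scores show larger variance, signaling potential reliability issues in production.

Performance patterns across dimensions

- Kling-Image2Video-V2-Master shines in temporal coherence and subject stability.

- Luma-Ray2 achieves the best image quality, making it ideal for scenarios that prioritize visual fidelity.

- Veo3 remains a dependable general-purpose solution with balanced, all-round performance.

Recommended models by use case

- High-motion, dynamic content: choose a model with high dynamic degree and motion smoothness (e.g., Kling-Image2Video-V2-Master).

- Tasks focused on visual fidelity: choose Luma-Ray2 for sharpness and resolution.

- Balanced, multi-purpose applications: Veo3 delivers reliable results across all dimensions.

Takeaway: Choosing the right model depends on specific business needs, and multi-dimensional evaluation enables task-driven, customer-specific recommendations.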

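5.2 Conclusion

- For content creators: provides quantitative guidance for choosing models that balance visual aesthetics, motion realism, and scene stability for storytelling and advertising.

- For businesses: delivers a transparent, data-driven foundation for integrating generative video into commercial pipelines, from marketing automation to personalized media.

- For the AI ecosystem: establishes a reproducible, standardized evaluation protocol aligned with real-world content quality expectations, accelerating model maturity and responsible adoption.

- For benchmarking trends: shifts the focus from subjective human scoring to automated multi-dimensional analysis, opening a new phase of transparency and scalability in AI evaluation.

Forward-looking remarks

Future iterations will incorporate human perceptual scoring, prompt-style diversity testing, and support for several new benchmarks presented at ICCV 2025, laying the groundwork for the world's first end-to-end commercial video AI benchmark. This roadmap supports continuous improvement in model design, evaluation methodology, and business-aligned AI video solutions, while keeping GMI Cloud at the forefront of commercial video AI evaluation and deployment.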