The demand for GPU cloud infrastructure has grown rapidly in recent years, driven by the widespread adoption of artificial intelligence. Training and running models for computer vision, natural language processing, and real-time inference require far more computational power than standard CPU-based setups.

But as businesses weigh their options, one question consistently arises: How much does GPU cloud really cost in 2025?

The answer is not as simple as looking at the hourly price of an instance. Real-world costs involve a combination of performance, utilization, scalability, and operational factors that directly affect the bottom line.

In this article, we break down the key elements that influence GPU cloud expenses and what organizations should consider before committing to an infrastructure strategy.

The rise of GPU cloud in AI workloads

Modern AI workloads are dominated by operations like large-scale matrix multiplications, attention layers and gradient updates that demand extreme parallelism and high memory bandwidth. GPUs (or graphics processing unit) are engineered for exactly this kind of throughput, which is why they have become the standard compute layer for both training and inference.

As AI adoption spreads across industries, the GPU cloud market has evolved with it. By 2025, businesses no longer see GPUs as a niche option but as the default choice for inference and training. From real-time language translation to fraud detection in financial services, the speed advantages are often indispensable.

But with growing demand comes higher scrutiny of costs. Organizations need to evaluate not just whether GPUs are faster, but whether they are cost-efficient for their specific workloads.

The headline cost: hourly instance pricing

The most visible cost of GPU cloud is the hourly or per-second rate of running an instance. A high-performance GPU instance in 2025 often ranges between $2 and $15 per hour, depending on the type of card, memory, and provider.

However, comparing instances solely on this basis is misleading. A GPU that costs twice as much per hour may finish the same workload in a fraction of the time, ultimately reducing the total expense. This is where benchmarking becomes critical.

Beyond hourly rates: total cost of inference

When evaluating GPU cloud, businesses should think in terms of total cost of inference rather than sticker price. Factors that shape this include:

- Throughput: How many inference requests or training steps can be processed per second.

- Latency: The delay between input and output, critical for real-time inference.

- Utilization: How efficiently the GPU is used during workloads. Idle GPUs mean wasted spend.

- Scalability: The ability to handle spikes in demand without overspending on unused capacity.

By analyzing cost per inference rather than cost per hour, organizations get a much clearer picture of efficiency.

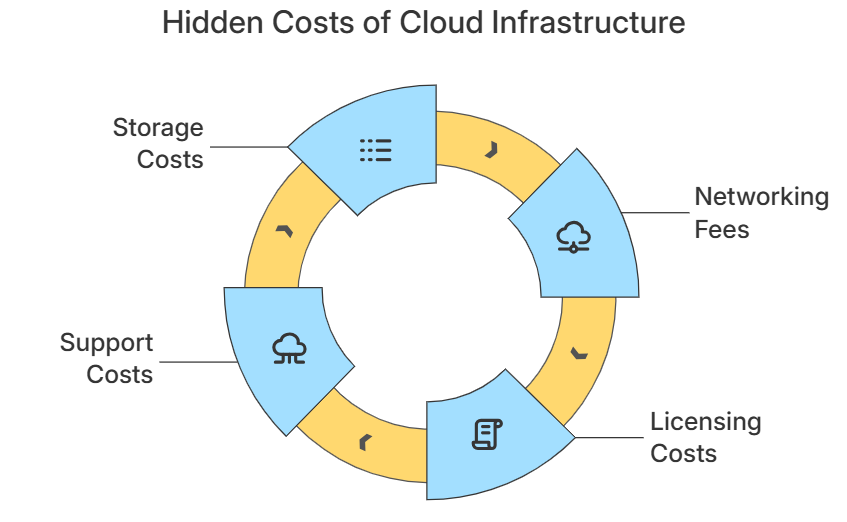

Storage, networking and hidden costs

GPU instances are only part of the equation. Cloud infrastructure comes with additional costs:

- Storage: Datasets, model checkpoints and logs require large volumes of high-speed storage, which can be priced separately.

- Networking: Data transfer between GPUs, CPUs and across regions can generate significant fees, particularly for distributed training.

- Licensing and frameworks: Some providers include optimized AI frameworks or proprietary inference engines, while others require additional licensing.

- Support and monitoring: Enterprises often pay extra for managed services, monitoring tools, and advanced support tiers.

These hidden costs can make the difference between a predictable bill and unexpected budget overruns.

Reserved, spot and on-demand pricing

Providers offer multiple pricing models, each affecting total costs:

- On-demand instances: Flexible, but the most expensive per hour.

- Reserved instances: Lower hourly rates in exchange for long-term commitments (1–3 years).

- Spot instances: Substantially cheaper, but with the risk of interruptions when demand surges.

The right mix depends on workload characteristics. For mission-critical, real-time inference, on-demand or reserved GPUs may be essential. For non-urgent batch jobs, spot pricing can yield dramatic savings.

Comparing providers in 2025

By 2025, the market is split between hyperscalers and specialized GPU cloud platforms.

- Hyperscalers (like AWS, Azure, Google Cloud) offer global availability and integrated ecosystems but often at higher costs. Networking and storage fees can add up quickly in these environments.

- Specialized providers (such as GMI Cloud, RunPod or Groq) focus on inference-optimized infrastructure. They frequently provide lower latency, more predictable pricing, and simplified scaling features.

Organizations increasingly mix providers, balancing hyperscaler integration with specialized GPU efficiency.

Cost efficiency through optimization

Lowering GPU cloud costs is not just about choosing the right provider – it is also about optimizing workloads. Techniques include:

- Batching: Grouping inference requests together to maximize GPU utilization.

- Quantization and pruning: Compressing models to use fewer GPU resources while maintaining accuracy.

- Autoscaling: Dynamically adjusting the number of GPU instances based on traffic.

- Hybrid deployment: Running latency-critical inference on GPUs while offloading background tasks to CPUs.

These strategies can reduce cloud bills by 30% or more without sacrificing performance.

Energy efficiency and sustainability costs

In 2025, cost considerations are increasingly tied to energy efficiency and sustainability. GPUs consume significant power, and cloud providers are passing on energy costs to customers through pricing models. Some platforms now advertise carbon-aware scheduling, where workloads are run in regions with cleaner energy sources, sometimes at lower prices.

For enterprises with environmental, social and governance (ESG) goals, choosing providers with sustainable GPU infrastructure is not only a cost factor but also a reputational one.

Benchmarking: the only reliable way to know

Every workload is unique. While marketing claims about cost and performance can be persuasive, benchmarking remains the only reliable method for calculating true GPU cloud costs. This involves running models on different provider setups, measuring throughput, latency, and cost per inference, then scaling those results to expected production workloads.

Benchmarks should also evaluate scalability, monitoring how systems perform under peak loads. A provider that appears cheaper on paper may underperform during traffic spikes, leading to user dissatisfaction and higher indirect costs.

The bottom line

So, how much does GPU cloud computing really cost in 2025? The short answer: it depends. Hourly rates may range from a few dollars to over ten, but the true cost is shaped by workload type, utilization, scaling strategy, and provider choice. Businesses that measure cost per inference, optimize their deployments, and benchmark across platforms will achieve the greatest efficiency.

Ultimately, the GPU cloud is not just an expense but an enabler. For companies deploying AI at scale, the value generated – in faster decision-making, improved user experience, and competitive advantage – often outweighs raw infrastructure spend. The challenge lies in aligning infrastructure choices with business needs, ensuring that every dollar spent on GPUs drives measurable impact.

Frequently Asked Questions About GPU Cloud Costs in 2025

How much does GPU cloud computing cost per hour in 2025, and why is the hourly price not the full story?

Typical high-performance GPU instances range from about $2 to $15 per hour depending on the card, memory, and provider. However, a pricier GPU may finish the same job much faster, so the real cost depends on throughput, latency, utilization, and scalability—not just the sticker price.

How should I calculate the total cost of inference for my AI workload?

Shift from “cost per hour” to “cost per inference.” Benchmark your model under realistic traffic to measure requests per second and latency, account for GPU utilization and scaling behavior, and compare the resulting cost per inference across providers.

What hidden costs can significantly impact my GPU cloud bill?

Beyond compute, you will pay for storage (datasets, checkpoints, logs), networking (data transfer within and across regions, especially for distributed training), potential licensing for frameworks or runtimes, and managed support or monitoring. These can turn a predictable estimate into overruns if not planned.

How do on-demand, reserved, and spot GPU pricing models compare in practice?

On-demand is the most flexible but the most expensive. Reserved instances trade a long-term commitment (typically 1–3 years) for lower hourly rates. Spot instances are much cheaper but can be interrupted, making them best for non-urgent batch jobs rather than mission-critical real-time inference.

Should I choose a hyperscaler or a specialized GPU cloud provider in 2025?

Hyperscalers (such as AWS, Azure, and Google Cloud) offer global reach and strong ecosystem integration but can have higher overall costs once networking and storage are included. Specialized platforms (such as GMI Cloud, RunPod, or Groq) focus on inference-optimized infrastructure with lower latency, more predictable pricing, and simpler scaling; many teams mix both to balance integration and efficiency.