Meet Kimi K2-Instruct

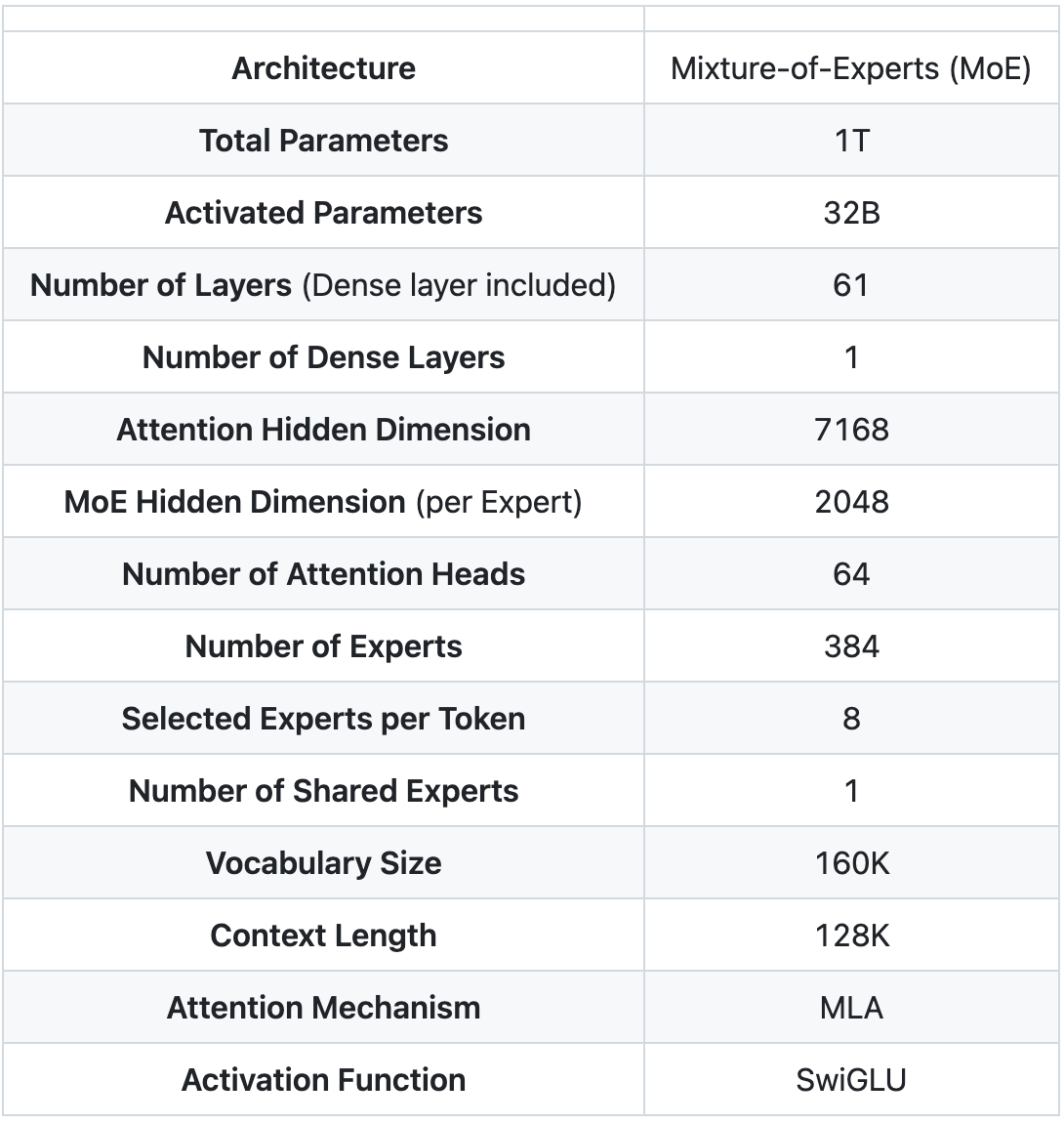

Kimi K2 is a Mixture-of-Experts model from Moonshot AI with 1 trillion total parameters, 32 billion of which are active per token, and a 128k-token context window. The Instruct model is now fully integrated into the GMI Cloud inference engine. Trained with the MuonClip optimizer, Kimi K2 performs strongly on frontier knowledge, reasoning, and coding tasks, and it is optimized for agentic capabilities. Check out their GitHub here.

Why Kimi K2?

Kimi K2 was developed by Moonshot AI, a frontier AI research lab based in China. Moonshot is focused on building competitive open models with practical applications, particularly in long-context memory and multi-modal learning. Kimi K2 is their most advanced offering to date and reflects their broader mission of making cutting-edge AI research openly accessible to the world.

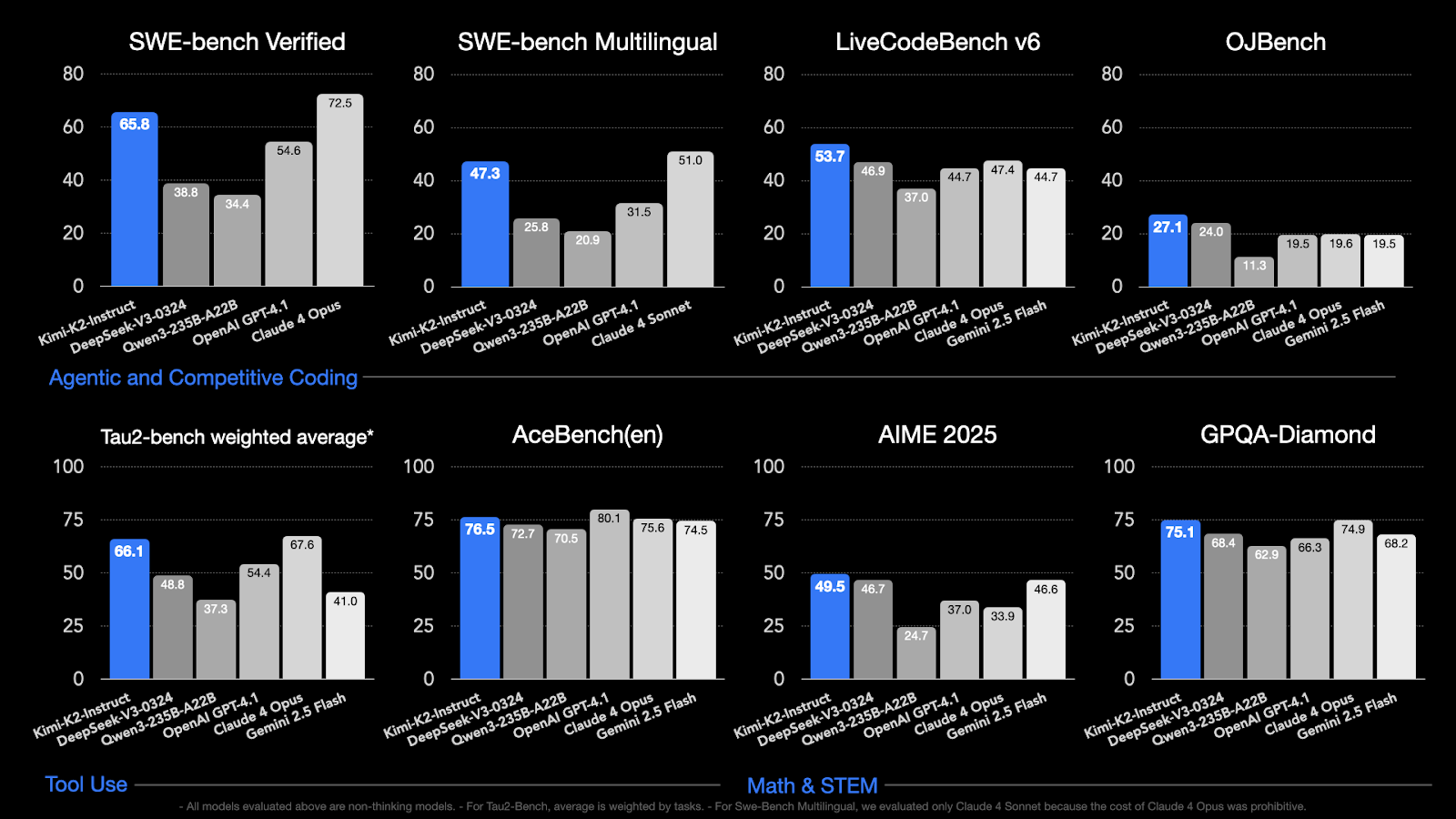

Here's the benchmarks for how it compares with other models in the wild:

Run Kimi K2 on GMI Cloud

You can deploy Kimi K2 immediately through our inference engine by following the instructions here.

GMI Cloud provides the infrastructure, tooling, and support needed to deploy Kimi K2 at scale. Our inference engine is optimized for high token throughput and ease of use, so teams can integrate it into production environments quickly.

Teams using GMI Cloud can:

- Serve Kimi K2 via an optimized, high-throughput inference backend

- Configure models for batch, streaming, or interactive inference

- Integrate with prompt management, RAG pipelines, and eval tooling

- Connect via simple APIs without additional DevOps effort

- Scale with usage-based pricing and full visibility into performance
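As a rough illustration of the "simple APIs" point above, here is a minimal sketch of what a request could look like. It assumes an OpenAI-style chat-completions schema, which this post does not confirm. `BASE_URL`, `MODEL_ID`, and the `build_chat_request` helper are hypothetical placeholders, so take the real endpoint, model ID, and parameters from the GMI Cloud instructions. The sketch only builds the request and does not send it.

```python
import json
import urllib.request

# Hypothetical placeholders (assumptions, not real GMI Cloud values):
# replace both with the endpoint and model ID from the GMI Cloud docs.
BASE_URL = "https://example.invalid/v1"
MODEL_ID = "kimi-k2-instruct"


def build_chat_request(prompt, api_key, stream=False, max_tokens=512):
    """Build (but do not send) a chat request in an assumed OpenAI-style schema."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,          # True would request streaming output
        "max_tokens": max_tokens,  # cap on generated tokens
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_chat_request("Summarize this contract clause.", api_key="YOUR_API_KEY")
print(request.get_method(), request.full_url)
```

Once the placeholders are replaced with real values, the request could be sent with `urllib.request.urlopen(request)`. Setting `stream=True` corresponds to the streaming mode mentioned in the list, assuming the service supports that flag.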

At GMI Cloud, we’re excited to offer access to Kimi K2 because it unlocks a new level of long-context reasoning for teams building research assistants, legal AI, financial analysis tools, and other high-memory applications. We see Kimi K2 as a core model for anyone looking to build intelligent systems that need to reason over vast, interrelated information.

Model Overview

Technical Overview

GitHub Repository

Get Started

Kimi K2 is now available on GMI Cloud for research and production use. Whether you're building AI agents, enterprise workflows, or RAG applications, GMI Cloud makes it easy to deploy and scale long-context models like Kimi K2.

Explore Kimi K2 on GMI Cloud Playground

About GMI Cloud

GMI Cloud is a high-performance AI cloud platform purpose-built for running modern inference and training workloads. With GMI Cloud Inference Engine, users can access, evaluate, and deploy top open-source models with production-ready performance.

Explore more hosted models → GMI Model Library
