This article explains why GPU cloud platforms are increasingly replacing traditional VM-based compute for modern AI workloads, as training and inference place new demands on infrastructure.

What you’ll learn:

- How AI workloads differ from traditional applications in compute, networking, and latency needs

- Why VM-based GPU deployments often lead to poor utilization and higher costs

- How GPU-native scheduling and high-performance networking improve training and inference

- The importance of observability and elasticity for large-scale AI systems

- Why GPU cloud pricing models better align with real AI workload usage

- How GPU cloud platforms support modern MLOps and production AI at scale

For years, virtual machines were the default abstraction for running compute-intensive workloads in the cloud. They offered isolation, predictability and a familiar operating model for infrastructure teams. But as AI workloads have matured, the limitations of VM-centric architectures have become increasingly apparent. Training large models, serving low-latency inference and running multi-stage pipelines place very different demands on infrastructure than traditional application workloads.

GPU cloud platforms have emerged as a response to these demands. Rather than treating GPUs as just another resource attached to a VM, they are designed around GPU-native scheduling, networking and performance characteristics. This shift is reshaping how AI systems are built, scaled and operated.

Understanding why GPU cloud is replacing traditional VM-based compute requires looking beyond raw performance and examining how modern AI workloads actually behave.

AI workloads stress infrastructure differently than traditional applications

Traditional VM-based infrastructure was designed for workloads with relatively stable resource profiles. Web services, databases and batch jobs typically scale in predictable ways and tolerate moderate latency. AI workloads do not.

Training jobs generate bursty, synchronized demand across multiple GPUs. Inference services require extremely low and consistent latency, often under variable traffic patterns. Agentic systems and retrieval pipelines chain multiple model calls together, amplifying any inefficiency in scheduling or networking.

VM abstractions struggle to accommodate these patterns efficiently. GPUs are often statically bound to VMs, leading to fragmentation and idle capacity. Networking between VMs introduces latency and variability that degrade distributed training and inference performance. As workloads grow more complex, these inefficiencies compound.

GPU cloud platforms are built to handle these dynamics directly, rather than forcing AI workloads into abstractions designed for general-purpose computing.

GPU utilization suffers in VM-centric environments

One of the most common problems with VM-based GPU deployments is poor utilization. When GPUs are tied to individual VMs, they become difficult to share, schedule dynamically or reassign based on demand.

A training job may reserve multiple GPUs for hours, even if parts of the pipeline are CPU-bound or waiting on data. Inference services may require GPUs during traffic spikes but leave them idle during quiet periods. With VM-based compute, this idle time translates directly into wasted cost.

GPU cloud platforms address this by decoupling GPUs from static VM lifecycles. GPUs become schedulable resources that can be allocated, reclaimed and reassigned dynamically. This improves utilization without forcing teams to redesign their workloads around rigid infrastructure constraints.

Networking becomes a bottleneck long before compute does

As models scale, networking performance becomes just as critical as GPU performance. Distributed training relies on fast, low-latency communication between GPUs. Inference pipelines depend on rapid data movement between components such as embedding services, vector stores and generation models.

Traditional VM networking was not designed for this level of sustained, high-bandwidth communication. Even with enhanced networking options, VM-to-VM communication often introduces variability that degrades performance and scaling efficiency.

GPU cloud platforms are built around high-bandwidth, low-latency networking fabrics optimized for GPU communication. This allows distributed workloads to scale more predictably and reduces the overhead that often limits VM-based deployments.

VM abstractions hide too much of what AI teams need to see

VMs provide strong isolation, but they also obscure critical details. For AI teams, understanding what is happening inside the system is essential. Training efficiency depends on visibility into GPU scheduling, queue depth, data pipeline behavior and interconnect utilization.

In VM-centric environments, this information is often fragmented across layers or unavailable entirely. Teams may see that a VM is running, but not why a GPU is idle or why throughput has dropped.

GPU cloud platforms expose telemetry that aligns with AI workloads. Engineers can see how GPUs are being scheduled, how inference requests are batched and where bottlenecks emerge. This observability is crucial for optimizing performance and controlling costs as systems scale.

AI workflows demand elasticity that VMs struggle to provide

AI workloads are inherently elastic. Experimentation spikes unpredictably. Training jobs may require large bursts of compute for short periods. Inference traffic can fluctuate dramatically based on user behavior.

While VMs can scale, they often do so slowly and in coarse increments. Provisioning new VM instances, attaching GPUs and configuring networking introduces delays that are unacceptable for fast-moving AI teams.

GPU cloud platforms are designed for rapid elasticity. GPUs can be provisioned on demand, scaled up during peaks and released when no longer needed. This allows teams to respond to changing workloads without overprovisioning or long lead times.

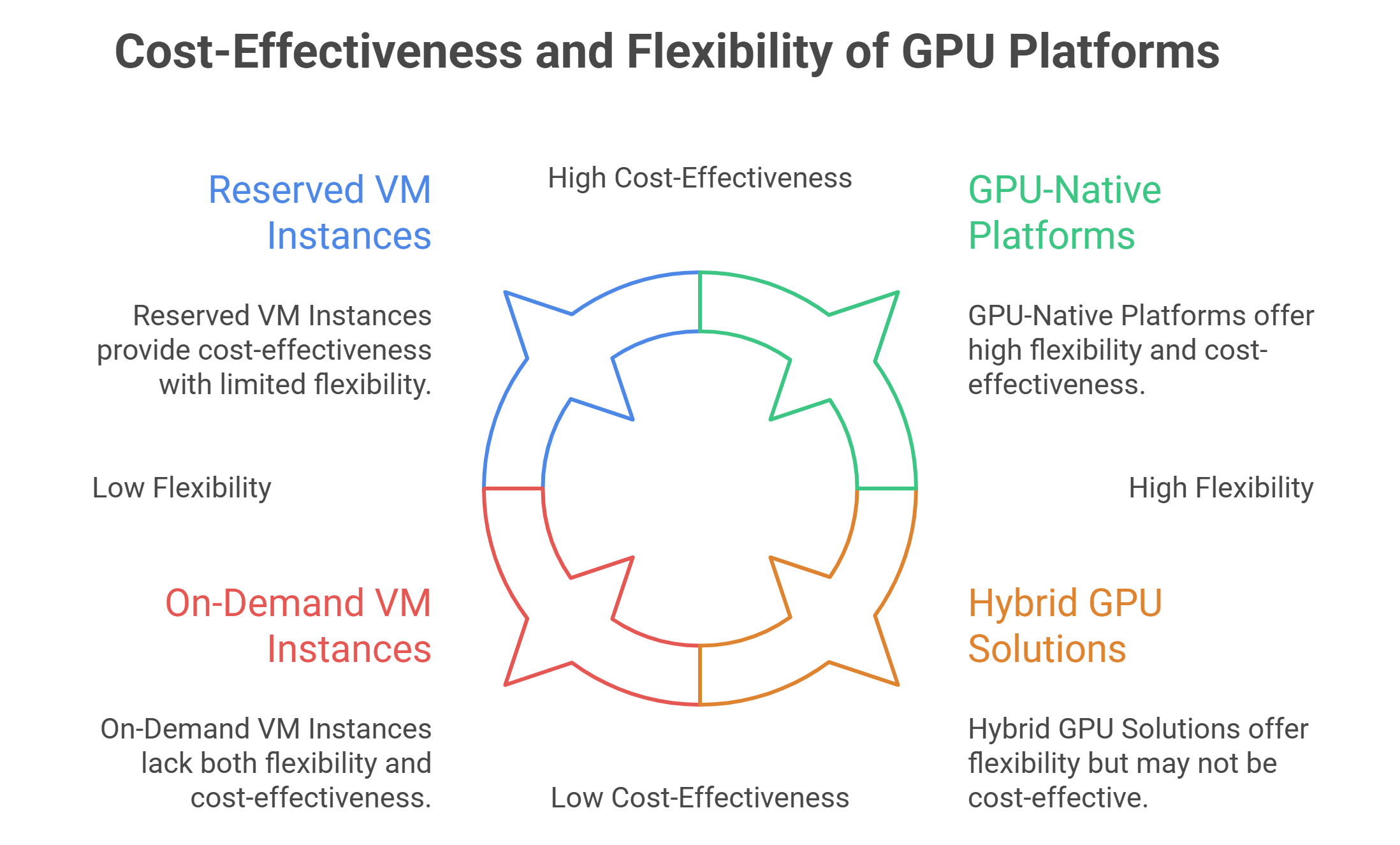

Cost models favor GPU-native platforms as workloads mature

VM-based GPU pricing often obscures the true cost of AI workloads. Teams pay for entire VM instances even when GPUs are underutilized. Long-running instances accumulate cost regardless of whether they are actively contributing to training or inference.

GPU cloud platforms align pricing more closely with actual usage. By enabling finer-grained scheduling and supporting a mix of reserved and on-demand capacity, they reduce the amount of stranded compute.

This is especially important as inference becomes the dominant cost center for many AI products. Optimizing cost per request or cost per token requires infrastructure that can adapt dynamically, something VM-centric models struggle to deliver efficiently.

Security and isolation without VM overhead

One reason VMs have remained popular is security. Strong isolation boundaries make them attractive for multi-tenant environments. GPU cloud platforms address this concern without relying exclusively on VMs.

Modern GPU clouds provide isolation through a combination of containerization, scheduling controls, network segmentation and access policies. This allows multiple teams or workloads to share GPU infrastructure safely while avoiding the overhead of fully isolated VM instances for each task.

For enterprises, this balance is critical. They gain the efficiency benefits of shared GPU pools without sacrificing the security guarantees required for production AI systems.

GPU cloud aligns better with modern MLOps practices

MLOps workflows emphasize automation, repeatability and continuous improvement. Models move from experimentation to training to inference through CI/CD pipelines. Infrastructure must support this flow without manual intervention.

VM-based environments often require custom scripts, static provisioning and manual coordination between teams. GPU cloud platforms integrate more naturally with container-based workflows, orchestration systems and automated pipelines.

This integration reduces friction between research and production and enables faster iteration cycles. Teams spend less time managing infrastructure and more time improving models and products.

Why enterprises are moving faster toward GPU-native cloud

AI workloads increasingly operate under constraints that VM-based abstractions were never designed to handle. Models are larger and more distributed, inference has become continuous and cost-sensitive, and agentic systems introduce parallel, multi-stage execution patterns. At the same time, GPU availability, pricing and performance vary widely across regions, making static allocation inefficient.

GPU cloud platforms respond by treating GPUs as first-class resources, optimizing networking and scheduling for AI-native behavior and exposing the observability enterprises need to operate at scale.

For organizations building production AI systems, this shift is less about incremental performance gains and more about infrastructure aligned with how modern AI actually runs.

Frequently Asked Questions about Why GPU Cloud Is Replacing VM-Based Compute for AI

1. Why are GPU cloud platforms replacing traditional VM-based compute for AI workloads?

Because AI workloads behave very differently from traditional applications. Training, low-latency inference, and multi-stage pipelines create bursty demand, require tight GPU coordination, and amplify scheduling/networking inefficiencies—areas where VM-centric setups often struggle.

2. What makes AI workloads harder to run efficiently on VM-centric infrastructure?

AI training can require synchronized work across many GPUs, while inference needs very low and consistent latency under changing traffic. Agentic and retrieval pipelines chain multiple model calls together, so any scheduling delays or network variability become bigger performance problems.

3. How does GPU cloud improve GPU utilization compared to VMs?

In VM-based setups, GPUs are often statically bound to specific VMs, which leads to fragmentation and idle time when workloads are waiting on data or CPU stages. GPU cloud platforms decouple GPUs from VM lifecycles so GPUs can be allocated, reclaimed, and reassigned dynamically based on demand.

4. Why does networking become a bottleneck in VM-based GPU deployments?

Distributed training depends on fast, low-latency GPU-to-GPU communication, and inference pipelines need quick data movement between components. VM-to-VM networking often introduces latency and variability that limits scaling efficiency, even when “enhanced networking” options are used.

5. What observability do GPU cloud platforms provide that VMs often hide?

GPU cloud platforms expose AI-relevant telemetry—like GPU scheduling behavior, queue depth, data pipeline performance, batching behavior for inference, and where bottlenecks appear—so teams can understand why throughput drops or why GPUs go idle.