This article highlights the most important open-source frameworks shaping GPU cloud adoption in 2025, explaining how they drive efficiency, scalability, and real-world AI deployment across industries.

What you’ll learn:

- Why open-source frameworks matter for GPU performance and cost efficiency

- The role of PyTorch, TensorFlow, and JAX in research and production

- How Hugging Face tools accelerate NLP, vision, and generative AI

- Scaling strategies with DeepSpeed, Megatron-LM, and Ray

- Reinforcement learning support through Stable Baselines3 and RLlib

- Lifecycle and governance with MLflow and Kubeflow

- How to build a curated framework stack aligned with business goals

Building and deploying AI models on the GPU cloud is not just about choosing the right infrastructure. The frameworks you use to develop, train and serve models often determine how efficiently you can leverage that infrastructure. Open-source frameworks in particular play a central role, providing flexibility, community-driven innovation and compatibility with evolving hardware.

In 2025, the ecosystem has matured far beyond the early days of a few dominant libraries. Today’s developers and ML teams work in a diverse landscape of frameworks tailored to different tasks – from deep learning to reinforcement learning, from multimodal modeling to large-scale inference. For CTOs and practitioners, understanding which frameworks matter most helps ensure that GPU cloud investments translate into real business outcomes.

Why open-source frameworks matter for GPU cloud

The GPU cloud provides scalable compute, but frameworks determine how workloads actually run on that compute. A well-optimized framework can minimize latency, maximize throughput, and reduce operating costs by making full use of GPU parallelism. Poorly matched frameworks, by contrast, can waste resources and slow down deployments.
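To make the cost argument concrete, the arithmetic below converts a GPU's hourly price and its sustained serving throughput into a cost per million generated tokens. The price and throughput figures are hypothetical placeholders, not benchmarks; the point is that doubling throughput on the same hardware halves the unit cost.

```python
def cost_per_million_tokens(gpu_hourly_usd: float, tokens_per_second: float) -> float:
    """Unit serving cost for one GPU running at a sustained throughput.

    Both inputs are assumptions you would replace with your own
    provider pricing and measured throughput.
    """
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return gpu_hourly_usd / tokens_per_hour * 1_000_000


# Hypothetical figures: the same $2.00/hour GPU served by a poorly tuned
# stack (500 tokens/s) versus a well-optimized one (1,000 tokens/s).
baseline = cost_per_million_tokens(2.00, 500)
optimized = cost_per_million_tokens(2.00, 1_000)
print(f"baseline:  ${baseline:.2f} per 1M tokens")
print(f"optimized: ${optimized:.2f} per 1M tokens")
```

The framework does not change the hourly price, only the throughput term, which is why efficiency gains show up directly in unit economics.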

Open-source frameworks also encourage transparency and portability. Teams are less likely to be locked into proprietary ecosystems, and they benefit from improvements contributed by a global community. For enterprises that value flexibility and long-term sustainability, this openness is key.

PyTorch: the workhorse of deep learning

PyTorch remains the dominant framework for both research and production. Its dynamic (eager) computation graph and strong community support make it the first choice for model experimentation. Inference performance has also improved significantly, thanks to the torch.compile compiler introduced in PyTorch 2.0 and to export paths such as ONNX; TorchScript, the older export route, is no longer under active development.

On GPU cloud platforms, PyTorch pairs naturally with elastic scaling: its distributed tooling (torchrun, DistributedDataParallel and FSDP) lets teams spin up clusters for distributed training and then move the same models into inference. With rich ecosystem libraries for computer vision, NLP and multimodal tasks, PyTorch is the safe bet for teams seeking versatility.
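Distributed data-parallel training, which PyTorch exposes through DistributedDataParallel, rests on one idea: each worker computes gradients on its own shard of the batch, and an all-reduce averages those gradients so every replica applies the same update. The pure-Python simulation below illustrates that idea on a one-parameter linear model. It does not use PyTorch's API; the "workers" are list slices and `all_reduce_mean` is a hypothetical stand-in for the collective operation.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pairs for a 1-D linear model y = w * x


def mse_grad(w: float, batch: List[Sample]) -> float:
    """Gradient of the mean squared error (w*x - y)^2 with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def all_reduce_mean(values: List[float]) -> float:
    """Stand-in for a collective all-reduce: every worker ends up with the mean."""
    return sum(values) / len(values)


def data_parallel_step(w: float, batch: List[Sample], num_workers: int, lr: float) -> float:
    """One simulated data-parallel SGD step across num_workers equal shards."""
    shard_size = len(batch) // num_workers
    if shard_size * num_workers != len(batch):
        raise ValueError("use equal-sized shards")
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)]
    # On real hardware each local gradient is computed on a different GPU.
    local_grads = [mse_grad(w, shard) for shard in shards]
    return w - lr * all_reduce_mean(local_grads)


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # 8 samples, true w = 3
w = 0.0
for _ in range(50):
    w = data_parallel_step(w, data, num_workers=4, lr=0.01)
print(f"learned w = {w:.4f}")
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, which is why data parallelism raises throughput without changing the math of the update.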

TensorFlow and JAX: the ecosystem builders

TensorFlow continues to be relevant in 2025, especially for enterprises that value its mature tooling, TensorFlow Serving, and TensorFlow Extended (TFX) pipelines. While its popularity among researchers has waned compared to PyTorch, its production ecosystem is hard to ignore.

JAX, on the other hand, has surged in adoption among advanced ML practitioners. Known for its functional programming style and composable function transformations (grad for automatic differentiation, jit for XLA compilation, vmap for vectorization), JAX excels in large-scale model training and reinforcement learning. GPU cloud users often choose JAX for workloads that demand cutting-edge performance and scalability.
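JAX's central idea is that transformations such as jax.grad take a pure function and return a new function. The sketch below mimics that function-in, function-out interface in plain Python using forward-mode automatic differentiation with dual numbers. It only illustrates the style: JAX itself traces functions and compiles them with XLA, and jax.grad uses reverse mode. The names `Dual` and `grad` here are local to this sketch, not JAX's API.

```python
from dataclasses import dataclass
from typing import Callable, Union

Number = Union[int, float]


@dataclass(frozen=True)
class Dual:
    """A value paired with its derivative (tangent) for forward-mode AD."""
    value: float
    tangent: float

    def __add__(self, other: Union["Dual", Number]) -> "Dual":
        other = _lift(other)
        return Dual(self.value + other.value, self.tangent + other.tangent)

    __radd__ = __add__

    def __mul__(self, other: Union["Dual", Number]) -> "Dual":
        other = _lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.value * other.value,
                    self.tangent * other.value + self.value * other.tangent)

    __rmul__ = __mul__


def _lift(x: Union[Dual, Number]) -> Dual:
    """Treat plain numbers as constants (zero derivative)."""
    return x if isinstance(x, Dual) else Dual(float(x), 0.0)


def grad(f: Callable[[Dual], Dual]) -> Callable[[float], float]:
    """Transform a pure scalar function into a function returning its derivative."""
    def df(x: float) -> float:
        return f(Dual(x, 1.0)).tangent
    return df


def f(x):
    return 3 * x * x + 2 * x + 1  # analytically, f'(x) = 6x + 2


df = grad(f)
print(df(2.0))
```

Because `f` is pure (no hidden state), the transformed function can be passed around and reused like any other value, which is the property JAX's transformations rely on.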

Hugging Face Transformers and Diffusers: NLP and beyond

The Hugging Face ecosystem has become indispensable for deploying state-of-the-art models. The Transformers library streamlines the use of pretrained language, vision and multimodal models, while Diffusers provides a foundation for generative image and audio tasks.

For GPU cloud deployments, Hugging Face libraries reduce friction by making it easy to fine-tune, serve and scale pretrained models that would otherwise require substantial in-house R&D. With integrations into MLOps pipelines and inference engines, these frameworks have moved from convenience tools to mission-critical infrastructure.

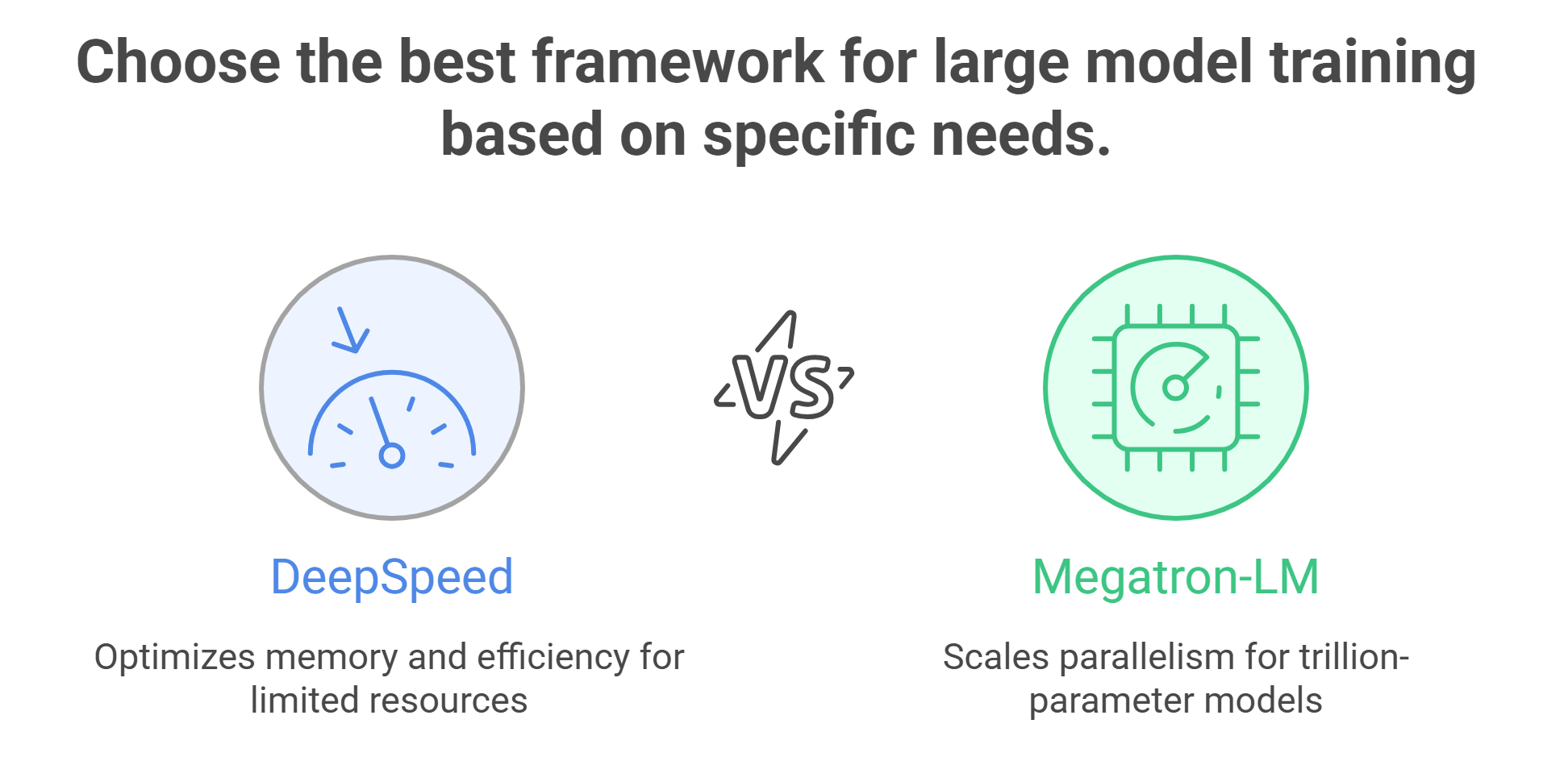

DeepSpeed and Megatron-LM: scaling giants

Training large language models or multimodal systems requires specialized frameworks for efficiency. DeepSpeed, developed by Microsoft, provides optimizations like ZeRO (Zero Redundancy Optimizer), which partitions optimizer states, gradients and, optionally, parameters across data-parallel GPUs so that massive models fit in limited per-GPU memory. Megatron-LM, developed by NVIDIA, offers tensor and pipeline parallelism tailored to models of up to a trillion parameters.
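ZeRO's savings come from partitioning model states across data-parallel GPUs instead of replicating them on each one. The arithmetic below follows the accounting in the ZeRO paper for mixed-precision Adam: 2 bytes per parameter for fp16 weights, 2 for fp16 gradients and 12 for fp32 optimizer states (a master copy of the weights, momentum and variance). It counts model states only, ignoring activations, buffers and fragmentation, so treat the results as lower bounds rather than DeepSpeed measurements.

```python
WEIGHT_BYTES = 2     # fp16 weights
GRAD_BYTES = 2       # fp16 gradients
OPTIM_BYTES = 12     # fp32 master weights + Adam momentum + Adam variance


def zero_model_state_gb(num_params: float, num_gpus: int, stage: int) -> float:
    """Per-GPU memory (GB) for model states under ZeRO stages 0-3.

    Stage 0 replicates everything (plain data parallelism); stage 1 shards
    optimizer states, stage 2 also shards gradients, stage 3 also shards weights.
    """
    if stage not in (0, 1, 2, 3):
        raise ValueError("stage must be 0, 1, 2 or 3")
    weights = WEIGHT_BYTES / (num_gpus if stage >= 3 else 1)
    grads = GRAD_BYTES / (num_gpus if stage >= 2 else 1)
    optim = OPTIM_BYTES / (num_gpus if stage >= 1 else 1)
    return num_params * (weights + grads + optim) / 1e9


# A 7.5B-parameter model on 64 GPUs, the setting the ZeRO paper uses to
# illustrate the stages.
for stage in range(4):
    print(f"stage {stage}: {zero_model_state_gb(7.5e9, 64, stage):.1f} GB per GPU")
```

Each stage trades extra communication for memory, which is why teams typically pick the lowest stage that lets the model fit.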

For GPU cloud users, these frameworks unlock performance that would be unattainable with vanilla training loops. They are particularly relevant for enterprises exploring foundation model development or customization.
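Tensor parallelism, as popularized by Megatron-LM, splits a layer's weight matrix across GPUs so each computes a slice of the output. The toy example below shards a weight matrix by columns across simulated devices (plain Python lists), computes each partial product independently and concatenates the results, which is the role an all-gather plays on real hardware. It also includes the common estimate of the idle "bubble" fraction in a GPipe-style pipeline schedule, (p - 1) / (m + p - 1) for p stages and m micro-batches. This is an illustration of the techniques, not Megatron-LM code.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix multiply: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def split_columns(w: Matrix, num_shards: int) -> List[Matrix]:
    """Shard a weight matrix column-wise, one shard per simulated GPU."""
    cols = len(w[0])
    if cols % num_shards:
        raise ValueError("columns must divide evenly across shards")
    width = cols // num_shards
    return [[row[s * width:(s + 1) * width] for row in w] for s in range(num_shards)]


def column_parallel_linear(x: Matrix, w: Matrix, num_shards: int) -> Matrix:
    """Each shard computes x @ W_shard; concatenating outputs mimics an all-gather."""
    partials = [matmul(x, shard) for shard in split_columns(w, num_shards)]
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


def pipeline_bubble_fraction(stages: int, micro_batches: int) -> float:
    """Idle fraction of a GPipe-style schedule: (p - 1) / (m + p - 1)."""
    return (stages - 1) / (micro_batches + stages - 1)


x = [[1.0, 2.0]]                        # one token, hidden size 2
w = [[1.0, 0.0, 2.0, 1.0],
     [0.0, 1.0, 1.0, 3.0]]              # 2 x 4 weight, split over 2 simulated GPUs
assert column_parallel_linear(x, w, 2) == matmul(x, w)
print(pipeline_bubble_fraction(4, 4), pipeline_bubble_fraction(4, 32))
```

The bubble estimate shows why pipeline parallelism needs many micro-batches per step: with 4 stages, going from 4 to 32 micro-batches cuts the idle fraction from about 43% to under 9%.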

Ray and distributed AI frameworks

Scaling AI workloads across many GPUs isn’t just a hardware problem – it’s also a software orchestration challenge. Ray has become one of the most widely used frameworks for distributed training and serving: its core tasks and actors handle scheduling and parallelization, while libraries such as Ray Train and Ray Serve cover training and model deployment.

In cloud environments, Ray Serve enables ML teams to build scalable inference services that automatically adjust the number of replicas to demand. Combined with GPU-aware scheduling (tasks and actors can request GPUs as resources), it helps keep resource use efficient across multimodal and multi-agent workloads.
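Demand-driven scaling boils down to a control rule: compare the current load with the load each replica should carry, then add or remove replicas within fixed bounds. The function below is a simplified, hypothetical version of that rule in plain Python. Ray Serve exposes comparable knobs in its autoscaling configuration (minimum and maximum replica counts and a target number of ongoing requests per replica), but its actual algorithm also smooths metrics over time and applies upscale and downscale delays, which this sketch omits.

```python
import math


def desired_replicas(ongoing_requests: int, target_per_replica: float,
                     min_replicas: int, max_replicas: int) -> int:
    """Replicas needed so each handles about target_per_replica requests.

    Hypothetical, simplified rule: no smoothing and no scale-up/scale-down delays.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if min_replicas > max_replicas:
        raise ValueError("min_replicas cannot exceed max_replicas")
    needed = math.ceil(ongoing_requests / target_per_replica)
    # Clamp to the configured bounds so idle services keep a warm replica
    # and traffic spikes cannot exhaust the GPU budget.
    return max(min_replicas, min(max_replicas, needed))


# Assumption: each GPU replica serves about 8 concurrent requests well.
for load in (0, 5, 40, 500):
    print(load, "->", desired_replicas(load, target_per_replica=8,
                                       min_replicas=1, max_replicas=16))
```

The upper bound is as much a cost control as a capacity limit: beyond it, requests queue instead of triggering more GPU spend.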

Stable Baselines3 and RLlib: reinforcement learning made practical

Reinforcement learning (RL) may not dominate headlines like LLMs, but it powers critical applications in robotics, logistics and operations optimization. Stable Baselines3 provides reliable PyTorch implementations of standard RL algorithms for quick prototyping, while RLlib, built on Ray, scales RL training across many workers and GPUs in the cloud.

For enterprises exploring automation and decision-making agents, these frameworks bridge the gap between research and deployment, ensuring that RL workloads can scale without infrastructure bottlenecks.
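Libraries such as Stable Baselines3 and RLlib package up the same basic loop: an agent acts in an environment, observes a reward and updates its policy. The self-contained sketch below shows that loop with tabular Q-learning on a hypothetical five-cell corridor where the agent is rewarded for reaching the right end. It uses no RL library and is deliberately tiny; SB3 and RLlib implement deep RL algorithms such as PPO and DQN on neural networks and, in RLlib's case, distribute sampling across many workers.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # move left or right


def step(state: int, action: int):
    """Hypothetical environment: reward 1.0 on reaching the goal, else 0.0."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train_q_learning(episodes: int = 200, alpha: float = 0.5,
                     gamma: float = 0.9, epsilon: float = 0.1, seed: int = 0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit, occasionally (or on ties) explore.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])  # temporal-difference update
            state = next_state
    return q


q = train_q_learning()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(N_STATES - 1)]
print(policy)
```

Scaling this loop is mostly about the environment side: collecting experience from many simulators in parallel, which is the part RLlib distributes across workers and GPUs.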

MLflow and Kubeflow: tying it all together

No discussion of frameworks is complete without considering lifecycle management. MLflow provides experiment tracking, a model registry and deployment tools, while Kubeflow integrates tightly with Kubernetes for full MLOps pipelines.

On GPU cloud platforms, these frameworks are indispensable for enterprises running multiple models, experimenting with new architectures, and needing robust governance. They provide the structure required to keep multimodal inference and training manageable at scale.

How enterprises should choose

The sheer variety of frameworks in 2025 can be overwhelming, but selection should be guided by business needs:

- For flexibility and breadth: PyTorch and Hugging Face.

- For advanced research and performance: JAX, DeepSpeed and Megatron-LM.

- For scaling across GPUs: Ray and RLlib.

- For lifecycle and governance: MLflow and Kubeflow.

The key is not to adopt every framework but to build a curated stack that aligns with workloads, team expertise and long-term goals.

Looking ahead

Open-source frameworks are the connective tissue between GPU cloud infrastructure and real-world AI applications. They shape how efficiently models are trained, deployed and scaled – and they influence the economics of AI just as much as hardware choices do.

For CTOs and ML leaders, the priority is staying current with frameworks that enable faster development, smoother scaling and more reliable deployment. In 2025, that means looking beyond a single library and embracing a stack that balances versatility, specialization and operational maturity.

What is especially important moving forward is the interplay between frameworks and the evolving GPU cloud itself. As hardware generations advance and as inference requirements grow more complex, frameworks will need to keep pace with new levels of scalability, cross-platform portability and deeper MLOps integration. Enterprises that continuously update their toolchains – rather than sticking rigidly to a single “golden stack” – will be best positioned to take advantage of these shifts.

Ultimately, the GPU cloud provides the horsepower, but frameworks determine how effectively that horsepower is harnessed. Teams that invest in both will set the pace for innovation, delivering AI that is not only powerful but also practical, sustainable and ready for production at scale.

Frequently Asked Questions About Open-Source Frameworks for GPU Cloud (2025)

1. Why do open-source frameworks matter for GPU cloud efficiency and cost?

Because the framework determines how your workload runs on the GPU cloud. A well-matched, optimized framework can minimize latency, maximize throughput, and cut operating costs by fully using GPU parallelism. Open source also brings portability and community-driven innovation, helping teams avoid lock-in while staying flexible as hardware evolves.

2. PyTorch vs. TensorFlow vs. JAX: which should I pick for GPU cloud in 2025?

PyTorch is the versatile “workhorse” for research and production. Its dynamic graph, torch.compile and ONNX export, and rich vision/NLP/multimodal ecosystem pair well with elastic scaling on the GPU cloud.

TensorFlow remains strong for enterprises that value its mature production tooling (e.g., TensorFlow Serving and TFX pipelines).

JAX is popular with advanced practitioners who want cutting-edge performance and scalability via its functional style and composable transformations (automatic differentiation, XLA compilation, vectorization). It is often chosen for large-scale training and reinforcement learning.

3. How do I scale large language models on the GPU cloud without running out of memory?

Use specialized scaling frameworks:

- DeepSpeed (e.g., ZeRO) enables massive model training on limited GPU memory.

- Megatron-LM provides tensor and pipeline parallelism tailored for very large (even trillion-parameter) models.

Together, they unlock training efficiency that would be hard to achieve with vanilla loops.

4. What should I use for distributed training and cloud-scale serving?

Ray is a widely used choice for distributed training and serving. It offers abstractions for task scheduling, parallelization, and deployment, so GPU-cloud inference services can scale with demand. Paired with GPU scheduling, Ray helps use resources efficiently across multimodal and multi-agent workloads.

5. Which open-source frameworks make reinforcement learning practical at scale?

- Stable Baselines3: reliable RL implementations for quick prototyping.

- RLlib: scales RL workloads across GPUs in the cloud so production deployments aren’t blocked by infrastructure limits.

This combo bridges the gap from RL research to real-world operations (robotics, logistics, optimization).