This article outlines the core capabilities that define a truly production-ready inference engine and provides CTOs with a practical checklist for evaluating latency, scalability, routing, observability, governance and cost efficiency in real-world AI deployments.

What you’ll learn:

- How to assess consistent low-latency performance under spiky, multi-model workloads

- Key throughput optimizations for LLM and multimodal inference pipelines

- What smart, signal-driven autoscaling should look like for modern AI systems

- How multi-model routing, orchestration and versioning reduce operational overhead

- The observability metrics that matter for performance, saturation and cost control

- Why support for modern inference frameworks prevents vendor lock-in

- How unified training–fine-tuning–serving ecosystems simplify production workflows

- What deployment flexibility and governance features enterprises should require

- How cost efficiency is achieved through batching, GPU sharing and intelligent scheduling

When an AI model moves from prototype to production, the deployment layer becomes just as important as the model itself. An inference engine isn’t just a runtime – it’s the operational backbone that determines latency, scalability, reliability and cost efficiency under real-world load.

For CTOs, choosing the wrong inference engine can lead to unpredictable performance, infrastructure sprawl or runaway costs. Choosing the right one unlocks high-throughput applications, smooth scaling and a roadmap that can evolve without painful re-architecture.

A “production-ready” inference engine isn’t defined by marketing claims. It’s defined by how well it handles the complexity and volatility of real systems: variable traffic, multi-model workloads, security requirements, multi-team collaboration and continuous iteration.

Below is a practical checklist that helps CTOs evaluate whether an inference engine can truly support enterprise-grade AI.

1. Consistent low latency under unpredictable load

Testing inference on a single developer machine tells you little about how it behaves at scale. Production workloads are spiky, multi-tenant and often require multiple models running simultaneously. A production-ready inference engine must maintain low latency even when:

- traffic surges beyond expected levels

- multiple models contend for GPU memory

- batch sizes change in real time

- routing decisions vary across endpoints

This consistency doesn’t happen by accident. It requires intelligent GPU scheduling, memory-aware placement, dynamic batching and fast networking. If an inference engine can’t sustain performance under load, it isn’t production-ready.
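Dynamic batching is the easiest of these mechanisms to show in miniature. The sketch below is an offline simulation, not any engine's implementation. It assumes each request carries an arrival timestamp, and it closes a batch when the batch reaches `max_batch_size` or when `max_wait_ms` has passed since the batch's first request arrived. `Request`, `form_batches` and both parameters are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    req_id: str
    arrival_ms: float


def form_batches(requests: List[Request], max_batch_size: int, max_wait_ms: float) -> List[List[Request]]:
    """Group requests the way a size-or-timeout dynamic batcher would.

    A batch is dispatched when it holds max_batch_size requests or when
    max_wait_ms has elapsed since its first request arrived, whichever
    comes first.
    """
    batches: List[List[Request]] = []
    current: List[Request] = []
    for req in sorted(requests, key=lambda r: r.arrival_ms):
        batch_full = len(current) >= max_batch_size
        timed_out = bool(current) and req.arrival_ms - current[0].arrival_ms > max_wait_ms
        if current and (batch_full or timed_out):
            batches.append(current)  # dispatch this batch to the GPU
            current = []
        current.append(req)
    if current:
        batches.append(current)  # flush the final partial batch
    return batches
```

With `max_batch_size=3` and `max_wait_ms=5`, requests arriving at 0, 1, 2, 3, 20 and 21 ms form three batches: the first three, then the one at 3 ms alone (the batch was already full), then the last two. The two knobs trade off directly: a longer wait fills batches and improves GPU utilization, but adds queueing delay to the first request in each batch.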

2. High-throughput execution for LLM and multimodal workloads

Throughput matters as much as latency – especially for LLM products or retrieval-augmented generation pipelines. A good inference engine optimizes:

- token streaming

- KV-cache reuse and eviction

- fused kernels

- paged attention

- speculative decoding

- parallel request scheduling
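KV-cache reuse and paged attention both rest on storing attention keys and values in fixed-size blocks. The toy class below is a sketch under simplifying assumptions, not how vLLM or any specific engine implements it. It keys each block by the full token prefix that ends at that block, so requests sharing a prompt prefix (for example, a common system prompt) can skip recomputing those blocks. It also evicts least-recently-used blocks once a hypothetical `capacity_blocks` budget is exceeded. Placeholder strings stand in for the GPU tensors a real engine would hold.

```python
from collections import OrderedDict
from typing import List, Tuple


class PrefixBlockCache:
    """Toy block-level KV cache with prefix reuse and LRU eviction.

    Each cached block is identified by the entire token prefix ending at that
    block. A placeholder string stands in for the key/value tensors a real
    engine would keep in GPU memory.
    """

    def __init__(self, block_size: int, capacity_blocks: int):
        self.block_size = block_size
        self.capacity_blocks = capacity_blocks
        self._blocks = OrderedDict()  # prefix tuple -> placeholder, least recently used first

    def _block_keys(self, tokens: List[int]) -> List[Tuple[int, ...]]:
        # Only full blocks are cacheable; a trailing partial block is skipped.
        full_len = len(tokens) - len(tokens) % self.block_size
        return [tuple(tokens[:end]) for end in range(self.block_size, full_len + 1, self.block_size)]

    def lookup(self, tokens: List[int]) -> int:
        """Return how many leading tokens can reuse cached KV blocks."""
        reused = 0
        for key in self._block_keys(tokens):
            if key not in self._blocks:
                break  # later blocks depend on this one, so stop at the first miss
            self._blocks.move_to_end(key)  # mark as recently used
            reused += self.block_size
        return reused

    def insert(self, tokens: List[int]) -> None:
        """Cache every full block of `tokens`, evicting least-recently-used blocks."""
        for key in self._block_keys(tokens):
            if key in self._blocks:
                self._blocks.move_to_end(key)
            else:
                self._blocks[key] = f"kv-block[{len(key)}]"
            while len(self._blocks) > self.capacity_blocks:
                self._blocks.popitem(last=False)  # drop the least recently used block
```

Keying on the whole prefix means a block is only reused when everything before it matches. That is what makes reuse safe: in causal attention, a block's keys and values depend on all earlier tokens.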

Modern AI applications rarely rely on just one model. Embedding models, rerankers, vision encoders and language models often run concurrently within a single request path. A production inference engine must treat throughput holistically, optimizing the full directed acyclic graph (DAG) of inference steps instead of focusing on a single model in isolation.
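To make the "full DAG" point concrete: if each inference step declares which steps it depends on, a scheduler can find the steps that are free to run in parallel. The sketch uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+). The pipeline and its step names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical RAG + multimodal pipeline: each step maps to the steps it depends on.
PIPELINE = {
    "embed_query": set(),
    "encode_image": set(),
    "retrieve": {"embed_query"},
    "rerank": {"retrieve"},
    "generate": {"rerank", "encode_image"},
}


def execution_waves(graph):
    """Group steps into waves; every step within a wave can run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all steps whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)
    return waves
```

For this pipeline the waves are `[embed_query, encode_image]`, `[retrieve]`, `[rerank]`, `[generate]`. Image encoding overlaps with the embed, retrieve and rerank chain, so end-to-end latency is set by the longest dependency path rather than by the sum of every step.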

3. Smart autoscaling that reacts to real inference signals

Autoscaling based on CPU utilization or simple request rate isn’t sufficient for AI workloads. LLMs exhibit unique scaling patterns – queue depth, p95 latency, batch inflation and KV-cache saturation all influence how scaling should behave.

A production-grade inference engine should support:

- scaling based on latency thresholds

- scaling based on queue growth and drain rates

- multi-model, per-endpoint scaling rules

- cost-aware policies that minimize GPU idle time

Autoscaling should also adapt to day-to-day workload patterns without manual tuning. CTOs should evaluate how quickly new GPUs can be provisioned, how gracefully scaling occurs and whether the engine avoids oscillation, that is, repeatedly adding and removing replicas as demand fluctuates.
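The policy below sketches how latency, queue depth and queue growth can drive scaling decisions. It includes two common anti-oscillation guards: separate scale-up and scale-down thresholds (hysteresis) and a cooldown period after every change. All thresholds and names are illustrative assumptions, not recommended values or any engine's API.

```python
import math
from dataclasses import dataclass


@dataclass
class Signals:
    p95_latency_ms: float
    queue_depth: int            # requests waiting across all replicas
    queue_growth_per_s: float   # > 0 means arrivals outpace the drain rate


@dataclass
class ScalingPolicy:
    scale_up_p95_ms: float = 800.0     # illustrative latency target
    scale_down_p95_ms: float = 400.0   # lower bar than scale-up: hysteresis
    max_queue_per_replica: int = 8
    min_replicas: int = 1
    max_replicas: int = 16
    cooldown_s: float = 120.0          # hold steady this long after any change


class Autoscaler:
    def __init__(self, policy: ScalingPolicy, replicas: int):
        self.policy = policy
        self.replicas = replicas
        self._last_change_s = -math.inf

    def decide(self, s: Signals, now_s: float) -> int:
        p = self.policy
        if now_s - self._last_change_s < p.cooldown_s:
            return self.replicas  # still in cooldown: avoid flapping

        queue_per_replica = s.queue_depth / self.replicas
        overloaded = (
            s.p95_latency_ms > p.scale_up_p95_ms
            or queue_per_replica > p.max_queue_per_replica
            or (s.queue_growth_per_s > 0 and queue_per_replica > p.max_queue_per_replica / 2)
        )
        underloaded = (
            s.p95_latency_ms < p.scale_down_p95_ms
            and s.queue_growth_per_s <= 0
            and queue_per_replica < p.max_queue_per_replica / 2
        )

        desired = self.replicas
        if overloaded:
            # Jump to the replica count the backlog implies (at least +1).
            desired = max(self.replicas + 1, math.ceil(s.queue_depth / p.max_queue_per_replica))
        elif underloaded:
            desired = self.replicas - 1  # scale down one step at a time

        desired = min(max(desired, p.min_replicas), p.max_replicas)
        if desired != self.replicas:
            self.replicas = desired
            self._last_change_s = now_s
        return self.replicas
```

Scaling up aggressively but down one replica at a time is a deliberate asymmetry: under-provisioning shows up immediately as latency, while over-provisioning only costs idle GPU time until the next step down.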

4. Multi-model routing and orchestration without manual overhead

Most production systems serve multiple models simultaneously: LLMs, RAG components, classifiers, detectors, rerankers or domain-specific fine-tunes. If your inference layer forces you to maintain separate services, containers or GPU instances per model, you’ll hit scaling issues fast.

A production-ready inference engine should handle:

- routing traffic to the correct model based on version, tier or metadata

- serving multiple models on shared GPU nodes without interference

- prioritizing high-value workloads

- hot-swapping model versions with zero downtime

- shadow deployments that mirror live traffic to a candidate model for evaluation

These capabilities replace hours of DevOps work with predictable automation – a major advantage for lean engineering teams.
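A minimal sketch of metadata-based routing with a weighted canary and a shadow target follows. It assumes a hypothetical routing table keyed by customer tier. A stable hash of the request (or user) ID keeps each caller pinned to one version, and the shadow model would receive a mirrored copy whose responses are logged for comparison but never returned to users.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Route:
    model: str    # model name plus version tag
    weight: int   # relative share of this tier's traffic


@dataclass
class RoutingTable:
    routes_by_tier: Dict[str, List[Route]]
    shadow_model: Optional[str] = None  # gets a mirrored copy; its output is never returned


def pick_route(table: RoutingTable, tier: str, request_id: str) -> Tuple[str, Optional[str]]:
    """Return (primary_model, shadow_model) for one request."""
    routes = table.routes_by_tier[tier]
    total = sum(r.weight for r in routes)
    # A stable hash keeps a given ID on the same version across calls.
    bucket = int(hashlib.sha256(request_id.encode()).hexdigest(), 16) % total
    for route in routes:
        if bucket < route.weight:
            return route.model, table.shadow_model
        bucket -= route.weight
    raise RuntimeError("unreachable: the weights cover every bucket")
```

With this shape, promoting a canary from 10% to 100% of a tier is a table update rather than a redeploy, which is what makes zero-downtime version swaps routine.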

5. Observability that exposes latency, throughput, cost and saturation

CTOs need clear answers to three questions at all times:

- How are models performing?

- Are GPUs saturated or underutilized?

- Where is money being wasted?

A production-ready inference engine must offer real-time visibility into token throughput, p50/p95/p99 latency, queue depth, GPU memory usage, batching efficiency, cost per endpoint and instance-level utilization patterns. Without this visibility, you can’t optimize, forecast capacity or diagnose regressions. Good observability replaces guesswork with operational clarity.
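Percentile latency and unit cost are simple to compute once the raw data is collected. The sketch uses the nearest-rank percentile definition and assumes cost is attributed from the GPU-seconds an endpoint consumes at a flat hourly GPU price. The function names and the price are hypothetical inputs, not real pricing.

```python
import math
from typing import Dict, List


def percentile(samples: List[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # integer-friendly form avoids float drift
    return ordered[max(rank, 1) - 1]


def endpoint_report(latencies_ms: List[float], tokens_generated: int,
                    gpu_seconds: float, gpu_hourly_usd: float) -> Dict[str, float]:
    """Latency percentiles plus cost attributed to one endpoint."""
    cost_usd = gpu_seconds / 3600 * gpu_hourly_usd
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "p99_ms": percentile(latencies_ms, 99),
        "cost_usd": cost_usd,
        "usd_per_1k_tokens": cost_usd / tokens_generated * 1000,
    }
```

For example, one GPU-hour at an assumed $2.00 that produces one million tokens works out to $0.002 per 1,000 tokens. Reporting p95 and p99 alongside p50 exposes tail latency that a median alone hides.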

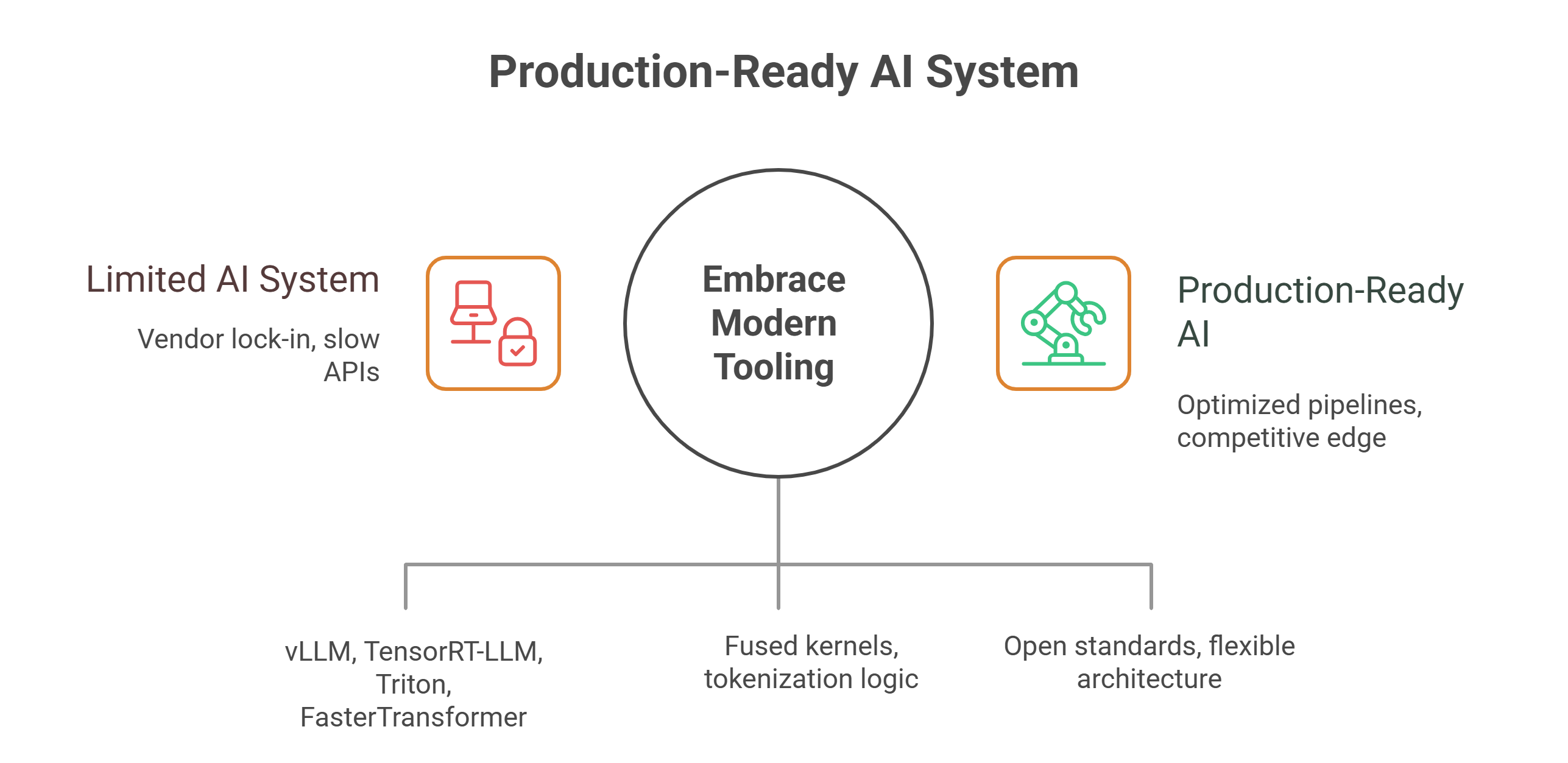

6. Support for modern inference tooling and frameworks

AI tooling evolves monthly. A production-ready system cannot lock you into proprietary runtimes or slow-moving APIs. It should support modern, high-performance frameworks such as:

- vLLM

- TensorRT-LLM

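- NVIDIA Triton Inference Server

- FasterTransformer (legacy: NVIDIA has moved its development into TensorRT-LLM)

- Hugging Face Text Generation Inference (TGI)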

- custom PyTorch inference loops

The inference engine should let your team bring its own code, fused kernels, tokenization logic and custom modifications – not force rewrites or impose hard limitations.

7. Separation of training, fine-tuning and serving without duplicating infrastructure

Training and inference require different resource allocation patterns, but they should not require different infrastructure stacks. When an inference engine integrates cleanly into the broader compute platform, teams gain several advantages:

- fine-tuned models can move to production without being re-packaged or copied to a separate stack

- model artifacts and checkpoints remain consistent

- retraining loops feed directly into deployment workflows

- data governance and security remain unified

- CI/CD applies across training and inference

A production-ready inference engine sits inside a broader, cohesive ecosystem rather than operating as a standalone service.
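Keeping model artifacts and checkpoints consistent can be as simple as recording a content hash when training writes a checkpoint and verifying it before serving loads it. The registry below is a hypothetical in-memory stand-in for whatever model registry a platform provides; only `hashlib`, `pathlib` and `tempfile` are real library APIs.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, Tuple


def sha256_of(path: Path) -> str:
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):  # read in 1 MiB chunks
            digest.update(chunk)
    return digest.hexdigest()


class ModelRegistry:
    """Hypothetical registry shared by fine-tuning jobs and the serving layer."""

    def __init__(self) -> None:
        self._entries: Dict[Tuple[str, str], Tuple[Path, str]] = {}

    def register(self, name: str, version: str, path: Path) -> None:
        # Called by the training or fine-tuning job right after it writes the checkpoint.
        self._entries[(name, version)] = (path, sha256_of(path))

    def resolve_for_serving(self, name: str, version: str) -> Path:
        # Called by the inference layer before loading; refuses altered artifacts.
        path, expected = self._entries[(name, version)]
        if sha256_of(path) != expected:
            raise ValueError(f"{name}:{version} has changed since it was registered")
        return path


def demo() -> Tuple[bool, bool]:
    """Register a fake checkpoint, serve it, then overwrite it and try again."""
    with tempfile.TemporaryDirectory() as tmp:
        ckpt = Path(tmp) / "adapter.bin"
        ckpt.write_bytes(b"fine-tuned weights v1")
        registry = ModelRegistry()
        registry.register("support-bot", "v1", ckpt)
        served_ok = registry.resolve_for_serving("support-bot", "v1") == ckpt
        ckpt.write_bytes(b"overwritten by mistake")
        try:
            registry.resolve_for_serving("support-bot", "v1")
            change_caught = False
        except ValueError:
            change_caught = True
    return served_ok, change_caught
```

Because the same registry entry is written by training and read by serving, a fine-tuned model is promoted by reference rather than by copying files between stacks.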

8. Flexible deployment models for enterprise and hybrid environments

For CTOs building long-term infrastructure strategies, deployment flexibility matters. A production-grade inference engine should operate consistently across:

- cloud environments

- hybrid on-prem + cloud setups

- private clusters

- isolated enterprise networks

- multi-team, multi-tenant organizations

This ensures that compliance, security or contractual requirements do not become blockers to scale. It also prevents workflow fragmentation when teams manage multiple environments.

9. Strong security and governance for multi-team environments

Once your team scales beyond a handful of developers, governance becomes essential. A production inference engine should provide:

- fine-grained role-based access controls

- per-endpoint and per-model permissions

- encryption of data in transit and at rest

- audit logging

- isolation between teams and namespaces

For CTOs in regulated industries or enterprise SaaS, governance is not optional – it’s a foundational requirement.
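Per-endpoint permissions, namespace isolation and audit logging fit together in a small amount of code. The sketch assumes a hypothetical naming scheme where endpoints live under a team namespace (`team-a/chat`) and roles grant actions on glob patterns. It records every decision, allowed or denied, as a JSON audit line.

```python
import json
import time
from fnmatch import fnmatchcase
from typing import Dict, List, Set, Tuple

# Hypothetical grants: role -> set of (action, endpoint glob pattern)
GRANTS: Dict[str, Set[Tuple[str, str]]] = {
    "team-a-dev": {("invoke", "team-a/*"), ("deploy", "team-a/*")},
    "team-b-analyst": {("invoke", "team-b/reports")},
}


class AccessController:
    def __init__(self, role_bindings: Dict[str, Set[str]], grants: Dict[str, Set[Tuple[str, str]]]):
        self.role_bindings = role_bindings  # user -> roles
        self.grants = grants
        self.audit_log: List[str] = []

    def check(self, user: str, action: str, endpoint: str) -> bool:
        allowed = any(
            granted_action == action and fnmatchcase(endpoint, pattern)
            for role in self.role_bindings.get(user, set())
            for granted_action, pattern in self.grants.get(role, set())
        )
        # Denials are logged as well as grants, so the trail shows attempted access too.
        self.audit_log.append(json.dumps({
            "ts": time.time(), "user": user, "action": action,
            "endpoint": endpoint, "allowed": allowed,
        }))
        return allowed
```

In this model, a user bound only to `team-a-dev` can deploy to `team-a/chat` but cannot even invoke `team-b/reports`, and both decisions end up in the audit log.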

10. Predictable cost efficiency at scale

Inference spending grows quickly – often faster than training costs. A production-ready engine must help control this through:

- fractional GPU allocation for small models

- batching optimization

- intelligent placement to reduce idle GPU memory

- hybrid reserved + on-demand pricing models

- utilization-aware autoscaling

Running inference at scale without cost discipline is one of the fastest ways to burn budget. A robust inference layer prevents this by design, not by accident.
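Fractional GPU allocation and placement are, at heart, a bin-packing problem. The sketch below applies the classic first-fit-decreasing heuristic to hypothetical per-model memory footprints. It reserves a configurable headroom fraction on each GPU, an assumption standing in for activation and KV-cache growth. Production schedulers also weigh compute contention and latency isolation, which this sketch ignores.

```python
from typing import Dict, List


def pack_models(model_mem_gb: Dict[str, float], gpu_mem_gb: float, headroom: float = 0.1) -> List[List[str]]:
    """First-fit decreasing: place each model, largest first, on the first GPU with room.

    Returns one list of model names per GPU used.
    """
    usable = gpu_mem_gb * (1 - headroom)
    gpus: List[list] = []  # each entry: [free_gb, [model names]]
    for name, mem in sorted(model_mem_gb.items(), key=lambda kv: kv[1], reverse=True):
        if mem > usable:
            raise ValueError(f"{name} needs {mem} GB; a single GPU offers {usable:.1f} GB")
        for gpu in gpus:
            if gpu[0] >= mem:
                gpu[0] -= mem
                gpu[1].append(name)
                break
        else:  # no existing GPU had room, so open a new one
            gpus.append([usable - mem, [name]])
    return [names for _, names in gpus]
```

For example, five small models needing 14, 10, 8, 6 and 4 GB pack onto two 24 GB GPUs (`[a, b]` and `[c, d, e]`) instead of occupying five dedicated ones. This is the kind of idle-memory saving that fractional allocation and intelligent placement aim for.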

How GMI Cloud aligns with this checklist

GMI Cloud’s Inference Engine is built around these production requirements, combining low-latency serving, multi-model routing, KV-cache optimization, intelligent autoscaling, high-bandwidth networking and unified observability.

Paired with Cluster Engine and GMI’s GPU-optimized infrastructure, the platform provides CTOs with the performance, visibility and operational control needed to support demanding AI applications at scale.

Frequently asked questions about production-ready inference engines

1. What does a production-ready inference engine actually mean in this context?

It is an inference layer that can handle real-world volatility: spiky traffic, multiple models, strict latency targets, security needs and continuous iteration. It is judged by how well it keeps latency, reliability and cost under control in production, not by benchmark numbers on a single machine.

2. How does a production inference engine keep latency low under unpredictable load?

It combines intelligent GPU scheduling, memory-aware placement, dynamic batching and fast networking so that latency stays low even when traffic surges, batch sizes change or several models share the same GPUs. If latency degrades as load becomes more complex, the engine is not truly production-ready.

3. Why is autoscaling based on real inference signals so important for LLM workloads?

LLM traffic is better described by queue depth, latency and cache saturation than by simple CPU usage or request rate. A production-grade engine scales based on latency thresholds, queue growth and per-endpoint rules, while also trying to minimize idle GPU time.

4. What kind of observability should CTOs expect from an inference platform?

You should be able to see token throughput, p50/p95/p99 latency, queue depth, GPU memory usage, batching efficiency, cost per endpoint and utilization patterns across instances. Without this level of visibility it is almost impossible to optimize performance, control spend or debug regressions.

5. How should training, fine-tuning and serving relate in modern AI infrastructure?

They should share the same underlying platform instead of living on separate stacks. In a production-ready setup, fine-tuned models move to serving quickly, checkpoints and artifacts stay consistent, retraining loops feed into deployment and the same governance and CI/CD principles apply across the lifecycle.